Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: NiFi: Convert data into lower case

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

NiFi: Convert data into lower case

- Labels:

-

Apache NiFi

Created 09-13-2018 10:36 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We have headers coming in multiple sized like in lower and upper case. As we are converting CSV to Json, we are first converting the csv schema into Avro Schema where avro is not accepting upper case column name.

Kindly advice how to convert upper case into lower case.

Thanks in advance.

Created on 09-14-2018 01:27 PM - edited 08-17-2019 11:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We can achieve this case in two ways using UpdateRecord Processor ,QueryRecord processor

Using UpdateRecord processor:

As your data having Header so use Csv reader use

Then use UpdateRecord processor with

Replacement Value Strategy

Record Path Value

Then swap the record path value, add new property as

/lowercase_filed_name

/upper_lowercase_field_name

Refer to this link for more details regards to UpdateRecord processor.

Configure the Record Writer avro schema registry matching with your new filed names(i.e lowercase filed names).

Then processor will swap the values from the record path and keep them to your new lowercase field names.

2.Using QueryRecord processor:

Add new property in QueryRecord processor and keep the query as

select col1,col2,col3.. from FLOWFILE

Then configure the Record Writer controller service avro schema matching with the select statement column names(case sensitive), Then processor will write the flowfile with new filed names.

For more details regards to convert record processor refer to this link.

You can use either of these methods choose which is better fit for your case.

I tried some simple flow using both processors, Use the attached flow xml as a reference and change per your case

Created 09-13-2018 11:15 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you add more details regards to Avro is not accepting Upper case column name means?

Some sample data will be useful to understand root cause of the issue..

Created on 09-14-2018 11:51 AM - edited 08-17-2019 11:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

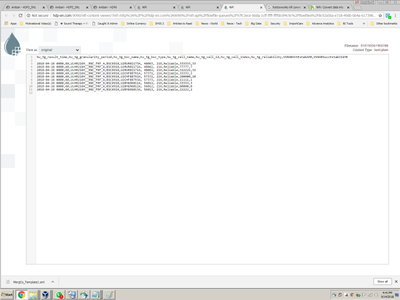

Data coming in ConvertRecord from CSV to AVRO schema.

Avro_Schema

Outgoing Data

As you can see, incoming data have column name in different format. I changed the Avro Schema to reflect the same column name format but then it loaded NULL values in all the columns.

Created on 09-14-2018 01:27 PM - edited 08-17-2019 11:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

We can achieve this case in two ways using UpdateRecord Processor ,QueryRecord processor

Using UpdateRecord processor:

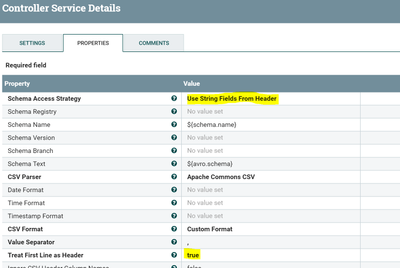

As your data having Header so use Csv reader use

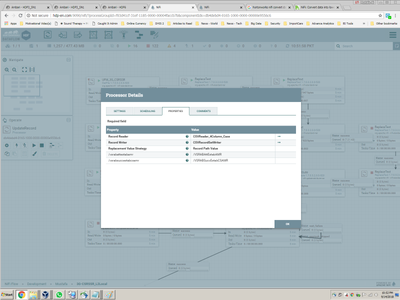

Then use UpdateRecord processor with

Replacement Value Strategy

Record Path Value

Then swap the record path value, add new property as

/lowercase_filed_name

/upper_lowercase_field_name

Refer to this link for more details regards to UpdateRecord processor.

Configure the Record Writer avro schema registry matching with your new filed names(i.e lowercase filed names).

Then processor will swap the values from the record path and keep them to your new lowercase field names.

2.Using QueryRecord processor:

Add new property in QueryRecord processor and keep the query as

select col1,col2,col3.. from FLOWFILE

Then configure the Record Writer controller service avro schema matching with the select statement column names(case sensitive), Then processor will write the flowfile with new filed names.

For more details regards to convert record processor refer to this link.

You can use either of these methods choose which is better fit for your case.

I tried some simple flow using both processors, Use the attached flow xml as a reference and change per your case

Created on 09-14-2018 06:09 PM - edited 08-17-2019 11:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

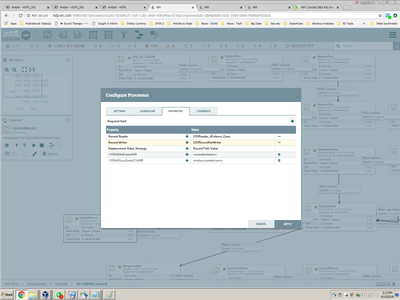

UpdateRecord Error: Can not write to schema, schema is unknow.

UpdateRecord Properties

CSV Reader

CSV Writer

Created on 09-14-2018 06:45 PM - edited 08-17-2019 10:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

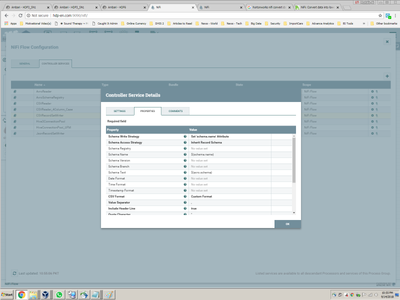

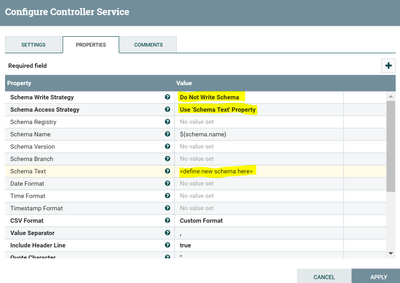

In your CSVSetWriter controller service configure as

in Schema Text Property define new schema i.e. avro schema with lowercase field names.

Let us know if you are having any issues..

Created on 09-15-2018 10:34 AM - edited 08-17-2019 10:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It got successful but out out was empty.

Source Header: Aim is to convert mentioned last 2 columns in lower case.

hw_3g_result_time,hw_3g_granularity_period,hw_3g_bsc_name,hw_3g_bsc_type,hw_3g_cell_name,hw_3g_cell_id,hw_3g_cell_index,hw_3g_reliability,VSRABAttEstabAMR,VSRABSuccEstabCSAMR

CSVWriter Properties

Avro Schema

UpdateRecord Property

Source Header: Aim is to convert mentioned last 2 columns in lower case.

hw_3g_result_time,hw_3g_granularity_period,hw_3g_bsc_name,hw_3g_bsc_type,hw_3g_cell_name,hw_3g_cell_id,hw_3g_cell_index,hw_3g_reliability,VSRABAttEstabAMR,VSRABSuccEstabCSAMR

Created on 09-15-2018 11:49 AM - edited 08-17-2019 10:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

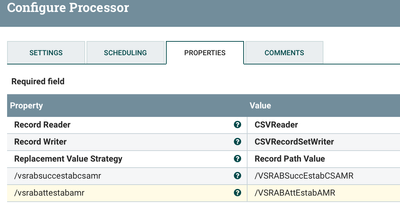

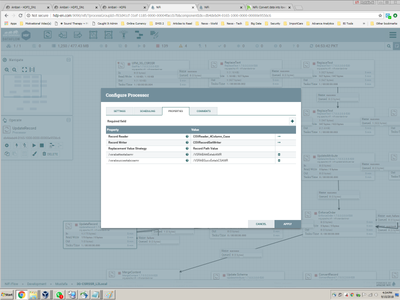

Add change the added dynamic properties in update record processor as shown below:

/vsrabsuccestabcsamr

/VSRABSuccEstabCSAMR

/vsrabattestabamr

/VSRABAttEstabAMR

As we swapping the data to lower case, so keep the lowercase field names as property name and upper lower mix filed name as property value.

Then processor will add the field value to the newly added field names.

Created on 09-15-2018 12:07 PM - edited 08-17-2019 10:59 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

pmresult-67109368-60-201804260000-201804260100-ori.zip (Please unzip, its a csv file)

Same result :-(. Also attaching XML and Source data file for testing.

Empty Queue

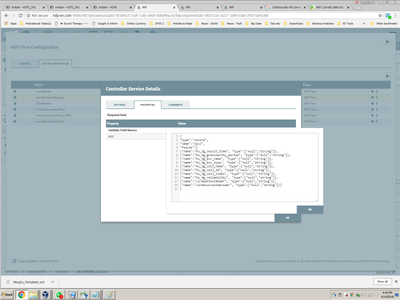

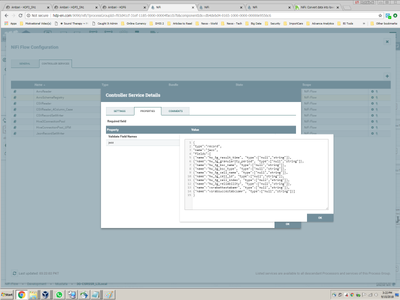

Avro Schema:

{ "type":"record", "name":"jazz", "fields":[ {"name":"hw_3g_result_time", "type":["null","string"]}, {"name":"hw_3g_granularity_period", "type":["null","string"]}, {"name":"hw_3g_bsc_name", "type":["null","string"]}, {"name":"hw_3g_bsc_type", "type":["null","string"]}, {"name":"hw_3g_cell_name", "type":["null","string"]}, {"name":"hw_3g_cell_id", "type":["null","string"]}, {"name":"hw_3g_cell_index", "type":["null","string"]}, {"name":"hw_3g_reliability", "type":["null","string"]}, {"name":"vsrabattestabamr", "type":["null","string"]}, {"name":"vsrabsuccestabcsamr", "type":["null","string"]}] }

Created 09-15-2018 12:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I tried with your UpdateRecord configs, not able to get your csv data(I used some sample data from your question screenshot) and the flow worked as expected.

InputData:

hw_3g_result_time,hw_3g_granularity_period,hw_3g_bsc_name,hw_3g_bsc_type,hw_3g_cell_name,hw_3g_cell_id,hw_3g_cell_index,hw_3g_reliability,VSRABAttEstabAMR,VSRABSuccEstabCSAMR 2018-04-26 0000,60,ULHR,BSC,UQRUR,48063,216,Reliable,33333,33 2018-04-26 0000,60,ULHR,BSC,UQRUR,48063,216,Reliable,77777,7

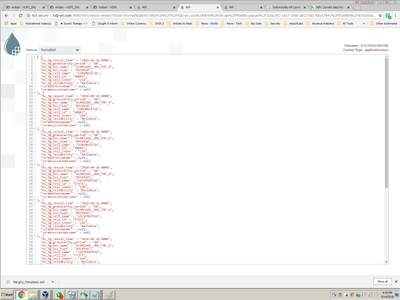

OutputData:

hw_3g_result_time,hw_3g_granularity_period,hw_3g_bsc_name,hw_3g_bsc_type,hw_3g_cell_name,hw_3g_cell_id,hw_3g_cell_index,hw_3g_reliability,vsrabattestabamr,vsrabsuccestabcsamr 2018-04-26 0000,60,ULHR,BSC,UQRUR,48063,216,Reliable,33333,33 2018-04-26 0000,60,ULHR,BSC,UQRUR,48063,216,Reliable,77777,7

I attached the sample flow template that I have tried update-record-222899.xml .Use the attached flow xml as a reference and change per your case.