NiFi ValidateCSV processor throwing away valid file

Labels: Apache NiFi

Created on 01-16-2018 03:52 PM - edited 08-18-2019 12:26 AM

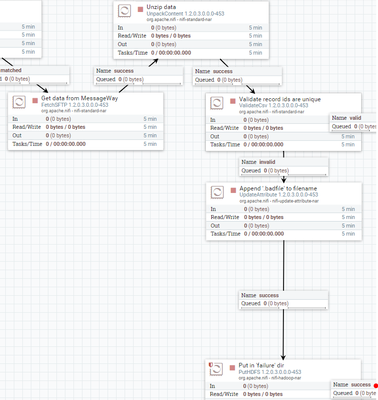

I'm having an issue with the NiFi ValidateCSV processor claiming a valid file is invalid. The file passes through the processor as valid on the first attempt, but if I leave the flow running and send the same file through again immediately afterward, the processor reports it as invalid. If I stop and start the flow between validations, however, the file is always valid. It seems like the processor keeps some of the flowfile content in memory when it evaluates the second pass, causing it to fail.

The schema only checks the first column for uniqueness with Unique(); every other column accepts any value. It's set up like this:

"Unique(), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*"))"

See attached for the flow and processor in question.

Any ideas? Any help is much appreciated.

Created 02-22-2018 05:42 PM

We contacted Hortonworks directly and talked with the NiFi developers, who confirmed this is a bug in the ValidateCSV processor. There is no timeline for a fix.

Created on 01-17-2018 03:29 PM - edited 08-18-2019 12:26 AM

I've continued researching this issue and have been able to replicate it with a simplified flow: just a GenerateFlowFile processor running at 30-second intervals feeding a ValidateCSV processor.

The test data in GenerateFlowFile:

1000000|Firstname1000000|Lastname1000000|2452954106|3387173669|9625048215|test2@bkfs.com|FstName2-1000000|LstName2-1000000|6483154023|2898543994|8006477595|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000001|Firstname1000001|Lastname1000001|5227791843|9503374934|4617490963|test2@bkfs.com|FstName2-1000001|LstName2-1000001|2351899318|2250724929|9755971010|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000002|Firstname1000002|Lastname1000002|2726022427|8455734201|5061581356|test2@bkfs.com|FstName2-1000002|LstName2-1000002|4889605315|9765983450|5530103619|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000003|Firstname1000003|Lastname1000003|6600426098|5527562109|5457567035|test2@bkfs.com|FstName2-1000003|LstName2-1000003|2219888741|2230922868|6707470404|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000004|Firstname1000004|Lastname1000004|6786938248|6411937419|5123777198|test2@bkfs.com|FstName2-1000004|LstName2-1000004|2831114709|4010383704|6841344395|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000005|Firstname1000005|Lastname1000005|2906459644|4113670124|9732997854|test2@bkfs.com|FstName2-1000005|LstName2-1000005|6029871693|2601194119|5229252727|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000006|Firstname1000006|Lastname1000006|6206996436|8377906697|6096568098|test2@bkfs.com|FstName2-1000006|LstName2-1000006|3776671873|7219153330|6267564904|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000007|Firstname1000007|Lastname1000007|6592010372|4396981880|3689391047|test2@bkfs.com|FstName2-1000007|LstName2-1000007|5482870564|9124280167|7764088854|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000008|Firstname1000008|Lastname1000008|2439048871|3800831154|6990841221|test2@bkfs.com|FstName2-1000008|LstName2-1000008|4823913636|8864891978|4810747004|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
1000009|Firstname1000009|Lastname1000009|6352662441|6724206719|3289556363|test2@bkfs.com|FstName2-1000009|LstName2-1000009|6531169670|7010161669|8092909403|blah@test.com|123|Fake Street||Jacksonville|Fl|32250|1234
etc..

The validation in ValidateCSV:

Unique(), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*")), Optional(StrRegEx(".*"))

The exception logged once the same content has run through a second time:

2018-01-17 10:15:32,988 DEBUG [Timer-Driven Process Thread-9] o.a.nifi.processors.standard.ValidateCsv ValidateCsv[id=327d1aac-1000-1160-9184-47b5669853c4] Failed to validate StandardFlowFileRecord[uuid=8a93d2e3-d304-44d6-a1f3-1cd38d16560e,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1516201957046-1, container=default, section=1], offset=101225, length=98225],offset=0,name=4399965514884,size=98225] against schema due to org.supercsv.exception.SuperCsvConstraintViolationException: duplicate value '1000000' encountered processor=org.supercsv.cellprocessor.constraint.Unique context={lineNo=1, rowNo=1, columnNo=1, rowSource=[1000000, Firstname1000000, Lastname1000000, 2452954106, 3387173669, 9625048215, test2@bkfs.com, FstName2-1000000, LstName2-1000000, 6483154023, 2898543994, 8006477595, blah@test.com, 123, Fake Street, null, Jacksonville, Fl, 32250, 1234]}; routing to 'invalid': {}
org.supercsv.exception.SuperCsvConstraintViolationException: duplicate value '1000000' encountered
    at org.supercsv.cellprocessor.constraint.Unique.execute(Unique.java:76)
    at org.supercsv.util.Util.executeCellProcessors(Util.java:93)
    at org.supercsv.io.AbstractCsvReader.executeProcessors(AbstractCsvReader.java:203)
    at org.apache.nifi.processors.standard.ValidateCsv$NifiCsvListReader.read(ValidateCsv.java:617)
    at org.apache.nifi.processors.standard.ValidateCsv$1.process(ValidateCsv.java:479)
    at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:2136)
    at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:2106)
    at org.apache.nifi.processors.standard.ValidateCsv.onTrigger(ValidateCsv.java:446)
    at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27)
    at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1120)
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:147)
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47)
    at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)

Shouldn't ValidateCSV apply the Unique() constraint within a single flowfile only? Instead, it seems to keep the values from the first flowfile in memory and then validate the next flowfile against both the first and the second. Is this a bug?
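The symptoms fit a uniqueness check whose state outlives a single flowfile. Super CSV's Unique() keeps a set of the values it has already encountered; if the processor builds its cell processors once (for example, when it is scheduled) and reuses them for every flowfile, the second copy of the same file collides with the first on row 1, which matches the `duplicate value '1000000'` / `lineNo=1` in the log. The sketch below is a minimal simulation under that assumption only. It does not use Super CSV or NiFi: `UniqueCheck`, `validate` and `sampleFlowFile` are hypothetical stand-ins, and the rows are trimmed to three columns.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class UniqueStateDemo {

    // Hypothetical stand-in for a stateful uniqueness constraint such as
    // Super CSV's Unique(): it remembers every value it has ever accepted.
    static final class UniqueCheck {
        private final Set<String> seen = new HashSet<>();

        /** Returns true only if this instance has not seen the value before. */
        boolean accept(String value) {
            return seen.add(value);
        }
    }

    /** Validates one "flowfile" (pipe-delimited rows) on its first column. */
    static boolean validate(List<String> rows, UniqueCheck check) {
        for (String row : rows) {
            // limit -1 keeps empty fields such as the "||" in the test data
            String firstColumn = row.split("\\|", -1)[0];
            if (!check.accept(firstColumn)) {
                return false; // duplicate value: would be routed to 'invalid'
            }
        }
        return true;
    }

    /** Builds rows shaped like the test data (IDs 1000000..1000009). */
    static List<String> sampleFlowFile() {
        List<String> rows = new ArrayList<>();
        for (int id = 1000000; id <= 1000009; id++) {
            rows.add(id + "|Firstname" + id + "|Lastname" + id);
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String> flowFile = sampleFlowFile();

        // One instance shared by every flowfile, as if created at schedule time.
        UniqueCheck shared = new UniqueCheck();
        boolean sharedFirst = validate(flowFile, shared);
        boolean sharedSecond = validate(flowFile, shared);
        System.out.println("shared instance: " + sharedFirst + ", " + sharedSecond);
        // prints "shared instance: true, false"

        // A fresh instance for each flowfile.
        boolean freshFirst = validate(flowFile, new UniqueCheck());
        boolean freshSecond = validate(flowFile, new UniqueCheck());
        System.out.println("per-flowfile instances: " + freshFirst + ", " + freshSecond);
        // prints "per-flowfile instances: true, true"
    }
}
```

In this model, stopping and starting the flow corresponds to discarding the shared `UniqueCheck` and creating a new one, which would explain why the same file validates again after a restart.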