NiFi best practices for error handling

Labels: Apache NiFi

Created on 01-10-2017 09:13 PM - edited 08-19-2019 03:17 AM

Hi All,

I would appreciate it if you could point me to best practices for error handling in NiFi. Below is how I envision handling errors in my workflows. Would you suggest any enhancements or better ways to do it?

My error handling requirements are simple: log the errored FlowFiles to the file system and send an alert. Every processor in the dataflow that has a "failure" relationship would send its failed FlowFiles to a funnel, and from there they would go to an error handling process group that does the logging and alerting.

Thanks

Created on 01-10-2017 09:43 PM - edited 08-19-2019 03:17 AM

Not all errors are equal. I would avoid lumping all failure relationships into the same error handling strategy. Some errors are no surprise, can be expected to occur on occasion, and may be a one-time thing that resolves itself.

Let's use your example above.

The PutHDFS processor is likely to experience some failures over time due to events outside of NiFi's control. For example, let's say a file is in the middle of transferring to HDFS when the network connection is lost. NiFi would in turn route that FlowFile to failure. If that failure relationship had been routed back to PutHDFS, the transfer would likely have succeeded on the subsequent attempt. A better error handling strategy in this case may be to build a simple error handling flow that can be used when the type of failure might resolve itself.

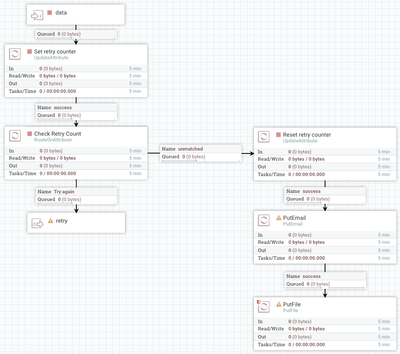

Here, failed FlowFiles enter at "data". They are then checked for a failure counter: if one does not exist, it is created and set to 1; if it exists, it is incremented by 1. The retry count check will continue to pass the file to "retry" until the same file has been seen x number of times. "Retry" would be routed back to the source processor of the failure. After x attempts, the counter is reset, an email is sent, and the file is placed in some local error directory for manual intervention.
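The counter logic described above can be sketched outside of NiFi as a small Python function. This is only a model of the routing decision, not NiFi code: the attribute name `failure.count`, the relationship names `retry` and `give_up`, and the limit of 3 are all assumptions made for illustration. In a real flow the same steps would be configured on processors rather than written as a script.

```python
from typing import Dict, Tuple

MAX_ATTEMPTS = 3                 # hypothetical value for "x"
COUNTER_ATTR = "failure.count"   # hypothetical attribute name


def handle_failure(attributes: Dict[str, str],
                   max_attempts: int = MAX_ATTEMPTS) -> Tuple[str, Dict[str, str]]:
    """Decide where one failed FlowFile goes next.

    Returns the chosen relationship and the updated attribute map.
    """
    updated = dict(attributes)  # do not mutate the caller's map

    # Create the counter at 1 if missing, otherwise increment it by 1.
    count = int(updated.get(COUNTER_ATTR, "0")) + 1

    if count < max_attempts:
        # Seen fewer than x times: send back to the source processor.
        updated[COUNTER_ATTR] = str(count)
        return "retry", updated

    # Seen x times: reset the counter and hand off for email + error directory.
    updated.pop(COUNTER_ATTR, None)
    return "give_up", updated


if __name__ == "__main__":
    attrs: Dict[str, str] = {"filename": "example.dat"}
    for _ in range(MAX_ATTEMPTS):
        relationship, attrs = handle_failure(attrs)
        print(relationship, attrs)
```

With the assumed limit of 3, the first two failures of a file are sent to "retry" and the third is handed off for notification and manual intervention.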

The other scenario is where the type of failure is not likely to ever correct itself. Your MergeContent processor is a good example here. If the processor failed to merge some FlowFiles, the failure is extremely likely to happen again, so there is little benefit in looping this failure relationship back to the processor like we did above. In this case you may want to route this processor's failure to a PutEmail processor to notify the end user of the failure and where it occurred in the dataflow. The success relationship of the PutEmail processor may just feed another processor, such as UpdateAttribute, that is in a stopped/disabled state. This will hold the data in the dataflow until manual intervention can be taken to identify the issue and either reroute the data back into the flow once corrected or discard the data. If there is concern over available space in your NiFi content repository, I would use some processor to write the data out to a different error file location using PutFile, PutHDFS, PutSFTP, etc.

Hope this helps,

Matt

Created 01-11-2017 02:16 PM

Awesome explanation @Matt, thanks. Earlier I saw some examples of people retrying failed FlowFiles 3 times, etc., but I was not sure where that would make sense. I see now where it would be appropriate to retry FlowFiles. Besides network-related errors, at what other processors or in what other scenarios would retrying failed FlowFiles be needed?

Since we have 2 types of scenarios, one where you want to retry FlowFiles and the other where you want to log, etc., I was thinking of having 2 process groups that accommodate these 2 scenarios. If I have a lot of processors where there is potential for failure, I would collect the 2 kinds of failed FlowFiles (one to retry and one to log) and send them to the matching process group. Would that approach work?

Thanks in advance.

Created 01-11-2017 02:29 PM

A good rule of thumb is to attempt a retry any time you are dealing with an external system where failures can result from things that are out of NiFi's control (network outages, destination systems with no disk space, a destination that already has files of the same name, etc.). This is not to say that there may not be cases where an internal situation would warrant a retry as well. Take MergeContent as an example. The processor bins incoming FlowFiles until the criteria for a merge are met. If at the time of the merge there is not enough disk space left in the content repository for the new merged FlowFile, it will get routed to failure. Other processing of FlowFiles may free up space, in which case a retry might be successful. This is a rarer case and less likely than the external system examples I first provided.

I think your approach is valid. Keep in mind that, as Joe mentioned on this thread, the NiFi community is working toward better error handling in the future.
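As a rough illustration of the rule of thumb and the two-process-group idea, the choice of error handling group could be modeled like this in Python. The group names and the set of "external system" processors are assumptions for the sketch (the processor names are the ones mentioned in this thread); a real flow would make this choice through how the failure relationships are wired, not through a script.

```python
# Hypothetical names for the two error handling process groups.
RETRY_GROUP = "retry-then-alert"
ALERT_GROUP = "alert-and-hold"

# Processors from this thread that talk to systems outside NiFi's control.
# The set is illustrative, not exhaustive.
EXTERNAL_SYSTEM_PROCESSORS = {"PutHDFS", "PutSFTP", "InvokeHTTP"}


def choose_error_group(processor_type: str) -> str:
    """Pick the error handling group for a failure from the given processor."""
    if processor_type in EXTERNAL_SYSTEM_PROCESSORS:
        # External failures often resolve themselves, so retry first.
        return RETRY_GROUP
    # Internal failures (e.g. MergeContent) rarely fix themselves on retry,
    # so notify someone and hold the data instead.
    return ALERT_GROUP


if __name__ == "__main__":
    for name in ("PutHDFS", "MergeContent"):
        print(name, "->", choose_error_group(name))
```

The rare internal cases where a retry helps, such as MergeContent failing because the content repository was temporarily full, are not covered by this simple lookup and would need their own rule.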

Created 01-11-2017 02:39 PM

Terrific, thanks a lot for your time.

Created 01-11-2017 02:47 AM

I think Matt provided a nice and comprehensive response. I'll add that while we do offer a lot of flexibility and fine-grained control for handling any case (whether for you that is a failure, success, connection issue, etc.), we can do better. One of the plans we've discussed is to provide for reference-able process groups. This would allow you to effectively call a portion of the flow like a function with a simple input/function/output model. You can read more about this here https://cwiki.apache.org/confluence/display/NIFI/Reference-able+Process+Groups.

We also have data provenance, in which we can capture details of why we routed any given FlowFile to any given relationship. This is not used as often as it could be. Further, we need to surface this information for use within the flow so that error handling steps could capture things like 'last processor' and 'last transfer description'.

In short, there are exciting things on the horizon to make all sorts of flow management cases easier and more intuitive. The above items I mention will be an important part of that.

thanks

Created 01-11-2017 02:42 PM

Thank you @jwitt for the heads up on what's in the pipeline.

Created 03-08-2018 08:49 AM

How far along is the work on surfacing data provenance data in error handling? I have just discussed this option internally: rolling our own error handling process group that uses the data provenance REST API to look up relevant data to convey in error logs and messages. But if this is on the near horizon as a built-in option, that sounds great.

Created on 10-12-2017 12:58 PM - edited 08-19-2019 03:17 AM

Hello Everyone,

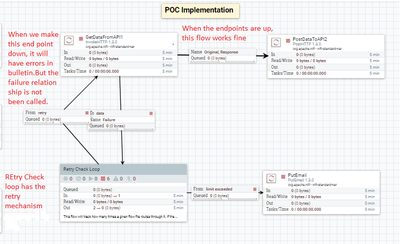

I am following the failure mechanism suggested above by @Matt in my NiFi project template, but the failure flow is not triggering the retry mechanism from the InvokeHTTP processor. Can anyone let me know what I am missing? Or, if this is not the right way, can you please suggest an alternative? I have attached the template.

Thanks

Created 10-12-2017 02:29 PM

I highly recommend starting a new HCC question for your particular use case so it gets better visibility. You can always reference this HCC thread in your new question.

The bulletin and any stack trace from nifi-app.log when you take your endpoint down would also be very helpful. Perhaps the processor is doing a session rollback instead, which leaves the file on the inbound connection rather than routing it to failure.

Thank you,

Matt