Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Nifi Mysql to hive data format not match

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Nifi Mysql to hive data format not match

- Labels:

-

Apache Hive

-

Apache NiFi

Created 03-27-2018 06:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I use Nifi(QueryDatabaseTable=>PutHiveStreaming) processor to load table from mysql to Hive.But the data format is not match.

eg:

mysql column:`id` bigint(20)

Hive column: id int

I will get the erro log like below:

2018-03-27 11:59:22,607 WARN [put-hive-streaming-0] org.apache.hive.hcatalog.data.JsonSerDe Error [org.codehaus.jackson.

JsonParseException: Current token (VALUE_STRING) not numeric, can not use numeric value accessors

at [Source: java.io.ByteArrayInputStream@1afd4f32; line: 1, column: 9]] parsing json text [{"id": "3520", ...].

...

Caused by: org.codehaus.jackson.JsonParseException: Current token (VALUE_STRING) not numeric, can not use numeric value

accessors

at [Source: java.io.ByteArrayInputStream@4e94db44; line: 1, column: 9]

As you see,The QueryDatabaseTable read the mysql int column as String,like [{"id": "3520", ...].I can't believe this.So I guess, is there something wrong with my operation?

Need Your Help~

ps, if I change the Hive column: id from "int" to "String",I can load the table into Hive smoothly~

- Nifi Version : nifi-1.5.0.3.1.1.0-35

- JDBC Version : mysql-connector-java-5.1.7.jar/mysql-connector-java-5.1.28.jar(I tried different JDBC version)

Created 03-27-2018 03:03 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The issue is with your data type at the source side.

Let's talk about the biggest "integral" data types in Avro and Hive. As per Hive documentation, BIGINT is defined as

BIGINT(8-byte signed integer, from-9,223,372,036,854,775,808to9,223,372,036,854,775,807)

As per Avro documentation, the Long datatype is defined as follows.

- long: 64-bit signed integer

These are signed numbers and the max range is 9,223,372,036,854,775,807 [19 digits]. You are trying to port essentially a number from the source database which is way beyond Hive's/Avro data type range [number in MySQL looks like BIGINT(20) ]

Why are you not able to access the column value using INT/BIGINT data type?

The data is stored on HDFS in Avro format with your column specified as "STRING". Your data type does not match the data type of the data and hence you will get a parse exception. A switch from INT to STRING works for the same very reason.

Solution - You should either tone down the size of the column at the source side, even BIGINT(19) is not recommended since the values may go beyond the range of Hive BIGINT/AVRO Long. Or else, have your table on the destination side(Hive) with the column as STRING.

PS - Avro DOES NOT have Date/Timestamp datatypes, so such columns are also converted to a string when imported.

Hope that helps!

Created 03-27-2018 10:51 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is nothing wrong with your operation, the table used in PutHivestreaming processor needs to have all the data types are strings.

if you want to have id type as Bigint then after Querydatabasetable processor store the Avro file into HDFS location using PutHDFS processor, then create avro table on top of HDFS directory.

(or)

Use ConvertAvroToORC processor after QueryDatabasetable processor then store the Orc to HDFS location using PutHDFS processor,then create table on top of HDFS directory.

Created 03-27-2018 12:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

PutHiveStreaming will accept all valid Avro files (the fields don't have to be strings), the problem here is that a bigint(20) will not fit in a Long object (the max number of digits is 19), so QueryDatabaseTable converts it to a String, and thus it won't go into your Hive table as that expects an int. If your values are not 20 digits long, I recommend you alter your table to make the ID column smaller, then things should work better. If you can't alter the table, but the values will never be 20 digits long, you could use ConvertAvroSchema to try and convert the ID values from String to Long/Int.

Created 03-27-2018 08:43 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank a ton Matt for the valuable info, Could you please convert your comment to answer, As my answer is not pointing to the root cause.

Created on 03-28-2018 03:20 AM - edited 08-17-2019 11:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply.

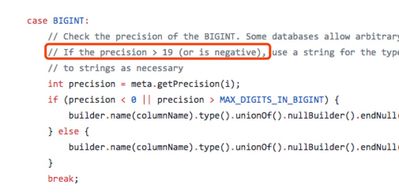

As your answer,I noticed the nifi BigInt problem. It`s true that "if the percision>19, it will be changed into string".

the process happened in the "QueryDatabaseTable" processor.

I read the source code, and find the function which they write to dell with the jdbc data.

#QueryDatabaseTable https://github.com/apache/nifi/blob/master/nifi-nar-bundles/nifi-standard-bundle/nifi-standard-proce... #QueryDatabaseTable import this class for data process https://github.com/apache/nifi/blob/master/nifi-nar-bundles/nifi-standard-bundle/nifi-standard-proce...

jdbcCommon.java

Tanks a lot for your help~

But I don't know how to solve the problem gently, I might just use the String format in Hive.

There also some place should notice, they deal the "TinyInt" and "Boolean" with the same way.The value "0" or "1" will be change into "false"/"true".

Different databases have it's own design, we can't get a good way to deal with the Data transfer?

Created 03-28-2018 03:36 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, Shu ~

Your answer is very timely. And I decided to use String Format in Hive to avoid this problem~

Created 03-27-2018 03:03 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The issue is with your data type at the source side.

Let's talk about the biggest "integral" data types in Avro and Hive. As per Hive documentation, BIGINT is defined as

BIGINT(8-byte signed integer, from-9,223,372,036,854,775,808to9,223,372,036,854,775,807)

As per Avro documentation, the Long datatype is defined as follows.

- long: 64-bit signed integer

These are signed numbers and the max range is 9,223,372,036,854,775,807 [19 digits]. You are trying to port essentially a number from the source database which is way beyond Hive's/Avro data type range [number in MySQL looks like BIGINT(20) ]

Why are you not able to access the column value using INT/BIGINT data type?

The data is stored on HDFS in Avro format with your column specified as "STRING". Your data type does not match the data type of the data and hence you will get a parse exception. A switch from INT to STRING works for the same very reason.

Solution - You should either tone down the size of the column at the source side, even BIGINT(19) is not recommended since the values may go beyond the range of Hive BIGINT/AVRO Long. Or else, have your table on the destination side(Hive) with the column as STRING.

PS - Avro DOES NOT have Date/Timestamp datatypes, so such columns are also converted to a string when imported.

Hope that helps!

Created 03-28-2018 03:31 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for your reply ~

Your answer helps me a lot.

I may use the "String" format to store my table in Hive.

But I still have a worry, the "String" format may slow down the the execution speed of the data program in the future?

Created 03-28-2018 05:02 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In later versions of NiFi that use newer versions of Avro, Date and Timestamps are supported, but as logical types (backed by long values not strings). Previous versions of NiFi converted them to strings as the version of Avro at the time (1.7.7) didn't support logical types for Date/Timestamp

Created 04-01-2018 04:18 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Did the answer help in the resolution of your query? Please close the thread by marking the answer as Accepted!