NiFi TailFile Processor does not detect vast insertion into a file

- Labels: Apache NiFi

Created on 07-11-2018 01:45 PM - edited 08-18-2019 01:53 AM

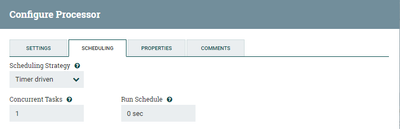

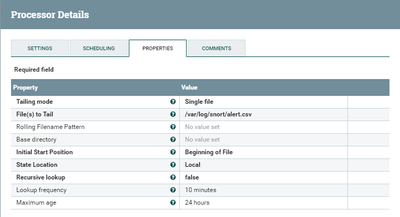

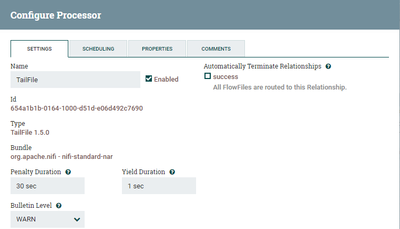

We have set up NiFi site-to-site to transfer Snort logs (alert.csv) to a remote NiFi server, using Apache Metron (Hortonworks Cybersecurity). Once Snort is running, it produces a large volume of logs in CSV format, for instance more than 500 records in just 5 seconds. The problem is that the NiFi TailFile processor does not detect and separate all 500 records individually; it detects only around 20 changes (which together contain all 500 records).

The current configuration is attached to this message. How can we configure the TailFile processor to treat every single line as a new packet (event)? Any help would be appreciated.

Created 07-11-2018 02:29 PM

Just to make sure I understand correctly...

The TailFile processor is producing only 20 output FlowFiles; however, all 500 records are included within those 20 FlowFiles. Correct?

With a Run Schedule of 0 secs, the processor is scheduled to execute and then scheduled to execute again immediately after the previous execution completes. During each execution, it consumes all new lines seen since the last execution. There is no configuration option that will force this processor to output a separate FlowFile for each line read from the file being tailed.

You could, however, feed the output FlowFiles to a SplitText processor to split each FlowFile into a separate FlowFile per line.
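The behaviour described above can be illustrated with a small Python simulation. This is only a sketch and not NiFi code: `read_new_data`, `split_lines`, and `demo` are hypothetical helpers, and the file name and record contents are made up for the example. Each poll picks up everything appended since the previous poll as one batch (analogous to one FlowFile per TailFile execution), and a separate step then breaks every batch into single lines (analogous to SplitText with Line Split Count set to 1).

```python
import os
import tempfile


def read_new_data(path, offset):
    """Return (text, new_offset) for everything appended since `offset`."""
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    return data.decode("utf-8"), offset + len(data)


def split_lines(batch):
    """Split one batch into one item per line, keeping the line endings."""
    return batch.splitlines(keepends=True)


def demo():
    """Write three bursts of five lines and poll once after each burst."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "alert.csv")  # hypothetical file name
        open(path, "w").close()
        offset = 0
        batches = []
        for burst in range(3):
            # Several lines arrive between two polls...
            with open(path, "a", encoding="utf-8", newline="") as f:
                for i in range(5):
                    f.write(f"record-{burst}-{i}\n")
            # ...so a single poll returns all of them as one batch.
            batch, offset = read_new_data(path, offset)
            batches.append(batch)
        # Downstream step: one item per line, regardless of batch size.
        lines = [line for b in batches for line in split_lines(b)]
        return len(batches), len(lines)


if __name__ == "__main__":
    n_batches, n_lines = demo()
    print(n_batches, "batches ->", n_lines, "lines")
```

With three bursts of five lines each, the sketch yields 3 batches and 15 individual lines: nothing is lost in the batches, they are simply coarser than one line each.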

Thank you,

Matt

When an "Answer" addresses/solves your question, please select "Accept" beneath that answer. This encourages user participation in this forum.

Created 07-25-2018 01:20 PM

*** Forum tip: Please try to avoid responding to an answer by starting a new answer. Instead, use "add comment" to respond to an existing answer. There is no guaranteed order to different answers, which can make following a response thread difficult, especially when multiple people are trying to assist you.

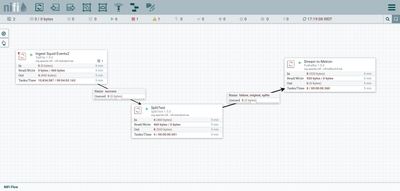

Based on what you are showing me, your flow is working as designed.

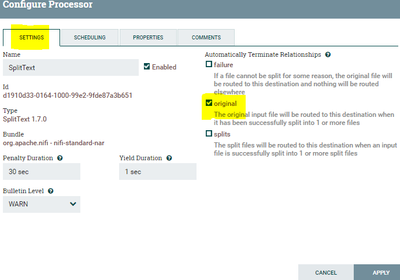

Since you have added all three outgoing relationships to the outgoing connection of the SplitText processor, you end up with duplication.

The "original" relationship is basically a passthrough for the FlowFiles coming into SplitText. This relationship is often auto-terminated unless you need to keep the original un-split FlowFiles for something else in your flow. In that case, the "original" relationship would be routed to its own outbound connection and not to the same connection as "splits".
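A rough Python sketch of why this produces duplicates, assuming a simplified model in which a FlowFile is just a string. `split_text` and `route` are hypothetical helpers written for this illustration and are not part of NiFi; the sample content is invented.

```python
def split_text(flowfile):
    """Simplified model of a line splitter: return content per relationship."""
    return {
        "original": [flowfile],                         # untouched passthrough
        "splits": flowfile.splitlines(keepends=True),   # one item per line
        "failure": [],                                  # nothing failed here
    }


def route(relationships, selected):
    """Put everything from the selected relationships into one connection."""
    queue = []
    for name in selected:
        queue.extend(relationships[name])
    return queue


incoming = "a,1\nb,2\nc,3\n"  # hypothetical three-line FlowFile
rels = split_text(incoming)

# All three relationships in one connection: every line arrives twice,
# once inside the original and once as its own split.
all_three = route(rels, ["failure", "original", "splits"])

# Only "splits" in the connection: each line arrives exactly once.
splits_only = route(rels, ["splits"])

print(len(all_three), len(splits_only))
```

In this model the shared connection receives four items for a three-line input (the original plus three splits), while the "splits"-only connection receives three.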

The fact that SplitText is not really splitting your source FlowFiles (4 in and 4 out) tells me that the 4 source FlowFiles do not contain any line returns on which to split the text. So the question is: what does the content of one of these ~115 byte FlowFiles look like?
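One way to answer that question is to save the content of a FlowFile to disk and count its line terminators. The following Python sketch assumes the content is available as raw bytes; `describe` is a hypothetical helper and the sample payloads are invented, not taken from the flow in question.

```python
def describe(payload: bytes) -> dict:
    """Count line terminators and the pieces a line-based split would give."""
    crlf = payload.count(b"\r\n")
    return {
        "bytes": len(payload),
        "crlf": crlf,
        "lf_only": payload.count(b"\n") - crlf,
        "cr_only": payload.count(b"\r") - crlf,
        "pieces": len(payload.splitlines()),
    }


# Hypothetical payloads for illustration only.
no_newline = b"field1,field2,field3"
two_lines = b"field1,field2\nfield3,field4\n"

print(describe(no_newline))
print(describe(two_lines))
```

A payload without any line terminator is reported as a single piece, which matches the "4 in and 4 out" observation: there is nothing to split on.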

I also do not recommend routing the "failure" relationship along with "success" or "original" in the same connection. Should a failure occur, how would you easily separate what failed from what was successful?

Thank you,

Matt

Created on 07-25-2018 01:21 PM - edited 08-18-2019 01:52 AM

Connect only the splits relationship from the SplitText processor to the PublishKafka processor.

Auto-terminate the original relationship: go to Configure --> Settings tab --> check the original box.

As for the failure relationship, connect it to a PutEmail processor to get notified if something goes wrong.

Flow:-

Created on 07-25-2018 01:00 PM - edited 08-18-2019 01:52 AM

Dear @Matt Clarke

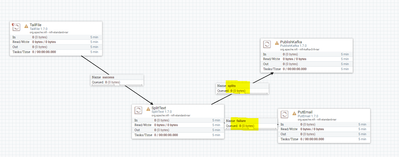

Thank you for your great help. Following your advice, I added a SplitText processor after the TailFile processor, which ingests Squid logs (based on Apache Metron), with the following properties:

Line Split Count: 1

Maximum Fragment Size: (No value set)

Header Line Count: 0

Header Line Marker Characters: (No value set)

Remove Trailing Newlines: false

I selected all of the relationship types (failure / original / splits) in the "Connection Details" between the SplitText and PutKafka processors.

The problem is that SplitText duplicates the input data, as the attachment clearly shows. Actually, there is an extra newline character at the end of each received FlowFile (if I increase the insertion rate, the extra newline is added only after a batch of lines in a FlowFile).

Any advice?

Created on 08-04-2018 12:43 PM - edited 08-18-2019 01:52 AM

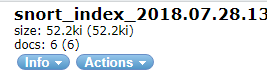

I transferred alert.csv (Snort alerts) to Metron via NiFi site-to-site, but the count reported by the Storm topology does not match the number of documents in snort_index in Elasticsearch. For example:

alert.csv contains 140 rows and the topology reports 140, but I see only 6 docs in Elasticsearch!

How can I solve this?

Thanks for answering my question.

Created 08-06-2018 03:39 PM

I recommend starting a new question for this. This thread was originally about TailFile and splitting files. It is best to keep one question per HCC post.

Thank you,

Matt

Created 08-07-2018 06:16 AM

Thanks for your input.

As my question was related to the previous ones, I put it there, but I think you're right. I started a new one.