Thanks for the response.

A small correction: the custom code publishes the message to a Kafka topic, and from there I pick up the JSON message and pass it to an EvaluateJsonPath processor. EvaluateJsonPath now extracts two values: a source path and a destination path.

You suggested using FetchS3Object to get the file from S3. How should I pass the source path to the FetchS3Object processor, and then how should I pass the destination path to the PutFile processor?

Could you explain briefly?

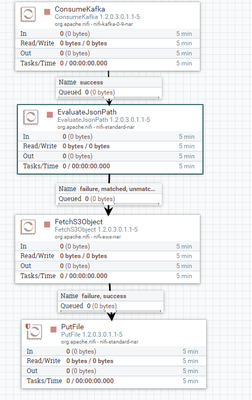

My current flow is shown in the attached screenshot.