NiFi: how to use a File Filter for fetching files from Hadoop?

- Labels: Apache Hadoop, Apache NiFi

Created 10-30-2017 08:06 AM


I want to fetch files from a Hadoop directory based on their filename. Logically it looks like ${filename}.* (I have several files with similar names, such as 2011-01-01.1, 2011-01-01.2, etc.). I tried ListHDFS + FetchHDFS, but they can't match my logic.

- Can you give me a better idea of how I can do this inside the NiFi environment?

- Is it possible to do this task with Groovy code inside an ExecuteScript processor?

- How can I connect to an HDFS directory from Groovy code?

- After getting these files, I should put them in a flowfile list, and I can't transfer the flowfiles until the list size matches the value of the count attribute (placed in the flowfile).

Created on 10-30-2017 02:33 PM - edited 08-18-2019 02:54 AM


Yes, you can do this in several ways using NiFi processors.

1. By using the GetHDFS processor (pure NiFi processors).

2. By using the ListHDFS processor (pure NiFi processors).

3. By running a script, adding the attributes to the flowfile, and using them in the FetchHDFS processor.

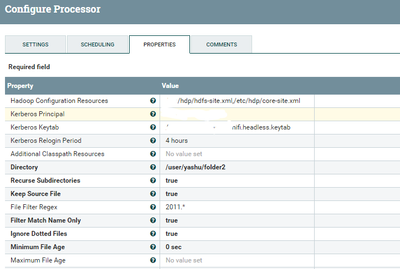

Method 1:-

By using GetHDFS processor:-

For testing, I have these 4 files in the folder2 directory, and I want to fetch only the files whose names start with 2011.

    hadoop fs -ls /user/yashu/folder2/
    Found 4 items
    -rw-r--r-- 3 hdfs 27 2017-10-30 09:16 /user/yashu/folder2/2011-01-01.1
    -rw-r--r-- 3 hdfs 359 2017-10-20 08:47 /user/yashu/folder2/hbase.txt
    -rw-r--r-- 3 hdfs 24 2017-10-09 21:45 /user/yashu/folder2/sam.txt
    -rw-r--r-- 3 hdfs 12 2017-10-09 21:45 /user/yashu/folder2/sam1.txt

Use the GetHDFS processor and change these properties:

1. Keep Source File: the default is false. Set it to true if you want to keep the source file in the directory; leave it as false if you want the file deleted after fetching.

2. Directory: give the path of your directory.

3. File Filter Regex: give the regex that matches your required filenames.

Ex:- I need only files starting with 2011, so I have given the regex as

2011.*

This processor now fetches only the /user/yashu/folder2/2011-01-01.1 file from the directory.
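To sanity-check a File Filter Regex before putting it into the processor, a small Python sketch like the one below may help. It assumes the filter has to match the whole filename (the way Java's `Matcher.matches()` behaves), which `re.fullmatch` approximates here; the filenames are the four from the listing above.

```python
import re

# Filenames from the example folder2 directory.
filenames = ["2011-01-01.1", "hbase.txt", "sam.txt", "sam1.txt"]

# Same pattern as the File Filter Regex used in the post.
file_filter = re.compile(r"2011.*")

# Assumption: the filter must match the entire filename, so fullmatch is used.
selected = [name for name in filenames if file_filter.fullmatch(name)]
print(selected)
```

Python and Java regex syntax differ in some corners, so this is only a quick check for simple patterns such as this one.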

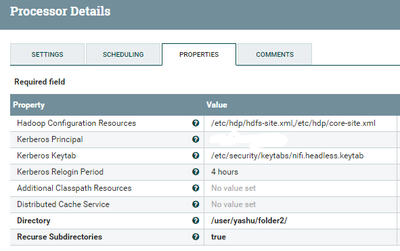

Method 2:-

By using ListHDFS processor:-

Configure your directory path in the ListHDFS processor, and the processor will list all the files in that directory. We cannot filter for the required files in the ListHDFS processor itself, but every flowfile it produces has a filename attribute associated with it.

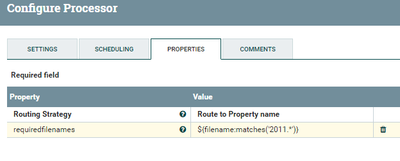

We can make use of the filename attribute with a RouteOnAttribute processor.

RouteOnAttribute:-

Add a new property in RouteOnAttribute; the processor then works as a file filter on the flowfiles.

Property:-

requiredfilenames

${filename:matches('2011.*')}

This property checks only the filename and routes a flowfile if its filename satisfies the expression above.

All the other filenames (sam.txt, sam1.txt, etc.) are ignored; only the 2011 file is routed to the requiredfilenames relationship.
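The routing decision can be mimicked in a short Python sketch. It relies on the Expression Language `matches` function requiring the whole subject to match, while `find` accepts a partial match; `re.fullmatch` and `re.search` stand in for those two here. The `route` helper and the names `2011-01-01.2` and `backup-2011.txt` are hypothetical, added only to show the difference.

```python
import re


def route(filename: str, pattern: str) -> str:
    """Return the relationship a flowfile with this filename would go to."""
    # fullmatch approximates the whole-string behaviour of matches().
    if re.fullmatch(pattern, filename):
        return "requiredfilenames"
    return "unmatched"


# The first and third names appear in the post; the others are hypothetical.
names = ["2011-01-01.1", "2011-01-01.2", "sam.txt", "backup-2011.txt"]

routes = {name: route(name, r"2011.*") for name in names}

# A partial match (closer to the find() function) would also accept
# names that merely contain 2011 somewhere.
partial = [name for name in names if re.search(r"2011", name)]

print(routes)
print(partial)
```

With the whole-string match, `backup-2011.txt` is not routed to requiredfilenames, because the name does not start with 2011.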

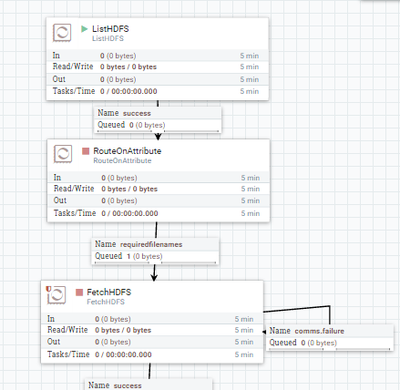

Flow:-

Method 3:-

Run Script:-

You can run a script and then use other processors (ExtractText, etc.) to extract the filename and path from the result, and use those attributes in the FetchHDFS processor.
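As a rough illustration of the extraction step, the Python sketch below splits the `hadoop fs -ls` output shown earlier into a path and a filename per file. The `parse_listing` helper is hypothetical, and the sketch assumes that the full path is the last whitespace-separated token on each line, so paths containing spaces would need different handling.

```python
import posixpath

# Output of "hadoop fs -ls /user/yashu/folder2/" as shown in the post.
listing = """Found 4 items
-rw-r--r-- 3 hdfs 27 2017-10-30 09:16 /user/yashu/folder2/2011-01-01.1
-rw-r--r-- 3 hdfs 359 2017-10-20 08:47 /user/yashu/folder2/hbase.txt
-rw-r--r-- 3 hdfs 24 2017-10-09 21:45 /user/yashu/folder2/sam.txt
-rw-r--r-- 3 hdfs 12 2017-10-09 21:45 /user/yashu/folder2/sam1.txt
"""


def parse_listing(text):
    """Return one {"path", "filename"} dict per listed file."""
    entries = []
    for line in text.splitlines():
        parts = line.split()
        # Skip blank lines and the "Found N items" header:
        # only file lines end with an absolute path.
        if not parts or not parts[-1].startswith("/"):
            continue
        full_path = parts[-1]
        entries.append({
            "path": posixpath.dirname(full_path),
            "filename": posixpath.basename(full_path),
        })
    return entries


entries = parse_listing(listing)
for entry in entries:
    print(entry["path"], entry["filename"])
```

The two values correspond to the path and filename attributes that FetchHDFS can combine into the file to fetch, for example as `${path}/${filename}`.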


Created 10-30-2017 04:19 PM


First of all, thank you for your answer 😄. In this case, how can I find the number of flowfiles whose filenames match "2011.*"? I need to find this value and check whether it is equal to my count attribute (the main problem is that I can't get the exact number of flowfiles that match the regex "2011.*").

Created 03-28-2019 04:46 AM


How can I use this to route multiple files based on the presence of one file name?

Example:- the input path contains 3 files plus one .done.csv file

emp.csv

dept.csv

account.csv

date.done.csv

Only if the input path contains the .done.csv file should my files be routed into the NiFi flow.

Otherwise they should not be routed.
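The rule described here can be written down as a small Python sketch. It only illustrates the logic and is not a NiFi configuration; the `files_to_route` helper is hypothetical, and leaving the marker file itself out of the result is an assumption.

```python
def files_to_route(filenames, marker_suffix=".done.csv"):
    """Return the files to route, or nothing if no marker file is present."""
    has_marker = any(name.endswith(marker_suffix) for name in filenames)
    if not has_marker:
        # No .done.csv file in the directory yet: hold everything back.
        return []
    # Assumption: the marker file itself is not routed.
    return [name for name in filenames if not name.endswith(marker_suffix)]


with_marker = files_to_route(
    ["emp.csv", "dept.csv", "account.csv", "date.done.csv"]
)
without_marker = files_to_route(["emp.csv", "dept.csv", "account.csv"])

print(with_marker)
print(without_marker)
```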
