Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Nifi - putsql for phoenix upsert very slow - i...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Nifi - putsql for phoenix upsert very slow - improve performace ??

- Labels:

-

Apache NiFi

-

Apache Phoenix

Created 10-13-2016 04:20 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Nifi - putsql for phoenix upsert very slow , getting records 1000/sec , phoenix putsql is unabel toinsert it seems .

Please let us know how to improve phoenix insertion fast .

Created 10-13-2016 05:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"phoenix putsql is unabel toinsert it seems"

Are you referring to the INSERT SQL command? Phoenix does not expose INSERT, it exposes UPSERT which matches the actual semantics of how data is written.

"Nifi - putsql for phoenix upsert very slow , getting records 1000/sec"

The PutSQL processor seems to be written in a way that would allow for optimal performance with Phoenix. Care to share your PutSQL processor configuration? Have you tried to write a simple JDBC application to verify the "theoretical" performance of your system?

One thing I can see is that if PutSQL is getting triggered very frequently, you will be making a large number of RPCs and not batching updates into HBase. How many FlowFiles does PutSQL process per invocation?

Created 10-13-2016 05:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

"phoenix putsql is unabel toinsert it seems"

Are you referring to the INSERT SQL command? Phoenix does not expose INSERT, it exposes UPSERT which matches the actual semantics of how data is written.

"Nifi - putsql for phoenix upsert very slow , getting records 1000/sec"

The PutSQL processor seems to be written in a way that would allow for optimal performance with Phoenix. Care to share your PutSQL processor configuration? Have you tried to write a simple JDBC application to verify the "theoretical" performance of your system?

One thing I can see is that if PutSQL is getting triggered very frequently, you will be making a large number of RPCs and not batching updates into HBase. How many FlowFiles does PutSQL process per invocation?

Created on 10-14-2016 01:29 AM - edited 08-19-2019 02:56 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. we are using UPSERT commad only in phoenix .

2. even i am unable insert 100/sec

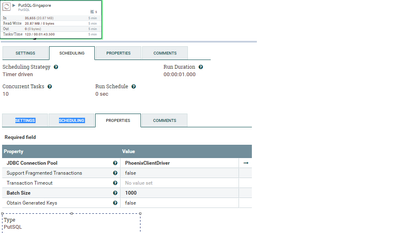

3 . PFA of PutSQL configuration

I am getting 10,000 records per second . but i am able to insert only 20-50 records per second . queue is not getting piled up , looks data loss is happening . please let me know how to improve performace .atleadt 1000/sec as said.

and how to debug/test whether the data loss is happening or not . thanks in advance .

Created 10-14-2016 04:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With sufficient backpressure, I would imagine that NiFi will drop data eventually.

Based on your screenshot, you are writing no data via this processor (Out is 0bytes). I would recommend you verify that Phoenix and HBase are healthy before trying to introduce NiFi into the picture.

Created 10-17-2016 12:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The "Read/Write" stats on the processor have nothing to do with writing to your SQL end-point. This particular stat is all about reads from and writes to the NIFi content Repository. This helps identify where in your flow you may have disk high disk I/O in the form of either reads or more expensive writes.

From the screenshot above, I see that this processor brought in off inbound connections 35,655 FlowFiles in the past 5 minutes. It read 20.87 MB of content from the content repository in that same timeframe. The processor then output 0 FlowFiles to any outbound connection (This indicates all files where either routed to a an auto-terminated relationship). Assuming only the "success" relationship was auto-terminated, all data was sent successfully. If the "failure" relationship (which should not be auto-terminated here) is routed to another processor, the 0 "out" indicates that in the past 5 minutes 0 files failed. The Tasks shows a cumulative total CPU usage reported over the past 5 minutes. A high "Time" value indicates a cpu intensive processor.

Thanks,

Matt