Nifi will be stuck when process large file

Labels: Apache NiFi

Created 10-19-2021 09:49 AM


Description:

A NiFi node gets stuck, and none of its processors handle FlowFiles, while one processor (such as PutFile, ExecuteStreamCommand, or ConvertRecord) is processing a large FlowFile. The node becomes very slow with heavy CPU usage, sometimes over 700%, and its heartbeat may then be lost so that the node shows as Disconnected. The heavy CPU usage also causes other processors to get stuck and not consume any FlowFiles until the large FlowFile has been processed. As far as I know, PutFile and ExecuteStreamCommand shouldn't use much CPU; it is just executing a cp command in Linux. We have tried increasing the total thread count, but it had no effect. This issue started after we upgraded NiFi from 1.8 to 1.12. We can't understand why these processors use so much CPU that the NiFi node gets disconnected from the cluster. Can anyone help me? Thanks a lot.

Nifi Version: 1.12

Machine capacity:

cpu 8 core,

memory 64G,

Xmx 20G,

Nifi node amount 5

Created 10-19-2021 12:04 PM


@DayDream

The ExecuteStreamCommand processor executes a system level command and not something native within NiFi, so its impact on CPU is completely dependent on what the command being called is doing.

You mention that the ExecuteStreamCommand is just executing a cp command and that the issue happens when you are dealing with a large file. The first thing I would look into is the disk I/O of the source and destination directories that the file is being copied from and to.

You also mention that PutFile is writing out a large FlowFile to disk. This means that the processor is reading FlowFile content from the NiFi content_repository and then writing it to some target folder location. I would once again look at the disk I/O of both locations when this is happening.

The CPU usage may be high simply because these threads are running a long time waiting on disk I/O.

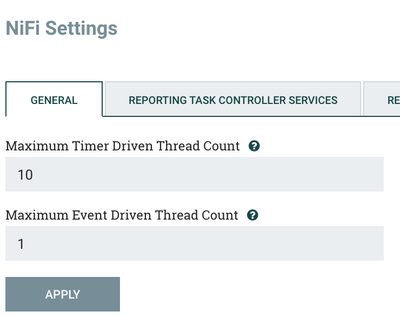

NiFi uses CPU for its core level functions and then you configure an additional thread pool that is used by the NiFi components you add to the NiFi canvas. This resource pool is configured via NiFi UI --> Global Menu (upper right corner of UI) --> Controller Settings:

The "Event Driven" thread pool is experimental and deprecated and is used by processors configured to use the event driven scheduling strategy. Stay away from this scheduling strategy.

The "Timer Driven" thread pool is used by controller services, reporting tasks, processors, etc. Processors use it when configured with the "Timer Driven" or "CRON Driven" scheduling strategy.

This pool is what the NiFi controller has available to hand out to all processors requesting time to execute. Setting it to an arbitrarily high value simply leads to many NiFi components getting threads to execute but then spending excessive time in CPU wait, because time on the limited cores is sliced across all active threads. The general rule of thumb is to set the pool to 2 to 4 times the number of cores available on a single NiFi host/node, so for your 8-core server you would want it between 16 and 32. This does not mean you can't set it higher, but you should only do so in small increments while monitoring CPU usage over an extended period of time. This setting is per node, so with 5 nodes you would have a thread pool of 16 to 32 on each NiFi host/node.
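As a quick illustration of the rule of thumb above, here is a minimal Python sketch that computes the suggested range for the Timer Driven pool from a core count. The function name `timer_driven_pool_range` is hypothetical and not part of NiFi; the 2x and 4x factors are simply the ones mentioned above, and the result is a starting point rather than a hard limit.

```python
import os


def timer_driven_pool_range(cores, low_factor=2, high_factor=4):
    """Return (min, max) suggested Timer Driven thread count for ONE node.

    Hypothetical helper: applies the 2x to 4x cores rule of thumb.
    It does not read or change any NiFi setting.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return cores * low_factor, cores * high_factor


if __name__ == "__main__":
    # The 8-core nodes described in the question.
    print(timer_driven_pool_range(8))

    # The machine this script runs on (os.cpu_count() may return None).
    local_cores = os.cpu_count() or 1
    print(local_cores, timer_driven_pool_range(local_cores))
```

For the 8-core nodes in the question this gives 16 to 32, applied separately on each of the 5 nodes.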

Another thing you may want to start looking at is the GC stats for your JVM. Is GC (young and old) running very often? Is it taking a long time to run? GC involves stop-the-world pauses, during which the JVM's application threads are simply paused, and this also affects how long a thread appears to be "running".

You can get some interesting details about your running NiFi using the built-in NiFi diagnostics tool.

<path to NiFi>/bin/nifi.sh diagnostics --verbose <path/filename where output should be written>

For a NiFi node to remain connected to the cluster, the elected cluster coordinator (CC) must successfully process at least 1 out of every 8 scheduled heartbeats from that node. Let's say the heartbeat interval is configured in the nifi.properties file as 5 secs; then the elected CC must successfully process at least 1 heartbeat every 40 secs, or that node would get disconnected for lack of heartbeat. After being disconnected for this reason, the node initiates a reconnection once a heartbeat is received. Configuring a larger heartbeat interval will help avoid this disconnect/reconnect cycle by allowing more time before a heartbeat is considered lost. This gives more time if the node is going through a long GC pause or the CPU is so saturated that it can't get a thread to create a heartbeat.
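The heartbeat arithmetic above can be sketched in a few lines of Python. This is a simplified model, not NiFi code: the function names are hypothetical, the factor of 8 is the one described above, and it treats a GC pause or CPU stall as a single window in which no heartbeat gets through.

```python
def heartbeat_disconnect_window(interval_secs, missable_heartbeats=8):
    """Seconds without a processed heartbeat before a node is disconnected."""
    if interval_secs <= 0:
        raise ValueError("interval_secs must be positive")
    return interval_secs * missable_heartbeats


def survives_stall(stall_secs, interval_secs, missable_heartbeats=8):
    """True if a stall of stall_secs is shorter than the disconnect window."""
    return stall_secs < heartbeat_disconnect_window(
        interval_secs, missable_heartbeats
    )


if __name__ == "__main__":
    # Compare a few candidate heartbeat intervals against a 45 second stall.
    for interval in (5, 15, 30):
        window = heartbeat_disconnect_window(interval)
        print(interval, window, survives_stall(45, interval))
```

With a 5 sec interval the window is 40 secs, so a 45 sec stall would disconnect the node; with a 15 sec interval the window grows to 120 secs and the same stall is tolerated.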

I also recommend reading through this community article:

https://community.cloudera.com/t5/Community-Articles/HDF-CFM-NIFI-Best-practices-for-setting-up-a-hi...

If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank you,

Matt

Created 10-22-2021 07:41 PM


Thanks Matt, it is very helpful.