Support Questions


No errors in error logs - services start green but then turn RED after 60 seconds

Labels: Apache Hive

Created on 07-26-2016 12:23 PM - edited 09-16-2022 03:31 AM


I am using the Hortonworks Sandbox - it previously ran just fine.

I had not used the system for some time, and now it seems broken, even after a reboot.

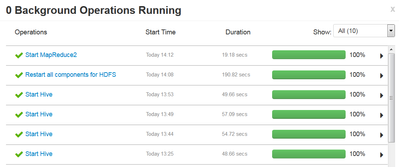

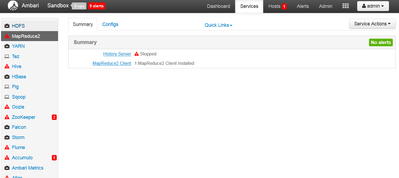

Whenever I start a service (e.g. Hive), all process steps appear green. The service shows green for about 60 seconds, BUT then switches back to RED. It does not matter which service I start: the behaviour is the same for all of them. Every service starts up fine BUT then switches to RED.

The 9 alerts are all OLD errors from 5 months ago. Any hint on where to look for the error is appreciated.

Created 07-26-2016 04:43 PM


For the Hive errors, it looks like Hive is having issues communicating with ZooKeeper. If ZooKeeper won't start up, then that's not surprising.
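To check ZooKeeper directly rather than through the Ambari status icon, one option is ZooKeeper's `ruok` four-letter command, which a healthy server answers with `imok`. A minimal sketch, assuming bash (for `/dev/tcp`), the coreutils `timeout` command, and the default client port 2181 seen in the logs in this thread. `zk_ok` is a hypothetical helper, and ZooKeeper 3.5.3 and later only answer four-letter commands listed in `4lw.commands.whitelist`.

```shell
#!/usr/bin/env bash
# zk_ok HOST [PORT]: succeed only if the ZooKeeper server at HOST:PORT answers
# the "ruok" four-letter command with "imok". Hypothetical helper; needs bash
# (for /dev/tcp) and the coreutils `timeout` command.
zk_ok() {
  local host=$1 port=${2:-2181} reply
  # Connect, send "ruok", and read until the server closes the connection.
  # Everything runs in a child bash under a 5-second timeout, so a refused
  # connection or a hung server just yields a non-zero status.
  reply=$(timeout 5 bash -c '
    exec 3<>"/dev/tcp/$1/$2" || exit 1
    printf "ruok" >&3
    cat <&3
  ' zk_probe "$host" "$port" 2>/dev/null) || return 1
  [ "$reply" = "imok" ]
}

# Example: zk_ok localhost 2181 && echo "ZooKeeper is serving" || echo "ZooKeeper is down"
```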

For the Ambari Agent errors, it looks like you have a corrupted JSON data file in your ambari-agent (/var/lib/ambari-agent/data/structured-out-status.json). Try moving the file to a different name, like ".backup", and restarting all of the services. I would do a "stop all" of the services, if it is available, then start only ZooKeeper to see if it comes up cleanly. After that, try starting the HDFS service. Then you can do a "start all".
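Before renaming the file, it may be worth confirming that it really is unparseable. A minimal sketch, assuming a Python interpreter is available (its standard `json.tool` module serves only as a JSON validator). `quarantine_if_invalid_json` is a hypothetical helper; the path in the example is the one quoted above.

```shell
#!/usr/bin/env bash
# quarantine_if_invalid_json FILE: rename FILE to FILE.backup if it does not
# parse as JSON; leave it alone otherwise. Hypothetical helper; Python's
# json.tool module is used only as a validator (set PYTHON=python on systems
# without python3).
quarantine_if_invalid_json() {
  local f=$1
  if [ ! -f "$f" ]; then
    echo "no such file: $f" >&2
    return 2
  fi
  # Refuse to guess if the validator itself is missing.
  if ! command -v "${PYTHON:-python3}" >/dev/null 2>&1; then
    echo "python interpreter not found; set PYTHON" >&2
    return 2
  fi
  if "${PYTHON:-python3}" -m json.tool "$f" >/dev/null 2>&1; then
    echo "valid JSON, leaving in place: $f"
  else
    mv -- "$f" "$f.backup" && echo "invalid JSON, moved to: $f.backup"
  fi
}

# Example (as root on the sandbox):
# quarantine_if_invalid_json /var/lib/ambari-agent/data/structured-out-status.json
```

An empty or truncated file, which is what a full disk tends to leave behind, fails validation and gets moved aside; a file that still parses is left untouched.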

Created 07-27-2016 01:15 PM


So ZooKeeper is now the only process not starting... it seems there are more corrupt files. Is there an option to install the ZooKeeper service again in the Sandbox?

2016-07-27 13:15:35,961 - INFO [main:Environment@100] - Server environment:user.name=zookeeper

2016-07-27 13:15:35,961 - INFO [main:Environment@100] - Server environment:user.home=/home/zookeeper

2016-07-27 13:15:35,961 - INFO [main:Environment@100] - Server environment:user.dir=/home/zookeeper

2016-07-27 13:15:35,962 - INFO [main:ZooKeeperServer@755] - tickTime set to 2000

2016-07-27 13:15:35,962 - INFO [main:ZooKeeperServer@764] - minSessionTimeout set to -1

2016-07-27 13:15:35,962 - INFO [main:ZooKeeperServer@773] - maxSessionTimeout set to -1

2016-07-27 13:15:35,971 - INFO [main:NIOServerCnxnFactory@94] - binding to port 0.0.0.0/0.0.0.0:2181

2016-07-27 13:15:35,987 - INFO [main:FileSnap@83] - Reading snapshot /hadoop/zookeeper/version-2/snapshot.1612d5

2016-07-27 13:15:35,997 - INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181:NIOServerCnxnFactory@197] - Accepted socket connection from /10.13.8.41:40623

2016-07-27 13:15:36,005 - WARN [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181:NIOServerCnxn@362] - Exception causing close of session 0x0 due to java.io.IOException: ZooKeeperServer not running

2016-07-27 13:15:36,005 - INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181:NIOServerCnxn@1007] - Closed socket connection for client /10.13.8.41:40623 (no session established for client)

2016-07-27 13:15:36,922 - ERROR [main:ZooKeeperServerMain@63] - Unexpected exception, exiting abnormally

java.io.EOFException

at java.io.DataInputStream.readInt(DataInputStream.java:392)

at org.apache.jute.BinaryInputArchive.readInt(BinaryInputArchive.java:63)

at org.apache.zookeeper.server.persistence.FileHeader.deserialize(FileHeader.java:64)

at org.apache.zookeeper.server.persistence.FileTxnLog$FileTxnIterator.inStreamCreated(FileTxnLog.java:576)

at org.apache.zookeeper.server.persistence.FileTxnLog$FileTxnIterator.createInputArchive(FileTxnLog.java:595)

at org.apache.zookeeper.server.persistence.FileTxnLog$FileTxnIterator.goToNextLog(FileTxnLog.java:561)

at org.apache.zookeeper.server.persistence.FileTxnLog$FileTxnIterator.next(FileTxnLog.java:643)

at org.apache.zookeeper.server.persistence.FileTxnSnapLog.restore(FileTxnSnapLog.java:158)

at org.apache.zookeeper.server.ZKDatabase.loadDataBase(ZKDatabase.java:223)

at org.apache.zookeeper.server.ZooKeeperServer.loadData(ZooKeeperServer.java:272)

at org.apache.zookeeper.server.ZooKeeperServer.startdata(ZooKeeperServer.java:399)

at org.apache.zookeeper.server.NIOServerCnxnFactory.startup(NIOServerCnxnFactory.java:122)

at org.apache.zookeeper.server.ZooKeeperServerMain.runFromConfig(ZooKeeperServerMain.java:113)

at org.apache.zookeeper.server.ZooKeeperServerMain.initializeAndRun(ZooKeeperServerMain.java:86)

at org.apache.zookeeper.server.ZooKeeperServerMain.main(ZooKeeperServerMain.java:52)

at org.apache.zookeeper.server.quorum.QuorumPeerMain.initializeAndRun(QuorumPeerMain.java:116)
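One detail in this trace may matter for the cleanup: the `EOFException` is thrown from `FileHeader.deserialize` inside `FileTxnLog$FileTxnIterator`, after the `Reading snapshot` line. That suggests ZooKeeper failed while opening a transaction log (a `log.*` file in the same `version-2` directory) rather than while reading the snapshot itself. The sketch below lists snapshot and transaction-log files too short to hold even a file header. The 16-byte header size (magic number, format version, database id) is an assumption based on ZooKeeper 3.4's on-disk format, `short_zk_files` is a hypothetical helper, and it only reports files; it deletes nothing.

```shell
#!/usr/bin/env bash
# short_zk_files [DIR]: list snapshot.* and log.* files in a ZooKeeper data
# directory that are smaller than the assumed 16-byte file header, i.e. files
# whose header cannot even be read. Hypothetical helper; read-only.
short_zk_files() {
  local dir=${1:-/hadoop/zookeeper/version-2} f size
  for f in "$dir"/snapshot.* "$dir"/log.*; do
    [ -f "$f" ] || continue              # skip glob patterns that matched nothing
    size=$(( $(wc -c < "$f") ))          # arithmetic strips any padding from wc
    if [ "$size" -lt 16 ]; then
      printf '%s\t%s bytes\n' "$f" "$size"
    fi
  done
}

# Example: short_zk_files /hadoop/zookeeper/version-2
```

Anything it lists is a candidate to move aside (not delete) before restarting ZooKeeper. A file truncated somewhere past its header would fail later in the read and is not caught by this check.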

Created 07-27-2016 02:28 PM


The ZooKeeper startup error seems to come from a broken ZooKeeper snapshot that cannot be read. Can I delete this snapshot without creating more problems?

2016-07-27 14:29:33,805 - INFO [main:Environment@100] - Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:java.io.tmpdir=/tmp

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:java.compiler=<NA>

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:os.name=Linux

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:os.arch=amd64

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:os.version=2.6.32-573.12.1.el6.x86_64

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:user.name=zookeeper

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:user.home=/home/zookeeper

2016-07-27 14:29:33,806 - INFO [main:Environment@100] - Server environment:user.dir=/home/zookeeper

2016-07-27 14:29:33,807 - INFO [main:ZooKeeperServer@755] - tickTime set to 2000

2016-07-27 14:29:33,807 - INFO [main:ZooKeeperServer@764] - minSessionTimeout set to -1

2016-07-27 14:29:33,807 - INFO [main:ZooKeeperServer@773] - maxSessionTimeout set to -1

2016-07-27 14:29:33,815 - INFO [main:NIOServerCnxnFactory@94] - binding to port 0.0.0.0/0.0.0.0:2181

2016-07-27 14:29:33,829 - INFO [main:FileSnap@83] - Reading snapshot /hadoop/zookeeper/version-2/snapshot.1612d5

2016-07-27 14:29:34,826 - ERROR [main:ZooKeeperServerMain@63] - Unexpected exception, exiting abnormally

java.io.EOFException

at java.io.DataInputStream.readInt(DataInputStream.java:392)

at org.apache.jute.BinaryInputArchive.readInt(BinaryInputArchive.java:63)

Created 07-27-2016 03:08 PM


OK, it works now. I removed all the snapshot and log files in /var/log/zookeeper, shut everything down, and then started ZooKeeper on its own, then HDFS, then YARN, and then everything else.
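The start order described above (ZooKeeper, then HDFS, then YARN) can also be driven through Ambari's REST API instead of the web UI. A minimal sketch, assuming Ambari on `localhost:8080`, a cluster named `Sandbox`, and `admin:admin` credentials (all three are assumptions; check yours). `start_service`, `wait_started`, and `start_in_order` are hypothetical helpers; with `DRY_RUN=1` they only print the requests.

```shell
#!/usr/bin/env bash
# Assumed connection details for the sandbox; override via the environment.
AMBARI=${AMBARI:-http://localhost:8080}
CLUSTER=${CLUSTER:-Sandbox}
AUTH=${AUTH:-admin:admin}

# start_service NAME: ask Ambari to put service NAME into the STARTED state.
# With DRY_RUN=1 the request is printed instead of sent.
start_service() {
  local url="$AMBARI/api/v1/clusters/$CLUSTER/services/$1"
  local body='{"RequestInfo":{"context":"Start '"$1"'"},"Body":{"ServiceInfo":{"state":"STARTED"}}}'
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "PUT $url $body"
    return 0
  fi
  curl -fsS -u "$AUTH" -H 'X-Requested-By: ambari' -X PUT -d "$body" "$url" >/dev/null
}

# wait_started NAME [TRIES]: poll every 10 seconds until Ambari reports NAME
# as STARTED; give up after TRIES polls (default 30, about 5 minutes).
wait_started() {
  local url="$AMBARI/api/v1/clusters/$CLUSTER/services/$1?fields=ServiceInfo/state"
  local i=0
  [ "${DRY_RUN:-0}" = 1 ] && return 0
  while [ "$i" -lt "${2:-30}" ]; do
    curl -fsS -u "$AUTH" "$url" | grep -Eq '"state" *: *"STARTED"' && return 0
    sleep 10
    i=$((i + 1))
  done
  return 1
}

# start_in_order: start ZooKeeper, then HDFS, then YARN, one at a time,
# and stop at the first service that does not come up.
start_in_order() {
  local svc
  for svc in ZOOKEEPER HDFS YARN; do
    if ! { start_service "$svc" && wait_started "$svc"; }; then
      echo "$svc did not reach STARTED; check its logs under /var/log" >&2
      return 1
    fi
  done
}

# Example: DRY_RUN=1 start_in_order   # print the requests only
#          start_in_order             # actually start the services
```

Waiting for each service to report STARTED before requesting the next one is what enforces the order; firing all three requests at once would not.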

For people that might find this thread:

What happened: my filesystem ran full and the system stopped, leaving lots of corrupted files. Ambari did not catch the errors because they were at the filesystem level; the problem was corrupted files.

How to solve it:

1. Shut down all services.

2. Start each service on its own.

3. If it fails, check /var/log/<SERVICENAME> (e.g. /var/log/zookeeper).

4. Find the most recent log file.

5. Follow it with tail -f /var/log/zookeeper/zookeeper.out, or show only the error lines with:

tail -f /var/log/zookeeper/zookeeper.out | grep --line-buffered ERROR

2016-07-27 14:29:33,829 - INFO [main:FileSnap@83] - Reading snapshot /hadoop/zookeeper/version-2/snapshot.1612d5

2016-07-27 14:29:34,826 - ERROR [main:ZooKeeperServerMain@63] - Unexpected exception, exiting abnormally

java.io.EOFException

at java.io.DataInputStream.readInt(DataInputStream.java:392)

at org.apache.jute.BinaryInputArchive.readInt(BinaryInputArchive.java:63)

6. Start the service again and analyze the errors. Right before it fails with an uncaught exception, the log shows the file it is trying to read. Move that file out of the way: copy it to another location or rename it to file.BACKUP, so the original is no longer there. Start the service again and check for errors; repeat steps 5 and 6 until no more read errors are found.

7. Do this for every service that fails to start. If you are lucky, you will be able to find all the corrupted files and remove them.
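Steps 4 to 6 can be partly scripted. A minimal sketch; `newest_log`, `path_before_error`, and `quarantine_from_log` are hypothetical helpers, not part of Ambari or HDP. They pick the most recently modified file in a log directory, take the last absolute file path logged before the most recent ERROR line, and rename that file to `<file>.BACKUP` only when given `--move`. "The last path before the error" is a heuristic: it reports the file the service last mentioned, which is not necessarily the one that failed to parse, so check the stack trace before moving anything.

```shell
#!/usr/bin/env bash
# newest_log DIR: print the most recently modified regular file in DIR (step 4).
newest_log() {
  local name
  ls -1t -- "$1" | while IFS= read -r name; do
    if [ -f "$1/$name" ]; then
      printf '%s\n' "$1/$name"
      break
    fi
  done
}

# path_before_error LOGFILE: print the last absolute path seen before the most
# recent " ERROR " line. Paths must start with "/" plus a letter, so socket
# addresses such as "/0.0.0.0:2181" or "/10.13.8.41:40623" are ignored.
path_before_error() {
  awk '
    / ERROR / { found = last; last = ""; next }
    match($0, /\/[A-Za-z][^ :]*/) { last = substr($0, RSTART, RLENGTH) }
    END { if (found != "") print found }
  ' "$1"
}

# quarantine_from_log LOGFILE [--move]: report the candidate file (step 6);
# with --move, rename it to <file>.BACKUP instead of deleting it.
quarantine_from_log() {
  local target
  target=$(path_before_error "$1")
  if [ -z "$target" ] || [ ! -f "$target" ]; then
    echo "no existing file path found before an ERROR line in $1" >&2
    return 1
  fi
  echo "candidate: $target"
  if [ "${2:-}" = "--move" ]; then
    mv -- "$target" "$target.BACKUP" && echo "moved to: $target.BACKUP"
  fi
}

# Example for ZooKeeper (inspect the candidate first, then re-run with --move):
# quarantine_from_log "$(newest_log /var/log/zookeeper)"
```

Using the most recent ERROR line rather than the first one means the helper keeps working when a log such as zookeeper.out accumulates several failed starts.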

Lesson learned: if you let your filesystem run full, you are in for an adventure. Thanks, everyone, for your help. I hope this helps others with similar problems trying Hadoop on this sandbox.
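Since the root cause was a filesystem that filled up, a periodic free-space check may be cheap insurance. A minimal sketch; `full_mounts` is a hypothetical helper, the 90% default threshold is an arbitrary choice, and it relies on the POSIX `df -P` output format, where capacity and mount point are the last two columns (mount points containing spaces are not handled).

```shell
#!/usr/bin/env bash
# full_mounts [THRESHOLD]: read `df -P` output on stdin and print "MOUNTPOINT USE%"
# for every filesystem whose capacity is at or above THRESHOLD percent (default 90).
full_mounts() {
  awk -v t="${1:-90}" '
    NR > 1 {                                        # skip the df header line
      use = $(NF - 1)                               # Capacity column, e.g. "97%"
      sub(/%$/, "", use)
      if (use + 0 >= t + 0) print $NF, $(NF - 1)    # mount point is the last column
    }
  '
}

# Example, e.g. from cron: df -P | full_mounts 85
```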

Created 07-27-2016 11:23 PM


Good catch! I'm glad you took the time to write up your findings for others to learn.
