Non-DFS storage occupied in Hadoop mount in Linux server

- Labels: Apache Hadoop

Created on 06-12-2018 02:04 PM - edited 09-16-2022 06:20 AM

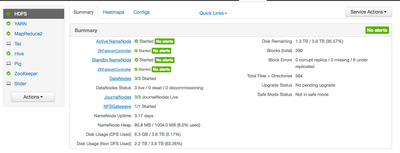

I am working on a 16-node cluster and recently ran into an issue with non-DFS storage: the /hadoop/ mount I am using is being consumed by non-DFS data. When I looked, I found a lot of block files named blk_12345 etc., each with a matching .meta file. Each block file is 128 MB and each .meta file is 1.1 MB (in total, these files consume 1.7 TB of cluster storage). Please let me know whether I can remove these files and what the impact would be if I do.

Also, why are they created?

Created on 06-21-2018 07:02 PM - edited 08-17-2019 07:23 PM

Hi @Karthik Chandrashekhar!

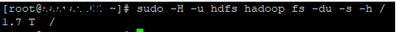

Sorry for the delay. Looking at your du outputs, HDFS seems to be doing okay with the DFS total size.

If you sum the values under /hadoop/hadoop/hdfs/data across the 16 hosts, the total comes to 1.7 TB.

Do you have a dedicated mount for /hadoop/hadoop/hdfs/data, or is everything under the / directory? And how many disks do you have?

E.g., in my lab everything is under the / directory on a single disk.

[root@c1123-node3 hadoop]# df -h

Filesystem Size Used Avail Use% Mounted on

rootfs 1.2T 731G 423G 64% /

overlay 1.2T 731G 423G 64% /

tmpfs 126G 0 126G 0% /dev

tmpfs 126G 0 126G 0% /sys/fs/cgroup

/dev/mapper/vg01-vsr_lib_docker 1.2T 731G 423G 64% /etc/resolv.conf

/dev/mapper/vg01-vsr_lib_docker 1.2T 731G 423G 64% /etc/hostname

/dev/mapper/vg01-vsr_lib_docker 1.2T 731G 423G 64% /etc/hosts

shm 64M 12K 64M 1% /dev/shm

overlay 1.2T 731G 423G 64% /proc/meminfo

If I run du --max-depth=1 -h /hadoop/hdfs/data on all hosts and add up the results, I get my DFS usage.

And if I run du --max-depth=1 -h / on all hosts and subtract the value for the HDFS directory, I get the total non-DFS usage.

So the math would be:

DFS Usage = Total DU on the HDFS Path

NON-DFS Usage = Total DU - (DFS Usage)

For each disk.
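The subtraction above can be sketched as a small shell helper. This is only an illustration: the function name `non_dfs_usage` and the sample numbers are made up, and the `du` calls in the comments assume GNU du and the /hadoop paths mentioned in this thread. HDFS's own reports may compute non-DFS usage somewhat differently, so expect rough agreement at best.

```shell
#!/usr/bin/env bash
# Hypothetical helper: non-DFS usage = total disk usage - DFS usage.
# Both arguments must be in the same unit (bytes here).
non_dfs_usage() {
  local total_du=$1 dfs_du=$2
  echo $(( total_du - dfs_du ))
}

# On a real host the inputs could come from GNU du, for example:
#   total=$(du -sxb /hadoop | cut -f1)
#   dfs=$(du -sb /hadoop/hadoop/hdfs/data | cut -f1)
#   non_dfs_usage "$total" "$dfs"

# Invented numbers: 500 bytes used on the disk, 350 of them under the HDFS data dir.
non_dfs_usage 500 350   # prints 150
```

Run once per disk and per host, then sum the results to get a cluster-wide figure.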

To answer your last question: the finalized folder is where HDFS keeps the blocks that have been processed. Deleting these files will probably make HDFS raise alerts (for example, a block missing a replica, or a corrupted block).

I completely understand your concern about the storage getting almost full. If you aren't able to delete any data outside of HDFS, I'd try to delete old and unused files from HDFS (using the hdfs dfs command!), compress any raw data, use file formats with compression enabled, or, as a last resort, lower the replication factor (kindly remember that changing this may cause some problems). Just a friendly reminder: everything under dfs.datanode.data.dir is used internally by HDFS for storage 🙂
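To get a feel for what lowering the replication factor could free up, here is a back-of-the-envelope sketch. It assumes raw disk usage scales linearly with the replication factor and that every file currently uses factor 3; neither is confirmed in this thread, and `raw_after_setrep` is a made-up name.

```shell
#!/usr/bin/env bash
# Hypothetical estimate: new_raw = current_raw * new_rf / old_rf
# (integer arithmetic, so the result is rounded down).
raw_after_setrep() {
  local current_raw=$1 old_rf=$2 new_rf=$3
  echo $(( current_raw * new_rf / old_rf ))
}

# 1700 GB is the 1.7 TB from this thread; going from 3 to 2 replicas is an assumption.
raw_after_setrep 1700 3 2   # prints 1133
```

Keep in mind that existing files keep their replication factor until it is changed explicitly, for example with `hdfs dfs -setrep -w 2 /some/path` (the path is a placeholder).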

Hope this helps!

Created 06-12-2018 05:21 PM

I'm not sure I understand you correctly, but my advice would be not to delete these files. They belong to the HDFS DataNode: the blk_12345 entries are blocks plus their .meta files, i.e. the data stored in HDFS.
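As a rough cross-check on the sizes reported in the question: a .meta file holds the checksums for its block. Assuming the usual defaults (one 4-byte CRC per 512 bytes of data, i.e. dfs.bytes-per-checksum = 512, plus a small header taken as 7 bytes here), a full 128 MB block yields a .meta file of about 1 MB, which is in line with the roughly 1.1 MB mentioned above. None of these defaults are stated in this thread, so treat them as assumptions; `meta_size_bytes` is a made-up name.

```shell
#!/usr/bin/env bash
# Estimate the size of a block's .meta file from the block size.
# Assumptions (not confirmed in this thread): 512 data bytes per checksum,
# a 4-byte CRC per chunk, and a 7-byte header.
meta_size_bytes() {
  local block_bytes=$1
  local bytes_per_checksum=512 checksum_bytes=4 header_bytes=7
  # Round up so a partial trailing chunk still gets its own checksum.
  local chunks=$(( (block_bytes + bytes_per_checksum - 1) / bytes_per_checksum ))
  echo $(( chunks * checksum_bytes + header_bytes ))
}

meta_size_bytes $(( 128 * 1024 * 1024 ))   # full 128 MiB block, prints 1048583
```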

If you want to know which file belongs to which block, you can use the following command:

[hdfs@node2 ~]$ cd /hadoop/hdfs/data/current/BP-686380642-172.25.33.129-1527546468579/current/finalized/subdir0/subdir0/

[hdfs@node2 subdir0]$ ls | head -2

blk_1073741825

blk_1073741825_1001.meta

[hdfs@node2 ~]$ hdfs fsck / -files -locations -blocks -blockId blk_1073741825

Connecting to namenode via http://node3:50070/fsck?ugi=hdfs&files=1&locations=1&blocks=1&blockId=blk_1073741825+&path=%2F

FSCK started by hdfs (auth:SIMPLE) from /MYIP at Tue Jun 12 14:54:08 UTC 2018

Block Id: blk_1073741825

Block belongs to: /hdp/apps/2.6.4.0-91/mapreduce/mapreduce.tar.gz

No. of Expected Replica: 3

No. of live Replica: 3

No. of excess Replica: 0

No. of stale Replica: 0

No. of decommissioned Replica: 0

No. of decommissioning Replica: 0

No. of corrupted Replica: 0

Block replica on datanode/rack: node2/default-rack is HEALTHY

Block replica on datanode/rack: node3/default-rack is HEALTHY

Block replica on datanode/rack: node4/default-rack is HEALTHY

Hope this helps! 🙂
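A small helper can turn a file name from the finalized directory into the ID that `-blockId` expects. This is a sketch only: `block_id_from_file` is a made-up name, and it assumes file names follow the `blk_<id>` and `blk_<id>_<genstamp>.meta` patterns seen in the listing above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the block ID from a block or .meta file name.
#   blk_1073741825            -> blk_1073741825
#   blk_1073741825_1001.meta  -> blk_1073741825
block_id_from_file() {
  local name
  name=$(basename "$1")
  name=${name%.meta}                 # drop the .meta suffix, if present
  case $name in
    blk_*_*) name=${name%_*} ;;      # drop the trailing generation stamp
  esac
  echo "$name"
}

block_id_from_file blk_1073741825_1001.meta   # prints blk_1073741825

# The result could then be passed on, for example:
#   hdfs fsck / -files -locations -blocks -blockId "$(block_id_from_file blk_1073741825_1001.meta)"
```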

Created 06-13-2018 06:51 AM

Is there a way to move the blocks from the non-DFS mount /hadoop to DFS mounts like /data, and then remove the files if they are still on the /hadoop mount?

Created 06-13-2018 06:06 PM

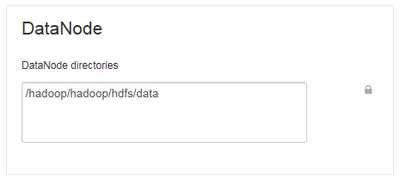

Hey @Bhanu Pamu!

I'm not sure I follow, but if you have /hadoop (non-DFS + DFS files) and want to move them to /data, I guess the best choice would be to add /data to dfs.datanode.data.dir as well, then stop the DataNodes and move the files from /hadoop to /data. I'm not sure whether this is best practice or whether there is another approach, but I'd certainly investigate further before changing anything under HDFS.

Hope this helps! 🙂

Created 06-13-2018 07:09 AM

Thank you very much for the reply and the command to see where the block is being used.

But what I don't understand is this: the file is already present in DFS and occupies a specific amount of space.

Why is there also a block for the same file on non-DFS?

Is it a kind of backup?

Created 06-13-2018 04:43 PM

Hey @Karthik Chandrashekhar!

Hm, but do these blocks sit under the path set in your dfs.datanode.data.dir parameter? If so, they should count as DFS, not non-DFS.

AFAIK, any data outside of HDFS that is written to the same disk mount as the dfs.datanode.data.dir path is counted as non-DFS.

If these blocks are not counted as DFS (i.e. they show up as non-DFS) even though they are in the same path as your dfs.datanode.data.dir value, then we might have an issue there 😞

Btw, could you check your mount points as well?

Hope this helps!

Created on 06-14-2018 07:22 AM - edited 08-17-2019 07:23 PM

Thanks again.

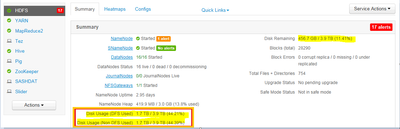

Please find a few screenshots from my system below, and let me know whether I am analyzing this the right way.

DFS Storage:

Storage description in Ambari:

Non-DFS Storage: This is where I have the blk_12345 files.

Created on 06-14-2018 09:38 AM - edited 08-17-2019 07:23 PM

Created 06-15-2018 06:04 PM

Hey @Karthik Chandrashekhar!

Sorry for the delay. Basically, I couldn't spot anything wrong in your configs.

One thing, though, about your disk-util-hadoop.png: could you check whether there is any other subdirectory under the /hadoop mount (besides /hadoop/hadoop/hdfs/data)?

du --max-depth=1 -h /hadoop/hadoop/

or

du --max-depth=1 -h /hadoop/

And just to check the mount points:

lsblk

I think your non-DFS usage is high because HDFS is counting other directories under /hadoop on the same disk.
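To see which sibling directories share the disk with the HDFS data directory, something like the following sketch might help. `list_other_dirs` is a hypothetical name, the `find` options assume GNU or BSD find, and the /hadoop paths in the comment are the ones discussed in this thread.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every first-level subdirectory of a mount
# except the one that contains the HDFS data, so each can be sized with du.
list_other_dirs() {
  local mount="${1%/}" hdfs_parent="${2%/}"
  find "$mount" -mindepth 1 -maxdepth 1 -type d ! -path "$hdfs_parent" | sort
}

# Example on a real host (GNU xargs assumed for -r):
#   list_other_dirs /hadoop /hadoop/hadoop | xargs -r du -sh
```

Anything this prints is data that lives on the same disk but outside the HDFS data directory, which is what gets reported as non-DFS usage.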

And one last thing: what value is set for dfs.datanode.du.reserved?

Hope this helps!

Created 06-19-2018 05:43 AM

Please find below the value of dfs.datanode.du.reserved.

<property> <name>dfs.datanode.du.reserved</name> <value>48204034048</value> </property>
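For scale, the reserved value above can be converted to GiB. The sketch assumes the value is in bytes and is reserved per DataNode volume, which is how the property is usually described; `bytes_to_gib` is a made-up name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a byte count to GiB with two decimals.
bytes_to_gib() {
  awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

bytes_to_gib 48204034048   # dfs.datanode.du.reserved from this thread, prints 44.89
```

So roughly 45 GiB per volume is held back for non-DFS use on each DataNode.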

And regarding your question on subdir.

Yes, I have a folder called subdir1 which is filled with this enormous amount of block data.

It is located in the path below.

/hadoop/hadoop/hdfs/data/current/BP-1468174578-IP_Address-1522297380740/current/finalized

Mountpoints: lsblk