Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Oozie spark action giving key not found SPARK_...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

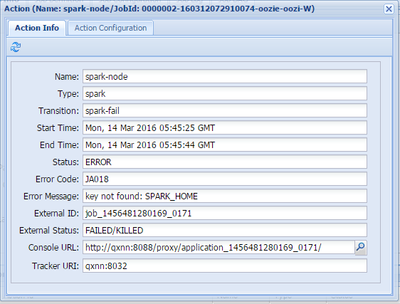

Oozie spark action giving key not found SPARK_HOME error

Labels: Apache Oozie, Apache Spark

Created on 03-14-2016 06:09 AM - edited 08-19-2019 03:08 AM


I recently tried the Spark action with Oozie and ended up getting a "key not found SPARK_HOME" error.

My job.properties file:

==============================================================

jobTracker=qxnn:8032
master=local[2]
queueName=default
examplesRoot=spark-oozie
oozie.use.system.libpath=true
oozie.libpath=/user/oozie/share/lib
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}

workflow.xml:

================================================================

<workflow-app name="sparkJob" xmlns="uri:oozie:workflow:0.1">
    <start to="spark-node"/>
    <action name="spark-node">
        <spark xmlns="uri:oozie:spark-action:0.1">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <configuration>
                <property>
                    <name>mapred.compress.map.output</name>
                    <value>true</value>
                </property>
            </configuration>
            <master>yarn</master>
            <mode>cluster</mode>
            <name>Spark Example</name>
            <jar>/user/yesuser3/spark-oozie/hive2.py</jar>
            <spark-opts>--executor-memory 200M --num-executors 5</spark-opts>
        </spark>
        <ok to="end"/>
        <error to="spark-fail"/>
    </action>
    <kill name="spark-fail">
        <message>Spark action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <end name="end"/>
</workflow-app>

Any help would be greatly appreciated.

Thanks,

SureshKumar

Created 07-19-2016 11:54 PM


As @Artem Ervits mentioned, the Oozie Spark action is not yet supported. Instead, you can follow the alternative described in the tech note below:

--------------------

Begin Tech Note

--------------------

Because the Spark action in Oozie is not supported in HDP 2.3.x and HDP 2.4.0, there is no direct workaround for it, especially in a Kerberos environment. Instead, we can use either a Java action or a shell action to launch the Spark job from an Oozie workflow. In this article, we discuss how to use an Oozie shell action to run a Spark job in a Kerberos environment.

Prerequisites:

1. The Spark client is installed on every host where a NodeManager is running. This is because we have no control over which node the shell action will run on.

2. Optionally, if the Spark job needs to interact with an HBase cluster, the HBase client needs to be installed on every host as well.
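Because the shell action can run on any NodeManager host, it may help to check the first prerequisite on each host before submitting. A minimal Python sketch, assuming you run it on every host yourself (for example, over ssh); the helper name `spark_client_ready` is hypothetical, and the default path is the spark-submit location used in this tech note.

```python
import os

# spark-submit location taken from the script in this tech note.
DEFAULT_SPARK_SUBMIT = "/usr/hdp/current/spark-client/bin/spark-submit"


def spark_client_ready(spark_submit: str = DEFAULT_SPARK_SUBMIT) -> bool:
    """Return True if spark-submit exists on this host and is executable.

    Hypothetical helper: run it on every NodeManager host, since Oozie
    gives no control over which host executes the shell action.
    """
    return os.path.isfile(spark_submit) and os.access(spark_submit, os.X_OK)


if __name__ == "__main__":
    status = "ok" if spark_client_ready() else "MISSING"
    print(f"{DEFAULT_SPARK_SUBMIT}: {status}")
```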

Steps:

1. Create a shell script containing the spark-submit command. For example, in script.sh:

#!/bin/bash
/usr/hdp/current/spark-client/bin/spark-submit \
    --keytab keytab \
    --principal ambari-qa-falconJ@FALCONJSECURE.COM \
    --class org.apache.spark.examples.SparkPi \
    --master yarn-client \
    --driver-memory 500m \
    --num-executors 1 \
    --executor-memory 500m \
    --executor-cores 1 \
    spark-examples.jar 3
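If you generate script.sh from a deployment tool rather than by hand, quoting each argument avoids surprises with special characters. A minimal Python sketch reproducing the command above; the function name `build_script` and its parameters are hypothetical, and `keytab` and `spark-examples.jar` are assumed to be the link names from the `<file>` entries in workflow.xml.

```python
import os
import shlex

SPARK_SUBMIT = "/usr/hdp/current/spark-client/bin/spark-submit"


def build_script(principal: str, keytab_link: str = "keytab",
                 app_jar: str = "spark-examples.jar",
                 main_class: str = "org.apache.spark.examples.SparkPi",
                 app_args: tuple = ("3",)) -> str:
    """Return the text of script.sh for the Oozie shell action (hypothetical helper).

    keytab_link and app_jar are link names of files that Oozie places in
    the action's working directory, so they are relative paths.
    """
    cmd = [
        SPARK_SUBMIT,
        "--keytab", keytab_link,
        "--principal", principal,
        "--class", main_class,
        "--master", "yarn-client",
        "--driver-memory", "500m",
        "--num-executors", "1",
        "--executor-memory", "500m",
        "--executor-cores", "1",
        app_jar, *app_args,
    ]
    # shlex.quote leaves safe tokens unchanged and single-quotes anything else.
    return "#!/bin/bash\n" + " ".join(shlex.quote(c) for c in cmd) + "\n"


if __name__ == "__main__":
    with open("script.sh", "w") as f:
        f.write(build_script("ambari-qa-falconJ@FALCONJSECURE.COM"))
    os.chmod("script.sh", 0o755)  # make the script executable locally
```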

2. Prepare the Kerberos keytab that the Spark job will use. For example, we use the Ambari smoke test user, whose keytab Ambari has already generated at /etc/security/keytabs/smokeuser.headless.keytab.
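Before uploading the keytab, it can be worth confirming that it contains the principal passed to --principal. A minimal Python sketch that shells out to `klist -k`, assuming the MIT Kerberos client tools are installed on the host; the helper names are hypothetical and the output check is deliberately loose (it only looks for the principal as a whitespace-separated token).

```python
import shutil
import subprocess


def principal_in_listing(listing: str, principal: str) -> bool:
    """Return True if the principal appears as a token in klist output."""
    return any(principal in line.split() for line in listing.splitlines())


def keytab_has_principal(keytab: str, principal: str) -> bool:
    """Run `klist -k <keytab>` and look for the principal (hypothetical helper).

    Assumes the MIT Kerberos klist binary is on PATH.
    """
    if shutil.which("klist") is None:
        raise RuntimeError("klist not found on PATH")
    result = subprocess.run(["klist", "-k", keytab],
                            capture_output=True, text=True, check=True)
    return principal_in_listing(result.stdout, principal)


if __name__ == "__main__":
    print(keytab_has_principal("/etc/security/keytabs/smokeuser.headless.keytab",
                               "ambari-qa-falconJ@FALCONJSECURE.COM"))
```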

3. Create the Oozie workflow with a shell action that executes the script created above. For example, in workflow.xml:

<workflow-app name="WorkFlowForShellAction" xmlns="uri:oozie:workflow:0.4">
    <start to="shellAction"/>
    <action name="shellAction">
        <shell xmlns="uri:oozie:shell-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <exec>script.sh</exec>
            <file>/user/oozie/shell/script.sh#script.sh</file>
            <file>/user/oozie/shell/smokeuser.headless.keytab#keytab</file>
            <file>/user/oozie/shell/spark-examples.jar#spark-examples.jar</file>
            <capture-output/>
        </shell>
        <ok to="end"/>
        <error to="killAction"/>
    </action>
    <kill name="killAction">
        <message>"Killed job due to error"</message>
    </kill>
    <end name="end"/>
</workflow-app>
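If you maintain several of these workflows, generating workflow.xml can keep the `<file>` entries in sync with what the script expects. A minimal sketch using Python's standard xml.etree.ElementTree that reproduces the element structure of the example above; the function name and its `files` parameter are hypothetical.

```python
import xml.etree.ElementTree as ET

WF_NS = "uri:oozie:workflow:0.4"
SHELL_NS = "uri:oozie:shell-action:0.2"


def build_shell_workflow(app_path: str, files: dict) -> str:
    """Return workflow.xml text for one shell action (hypothetical helper).

    files maps a file name under app_path to its link name in the action's
    working directory, e.g. {"smokeuser.headless.keytab": "keytab"}.
    """
    # A plain "xmlns" attribute is written out as a default namespace declaration.
    wf = ET.Element("workflow-app", name="WorkFlowForShellAction", xmlns=WF_NS)
    ET.SubElement(wf, "start", to="shellAction")
    action = ET.SubElement(wf, "action", name="shellAction")
    shell = ET.SubElement(action, "shell", xmlns=SHELL_NS)
    ET.SubElement(shell, "job-tracker").text = "${jobTracker}"
    ET.SubElement(shell, "name-node").text = "${nameNode}"
    ET.SubElement(shell, "exec").text = "script.sh"
    for name, link in files.items():
        ET.SubElement(shell, "file").text = f"{app_path}/{name}#{link}"
    ET.SubElement(shell, "capture-output")
    ET.SubElement(action, "ok", to="end")
    ET.SubElement(action, "error", to="killAction")
    kill = ET.SubElement(wf, "kill", name="killAction")
    ET.SubElement(kill, "message").text = '"Killed job due to error"'
    ET.SubElement(wf, "end", name="end")
    ET.indent(wf)  # pretty-printing; needs Python 3.9+
    return ET.tostring(wf, encoding="unicode")


if __name__ == "__main__":
    print(build_shell_workflow("/user/oozie/shell", {
        "script.sh": "script.sh",
        "smokeuser.headless.keytab": "keytab",
        "spark-examples.jar": "spark-examples.jar",
    }))
```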

4. Create the Oozie job properties file. For example, in job.properties:

nameNode=hdfs://falconJ1.sec.support.com:8020
jobTracker=falconJ2.sec.support.com:8050
queueName=default
oozie.wf.application.path=${nameNode}/user/oozie/shell
oozie.use.system.libpath=true
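References such as `${nameNode}` in job.properties are expanded from other properties, so oozie.wf.application.path above points at hdfs://falconJ1.sec.support.com:8020/user/oozie/shell, the directory used in step 5. The Python sketch below imitates that expansion so you can check the resolved path before submitting. It is an illustration, not Oozie's own parser: it assumes plain key=value lines and ignores Java properties escapes, line continuations and EL functions.

```python
import re

_REF = re.compile(r"\$\{([^}]+)\}")


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resolve(value: str, props: dict, max_passes: int = 10) -> str:
    """Expand ${name} references from props; unknown names are left untouched."""
    for _ in range(max_passes):  # bounded so a self-reference cannot loop forever
        expanded = _REF.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if expanded == value:
            break
        value = expanded
    return value


if __name__ == "__main__":
    with open("job.properties") as f:
        props = parse_properties(f.read())
    print(resolve(props["oozie.wf.application.path"], props))
```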

5. Upload the following files created above to the Oozie workflow application path in HDFS (in this example, /user/oozie/shell):

- workflow.xml

- smokeuser.headless.keytab

- script.sh

- the Spark uber jar (in this example, /usr/hdp/current/spark-client/lib/spark-examples*.jar, uploaded as spark-examples.jar to match the <file> entry in workflow.xml)

- any other configuration files referenced in the workflow (optional)
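In each `<file>` element, the part after `#` is the link name under which the file appears in the shell action's working directory; that is why script.sh can pass plain `keytab` and `spark-examples.jar` to spark-submit. The Python sketch below lists every HDFS source path with its link name, which doubles as a checklist of what must be uploaded. The helper name is hypothetical, and falling back to the base name when there is no `#` is an assumption.

```python
import xml.etree.ElementTree as ET

# <file> elements live in the shell-action namespace declared in workflow.xml.
SHELL_FILE = "{uri:oozie:shell-action:0.2}file"


def file_links(workflow_xml: str) -> list:
    """Return (hdfs_path, link_name) pairs for every <file> in the workflow."""
    links = []
    for elem in ET.fromstring(workflow_xml).iter(SHELL_FILE):
        path, _, link = elem.text.strip().partition("#")
        # Assumption: with no '#fragment', the link name is the file's base name.
        links.append((path, link or path.rsplit("/", 1)[-1]))
    return links


if __name__ == "__main__":
    with open("workflow.xml") as f:
        for path, link in file_links(f.read()):
            print(f"{path} -> ./{link}")
```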

6. Run the workflow with the oozie command-line client. For example:

oozie job -oozie http://<oozie-server>:11000/oozie -config job.properties -run
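The oozie client submits jobs through the Oozie server's REST API. If you need to submit from code instead, the Python sketch below builds (but does not send) the equivalent request: a POST to /v1/jobs?action=start with the job properties as a Hadoop-style XML configuration. On a Kerberized cluster like this one, actually sending it also requires SPNEGO authentication, which this sketch does not handle. The helper name, the host name and the user `ambari-qa` are placeholders.

```python
import urllib.request
import xml.etree.ElementTree as ET


def build_submit_request(oozie_url: str, props: dict,
                         user: str) -> urllib.request.Request:
    """Build, but do not send, an Oozie job-submission request (hypothetical helper).

    props holds the job.properties entries; user.name is added because the
    Oozie REST API expects it in the submitted configuration.
    """
    conf = ET.Element("configuration")
    for name, value in {**props, "user.name": user}.items():
        prop = ET.SubElement(conf, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return urllib.request.Request(
        url=oozie_url.rstrip("/") + "/v1/jobs?action=start",
        data=ET.tostring(conf, encoding="utf-8"),
        headers={"Content-Type": "application/xml;charset=UTF-8"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_submit_request(
        "http://oozie-server.example.com:11000/oozie",  # placeholder host
        {"nameNode": "hdfs://falconJ1.sec.support.com:8020",
         "jobTracker": "falconJ2.sec.support.com:8050",
         "queueName": "default",
         "oozie.wf.application.path": "${nameNode}/user/oozie/shell",
         "oozie.use.system.libpath": "true"},
        user="ambari-qa",
    )
    print(req.get_method(), req.full_url)
```

On an unsecured Oozie server, passing the request to `urllib.request.urlopen` would submit and start the job; on a Kerberized cluster, the oozie client shown above remains the simpler route because it handles authentication.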

--------------------

End Tech Note

--------------------

Created 03-14-2016 06:22 AM


Can you please share your HDP version? Also, this issue looks similar to the Oozie bug https://issues.apache.org/jira/browse/OOZIE-2482.

Created 03-14-2016 11:29 AM


The Oozie Spark action is not supported in HDP 2.4 and earlier. This is stated in the release notes and the Spark user guide.
