Cloudera Community: Support Questions

Oracle to Hive table

Labels: Apache Hive, Apache NiFi

Created on 05-25-2018 04:43 PM - edited 08-17-2019 10:04 PM

Hi, I'm new to NiFi.

I'm trying to do a simple data migration from an Oracle database to a Hive table using NiFi 1.4.

I have read some tutorials here and some other threads in this forum. Any pointers would be appreciated, thanks.

Created on 05-26-2018 06:46 AM - edited 08-17-2019 10:03 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

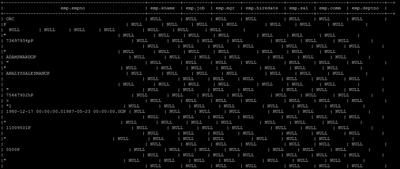

I got it fix the write permission. Now the orc file able to load to hive table but only into one column only. The source data contain 8 column and 14 row only. The result into hive table only into one column and it is in loop.

Created 05-25-2018 11:42 PM

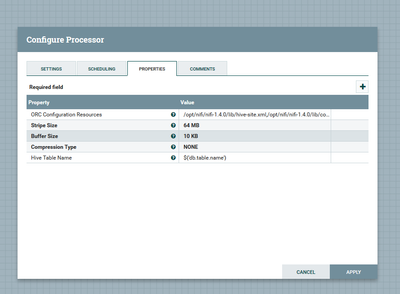

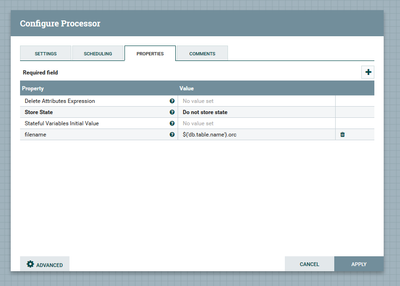

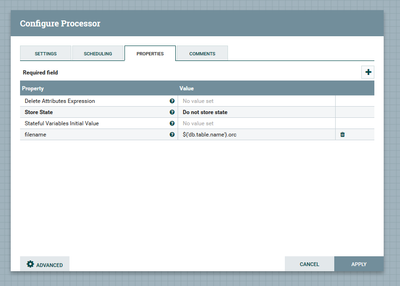

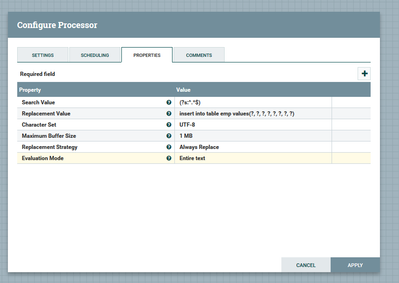

Your PutHDFS processor is placing the data into Hadoop (in ORC format, after ConvertAvroToORC) for use by Hive, so you don't also need to send an INSERT statement to PutHiveQL. Rather, with the pattern you're using, you should have ReplaceText set the content to a Hive DDL statement that creates a table on top of the ORC file(s) location, or to a LOAD DATA INPATH statement that loads from the HDFS location into an existing Hive table.
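
To make the two options concrete, here is a small Python sketch that just builds the HiveQL text ReplaceText would emit in each case. The table name, columns, and HDFS paths are hypothetical placeholders, not values from this thread. Note that LOAD DATA INPATH moves the file(s) as-is and does not convert formats, so the target table would itself need to be declared STORED AS ORC.

```python
def external_table_ddl(table, columns, hdfs_dir):
    """Option 1: CREATE EXTERNAL TABLE over the ORC files already in hdfs_dir."""
    # columns is a list of (name, hive_type) pairs, e.g. [("id", "INT")]
    cols = ", ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} ({cols}) "
        f"STORED AS ORC LOCATION '{hdfs_dir}'"
    )


def load_data_statement(table, hdfs_path):
    """Option 2: LOAD DATA INPATH, which moves the file(s) into an existing table."""
    return f"LOAD DATA INPATH '{hdfs_path}' INTO TABLE {table}"


if __name__ == "__main__":
    # Hypothetical table and paths, for illustration only
    cols = [("id", "INT"), ("name", "STRING")]
    print(external_table_ddl("oracle_emp", cols, "/tmp/oracle_emp"))
    print(load_data_statement("oracle_emp", "/tmp/oracle_emp/part-0.orc"))
```

The external-table route leaves the files where PutHDFS wrote them; the LOAD DATA route relocates them, which is why the user running the statement needs write access to the source directory.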

Created 05-26-2018 05:52 AM

Thanks, Matt, for the pointer. I have changed the ReplaceText content to "LOAD DATA INPATH ...". Now the issue is that NiFi connects as the anonymous user and gets access denied when moving the file.

Created on 05-26-2018 06:46 AM - edited 08-17-2019 10:03 PM

I got it fix the write permission. Now the orc file able to load to hive table but only into one column only. The source data contain 8 column and 14 row only. The result into hive table only into one column and it is in loop.

Created 05-27-2018 03:36 AM

What does your CREATE TABLE statement look like, and does it match the schema of the file(s) (Avro/ORC) you're sending to the external location?
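
One rough way to check that is to compare the field names in the Avro schema coming out of the database query with the columns in the CREATE TABLE statement. The sketch below assumes you have the Avro schema as a JSON string and the table's column names as a list; both the helper names and the sample schema are hypothetical. It only compares names and positions, not types.

```python
import json


def avro_field_names(avro_schema_json):
    """Return the top-level field names of an Avro record schema."""
    schema = json.loads(avro_schema_json)
    return [field["name"] for field in schema["fields"]]


def column_mismatches(avro_schema_json, table_columns):
    """List differences between Avro fields and Hive columns, position by position.

    Names are compared case-insensitively because Hive stores column names in lower case.
    """
    fields = [name.lower() for name in avro_field_names(avro_schema_json)]
    cols = [name.lower() for name in table_columns]
    problems = []
    if len(fields) != len(cols):
        problems.append(f"column count differs: file has {len(fields)}, table has {len(cols)}")
    for pos, (field, col) in enumerate(zip(fields, cols)):
        if field != col:
            problems.append(f"position {pos}: file field '{field}' vs table column '{col}'")
    return problems


if __name__ == "__main__":
    # Hypothetical schema; an Oracle query often yields upper-case field names
    schema = json.dumps({
        "type": "record",
        "name": "emp",
        "fields": [
            {"name": "ID", "type": "int"},
            {"name": "NAME", "type": "string"},
            {"name": "SALARY", "type": "double"},
        ],
    })
    print(column_mismatches(schema, ["id", "name", "salary"]))  # no problems
    print(column_mismatches(schema, ["line"]))  # a one-column table is flagged
```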

Created 05-27-2018 06:51 AM

It works fine now. I changed the ReplaceText replacement value to `${hive.ddl} location '${absolute.hdfs.path}'`.
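
For anyone landing here later: the idea is that ConvertAvroToORC puts a generated CREATE TABLE statement in the `hive.ddl` attribute, PutHDFS records where it wrote the file in `absolute.hdfs.path`, and ReplaceText glues them together so the table definition points at the ORC data. The Python below is only a toy stand-in for the plain `${name}` substitution in that replacement value, not NiFi's Expression Language engine, and the attribute values shown are made up for illustration.

```python
import re

# Matches a plain ${attribute.name} reference (no EL functions supported)
_ATTR_REF = re.compile(r"\$\{([^}]+)\}")


def substitute_attributes(template, attributes):
    """Replace each ${name} in template with attributes[name].

    In this sketch a missing attribute is replaced with an empty string.
    """
    return _ATTR_REF.sub(lambda m: attributes.get(m.group(1), ""), template)


if __name__ == "__main__":
    # Hypothetical flowfile attributes after ConvertAvroToORC and PutHDFS
    attrs = {
        "hive.ddl": "CREATE EXTERNAL TABLE IF NOT EXISTS emp (ID INT, NAME STRING) STORED AS ORC",
        "absolute.hdfs.path": "/user/nifi/emp",
    }
    print(substitute_attributes("${hive.ddl} location '${absolute.hdfs.path}'", attrs))
```

Because the generated DDL already says STORED AS ORC, appending the location gives Hive a table whose declared format and columns come from the same schema as the files it reads.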