Support Questions (Cloudera Community)

Order of files MergeContent processor

Labels: Apache NiFi

Created on 05-31-2018 08:55 AM - edited 09-16-2022 06:17 AM


Hello, I am new to NiFi.

Suppose I have multiple files to be merged, named 0001_0, 0002_0, 0003_0, and so on. The number of files to be merged is determined by the original file, which is not something we can control.

My question is: how do I use the MergeContent processor to do the merge?

Thanks

Created 05-31-2018 11:56 AM


You can use either of the Merge Strategies:

- The 'Defragment' algorithm combines fragments that are associated by attributes back into a single cohesive FlowFile.

- The 'Bin-Packing Algorithm' generates a FlowFile populated by arbitrarily chosen FlowFiles. There are a number of other properties you need to configure depending on what you are trying to achieve.
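To illustrate why Defragment can restore a fixed order, here is a minimal Python sketch, not NiFi's actual implementation. It assumes each FlowFile carries the `fragment.identifier`, `fragment.index` and `fragment.count` attributes that Defragment mode relies on; the dict-based FlowFile model and the helpers `make` and `defragment` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def make(frag_id: str, index: int, count: int, content: bytes) -> dict:
    """Build a toy FlowFile (attributes + content) carrying fragment attributes."""
    return {
        "attributes": {
            "fragment.identifier": frag_id,
            "fragment.index": str(index),
            "fragment.count": str(count),
        },
        "content": content,
    }


def defragment(flowfiles: List[dict]) -> Dict[str, bytes]:
    """Group FlowFiles by fragment.identifier and, for every complete group,
    concatenate the content in fragment.index order."""
    bins: Dict[str, List[dict]] = defaultdict(list)
    for ff in flowfiles:
        bins[ff["attributes"]["fragment.identifier"]].append(ff)

    merged: Dict[str, bytes] = {}
    for frag_id, parts in bins.items():
        expected = int(parts[0]["attributes"]["fragment.count"])
        if len(parts) < expected:
            # Incomplete group: skipped here. NiFi keeps such a bin open until
            # the remaining fragments arrive or the bin reaches its maximum age.
            continue
        parts.sort(key=lambda ff: int(ff["attributes"]["fragment.index"]))
        merged[frag_id] = b"".join(ff["content"] for ff in parts)
    return merged


if __name__ == "__main__":
    # Fragments arrive out of order (1, 0, 2) but are merged as 0, 1, 2.
    arrivals = [make("job-1", 1, 3, b"B"), make("job-1", 0, 3, b"A"),
                make("job-1", 2, 3, b"C")]
    print(defragment(arrivals))  # {'job-1': b'ABC'}
```

Bin-Packing does not consult a fragment index, so the order of the merged content depends on the order in which FlowFiles happen to be binned.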

Please refer to the HCC thread below regarding MergeContent processor usage and configuration:

https://community.hortonworks.com/questions/64337/apache-nifi-merge-content.html

Let us know if you face any issues!

Created 06-01-2018 01:43 AM


Could you please add more details (like flow/config screenshots, sample input data, and expected output) about what you are trying to achieve, so that we can understand your requirements clearly?

Created 06-01-2018 02:44 AM


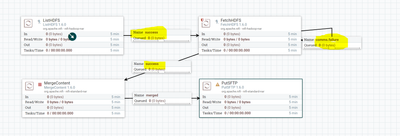

Here is the flow (flow.jpg).

It can be divided into two parts:

1. The first part (top) runs some Hive-like queries to generate files. Please refer to result.jpg for a look.

2. The second part (bottom) does the merge and sends the final data to SFTP. However, the original data is divided into several parts. If I use the MergeContent processor with the Bin-Packing Algorithm, it merges the files in an order like 1→0→2, but I want them in the order 0→1→2.

How can I achieve this?

Created 06-01-2018 09:30 AM


Instead of using the GetHDFS processor, use the ListHDFS/FetchHDFS processors and then use the MergeContent processor to merge.

The ListHDFS processor stores state and checks only for new files created after the stored state.
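The stateful listing described above can be modelled roughly as follows. This Python sketch is a simplification, not the ListHDFS source: it assumes the stored state is the newest modification time already listed plus the names listed at that time, and the `IncrementalLister` class and the `(path, mtime)` listing format are hypothetical.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical listing entry: (path, modification_time_millis).
Entry = Tuple[str, int]


class IncrementalLister:
    """Toy model of listing with state: remember the newest modification time
    already emitted and only return entries that have not been listed yet."""

    def __init__(self) -> None:
        self.latest_ts = -1
        self.seen_at_latest: Set[str] = set()

    def list_new(self, listing: Iterable[Entry]) -> List[str]:
        new = [
            (path, ts) for path, ts in listing
            if ts > self.latest_ts
            or (ts == self.latest_ts and path not in self.seen_at_latest)
        ]
        if new:
            newest = max(ts for _, ts in new)
            if newest > self.latest_ts:
                # State moves forward: forget names from the older timestamp.
                self.latest_ts = newest
                self.seen_at_latest = set()
            self.seen_at_latest.update(p for p, ts in new if ts == newest)
        return sorted(p for p, _ in new)


if __name__ == "__main__":
    lister = IncrementalLister()
    print(lister.list_new([("/data/000000_0", 100), ("/data/000001_0", 100)]))
    # A second run only reports the file that appeared since the first run.
    print(lister.list_new([("/data/000000_0", 100), ("/data/000001_0", 100),
                           ("/data/000002_0", 150)]))
```

Because already-listed files are not emitted again, each file reaches FetchHDFS and MergeContent only once.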

(or)

Create a Hive table on top of this HDFS directory, then use the SelectHiveQL processor with your query:

select * from <db.name>.<tab_name> order by <field-name> asc

Then you don't need the MergeContent processor; you can send the result of the SelectHiveQL processor directly to PutSFTP.

(or)

Once the merge is complete, if you want to order by some field in the flowfile content, you can use the QueryRecord processor and add a new dynamic property with a value like

select * from flowfile order by <field-name> asc

then connect the relationship created for that dynamic property to the PutSFTP processor.
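The effect of that QueryRecord statement on CSV content can be sketched in Python. QueryRecord itself evaluates SQL with Apache Calcite; here the standard-library sqlite3 module stands in for it, and the helper name `order_records` and the sample data are hypothetical. Values are stored as text, so the ordering is lexicographic unless the field is zero-padded.

```python
import csv
import io
import sqlite3


def order_records(csv_text: str, field: str) -> str:
    """Load CSV rows into a table named 'flowfile', run
    SELECT * FROM flowfile ORDER BY <field> ASC, and return CSV again."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = reader.fieldnames or []
    rows = list(reader)
    if field not in columns:
        raise ValueError(f"unknown field: {field}")

    conn = sqlite3.connect(":memory:")
    cols_sql = ", ".join(f'"{c}"' for c in columns)
    conn.execute(f"CREATE TABLE flowfile ({cols_sql})")
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(
        f"INSERT INTO flowfile VALUES ({placeholders})",
        [tuple(r[c] for c in columns) for r in rows],
    )
    # Same shape as the QueryRecord dynamic property value.
    cursor = conn.execute(f'SELECT * FROM flowfile ORDER BY "{field}" ASC')

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(columns)
    writer.writerows(cursor.fetchall())
    conn.close()
    return out.getvalue()


if __name__ == "__main__":
    merged = "seq,value\n2,c\n0,a\n1,b\n"
    # Prints the header followed by the rows 0,a / 1,b / 2,c.
    print(order_records(merged, "seq"), end="")
```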

(or)

Consider adding an EnforceOrder processor before the MergeContent processor; it enforces the order in which flowfiles reach the MergeContent processor.
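A rough model of what EnforceOrder does may help when deciding on its configuration. This Python sketch is not the processor's implementation: it assumes each FlowFile already carries an integer order attribute (for example one set upstream with UpdateAttribute), it ignores EnforceOrder's wait and timeout handling, and the class name `OrderEnforcer` and the attribute names `batch` and `part` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class OrderEnforcer:
    """Toy model: per group, release FlowFiles strictly in ascending order of
    an integer attribute, starting at initial_order; hold the rest."""

    def __init__(self, group_attr: str, order_attr: str, initial_order: int = 0):
        self.group_attr = group_attr
        self.order_attr = order_attr
        self.initial_order = initial_order
        self.next_expected: Dict[str, int] = {}
        self.waiting: Dict[str, Dict[int, dict]] = defaultdict(dict)

    def offer(self, ff: dict) -> List[dict]:
        """Accept one FlowFile (a dict of attributes) and return every
        FlowFile that can now be released in order."""
        group = ff[self.group_attr]
        self.waiting[group][int(ff[self.order_attr])] = ff
        expected = self.next_expected.get(group, self.initial_order)
        released = []
        while expected in self.waiting[group]:
            released.append(self.waiting[group].pop(expected))
            expected += 1
        self.next_expected[group] = expected
        return released


if __name__ == "__main__":
    enforcer = OrderEnforcer(group_attr="batch", order_attr="part")
    out: List[dict] = []
    for part in (1, 0, 2):  # arrival order from the question
        out += enforcer.offer({"batch": "b1", "part": str(part)})
    print([ff["part"] for ff in out])  # ['0', '1', '2']
```

With the FlowFiles released in this order, a downstream merge that keeps arrival order would see 0→1→2.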


If the answer addressed your question, click the Accept button below to accept it. That would be a great help to community users looking for quick solutions to these kinds of issues.

Created 06-04-2018 03:46 AM


@Shu

I have tried method 1 as you suggested.

The overall flow is ListHDFS → RouteOnAttribute → FetchHDFS → MergeContent → PutSFTP.

I use RouteOnAttribute as a filter: I added a new property named filetofetch and set its value to '${filename:contains('000')}'.

But my question is: how do I fill in the HDFS Filename property of the FetchHDFS processor?
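For reference, the routing that the filetofetch property performs can be sketched in Python. It assumes RouteOnAttribute's 'Route to Property name' strategy, where a FlowFile that satisfies the property goes to a relationship named after it and everything else goes to unmatched; the function name `route_on_attribute` and the sample file names are hypothetical.

```python
from typing import Dict, List


def route_on_attribute(filenames: List[str], needle: str = "000") -> Dict[str, List[str]]:
    """Mimic one dynamic property 'filetofetch' = ${filename:contains('000')}:
    matching names go to 'filetofetch', the rest to 'unmatched'."""
    routes: Dict[str, List[str]] = {"filetofetch": [], "unmatched": []}
    for name in filenames:
        # contains() is a plain substring test, like Python's `in`.
        key = "filetofetch" if needle in name else "unmatched"
        routes[key].append(name)
    return routes


if __name__ == "__main__":
    print(route_on_attribute(["000000_0", "000001_0", "report_1000.csv", "_SUCCESS"]))
    # {'filetofetch': ['000000_0', '000001_0', 'report_1000.csv'], 'unmatched': ['_SUCCESS']}
```

Note that contains('000') also matches names such as report_1000.csv; a stricter test such as startsWith or matches may be safer.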

Created on 06-05-2018 10:14 AM - edited 08-17-2019 09:41 PM


The issue is that the relationships feeding from FetchHDFS to the MergeContent processor are comms.failure and failure; please use the success relationship to feed the MergeContent processor.

Flow:

Please change the feeding relationships as shown in the screenshot above.

In addition, save and upload this template to your instance for further reference, and configure the MergeContent/PutSFTP processors.

Created on 06-05-2018 02:44 AM - edited 08-17-2019 09:41 PM


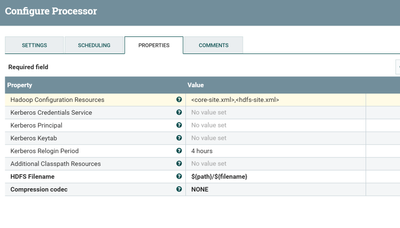

Keep the FetchHDFS processor configs as below.

Give the core-site.xml and hdfs-site.xml paths in the Hadoop Configuration Resources property.

Keep the HDFS Filename property value as

${path}/${filename}

${path} and ${filename} are the attributes the FetchHDFS processor needs to fetch the file from the HDFS directory.

These attributes are added to each flowfile by the ListHDFS processor.
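How FetchHDFS ends up with a concrete path can be illustrated by substituting the attributes written by ListHDFS into `${path}/${filename}`. This Python sketch handles only bare `${name}` references (no Expression Language functions) and treats a missing attribute as an empty string; the function `resolve` and the sample path are hypothetical.

```python
import re
from typing import Dict

# Matches bare references such as ${path} or ${filename}; EL functions are not handled.
_REF = re.compile(r"\$\{([A-Za-z0-9_.]+)\}")


def resolve(expression: str, attributes: Dict[str, str]) -> str:
    """Replace each ${name} with the FlowFile attribute of that name."""
    return _REF.sub(lambda m: attributes.get(m.group(1), ""), expression)


if __name__ == "__main__":
    # Attributes as a listing processor might set them (values are made up).
    attrs = {"path": "/user/hive/warehouse/out", "filename": "000000_0"}
    print(resolve("${path}/${filename}", attrs))  # /user/hive/warehouse/out/000000_0
```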

Created 06-05-2018 02:16 AM


Yes, I added a RouteOnAttribute processor to filter for the files I need,

but the problem is that I don't know how to fill in the Expression Language for FetchHDFS.

I have tried this one, but it doesn't work... (filetofetch.jpg)

Created 06-05-2018 03:13 AM


I have tried the settings you mentioned.

The FetchHDFS processor has incoming flowfiles,

but there is no output... (I have waited for several minutes)

Could you help me take a look at my settings?

Flow: flow.jpg

ListHDFS: listhdfs.jpg

FetchHDFS: fetchhdfs.jpg

Thanks for all your help