Cloudera Community › Support › Support Questions

PUTHDFS processor not working - NoClassDefFoundError

- Labels: Apache Hadoop, Apache NiFi

Created on 05-17-2016 03:52 PM - edited 08-18-2019 05:41 AM

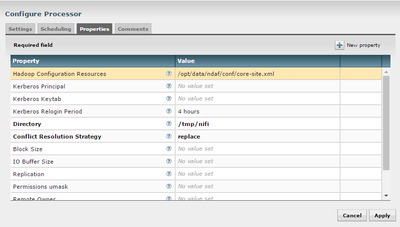

Hi, I configured the PutHDFS processor to write to Hadoop as shown in the image, but it doesn't work.

In the log I see the following error:

```
java.lang.NoClassDefFoundError: org/apache/hadoop/conf/Configurable
	at java.lang.ClassLoader.defineClass1(Native Method) ~[na:1.8.0_45]
	at java.lang.ClassLoader.defineClass(ClassLoader.java:760) ~[na:1.8.0_45]
	at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142) ~[na:1.8.0_45]
	at java.net.URLClassLoader.defineClass(URLClassLoader.java:467) ~[na:1.8.0_45]
	at java.net.URLClassLoader.access$100(URLClassLoader.java:73) ~[na:1.8.0_45]
	at java.net.URLClassLoader$1.run(URLClassLoader.java:368) ~[na:1.8.0_45]
	at java.net.URLClassLoader$1.run(URLClassLoader.java:362) ~[na:1.8.0_45]
	at java.security.AccessController.doPrivileged(Native Method) ~[na:1.8.0_45]
	at java.net.URLClassLoader.findClass(URLClassLoader.java:361) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424) ~[na:1.8.0_45]
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ~[na:1.8.0_45]
	at java.lang.Class.forName0(Native Method) ~[na:1.8.0_45]
	at java.lang.Class.forName(Class.java:348) ~[na:1.8.0_45]
	at org.apache.hadoop.conf.Configuration.getClassByNameOrNull(Configuration.java:2093) ~[na:na]
	at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2058) ~[na:na]
	at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:128) ~[na:na]
	at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:175) ~[na:na]
	at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.getCompressionCodec(AbstractHadoopProcessor.java:375) ~[na:na]
	at org.apache.nifi.processors.hadoop.PutHDFS.onTrigger(PutHDFS.java:220) ~[na:na]
	at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-0.6.1.jar:0.6.1]
	at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1059) ~[nifi-framework-core-0.6.1.jar:0.6.1]
	at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-0.6.1.jar:0.6.1]
	at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-0.6.1.jar:0.6.1]
	at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:123) [nifi-framework-core-0.6.1.jar:0.6.1]
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_45]
	at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_45]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_45]
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_45]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_45]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_45]
	at java.lang.Thread.run(Thread.java:745) [na:1.8.0_45]
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.conf.Configurable
	at java.net.URLClassLoader.findClass(URLClassLoader.java:381) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424) ~[na:1.8.0_45]
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331) ~[na:1.8.0_45]
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ~[na:1.8.0_45]
	... 36 common frames omitted
```

Can anyone help me get the processor working?

Thank you

Created 05-17-2016 04:06 PM

Is that the entire log message? Can you share the lines preceding this stack trace?

Marco,

The NoClassDefFoundError you have encountered is most likely caused by the contents of your core-site.xml file.

Check whether the “io.compression.codecs” property in your “core-site.xml” file contains “com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec”. If it does, remove those entries from the property.

Thanks,

Matt
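A minimal Java sketch of the edit Matt describes, using only the JDK's DOM parser: it reads the `io.compression.codecs` value out of a core-site.xml document and drops the two LZO codec classes named above while keeping the remaining entries in order. The class and method names (`StripLzoCodecs`, `readCodecs`, `stripLzo`) are hypothetical, and the sample XML in `main` is made up for illustration. In practice you would most likely just edit the file by hand; the sketch only makes the transformation explicit.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class StripLzoCodecs {
    static final String CODECS_PROPERTY = "io.compression.codecs";

    // Returns the value of io.compression.codecs from a core-site.xml document, or null if it is not set.
    static String readCodecs(String coreSiteXml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(coreSiteXml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse core-site.xml", e);
        }
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0
                    && CODECS_PROPERTY.equals(names.item(0).getTextContent().trim())) {
                return values.item(0).getTextContent().trim();
            }
        }
        return null;
    }

    // Removes the two LZO codec classes from a comma-separated codec list, keeping the others in order.
    static String stripLzo(String codecs) {
        List<String> kept = new ArrayList<>();
        for (String raw : codecs.split(",")) {
            String name = raw.trim();
            if (name.isEmpty()
                    || name.equals("com.hadoop.compression.lzo.LzoCodec")
                    || name.equals("com.hadoop.compression.lzo.LzopCodec")) {
                continue;
            }
            kept.add(name);
        }
        return String.join(",", kept);
    }

    public static void main(String[] args) {
        // Made-up sample; substitute the contents of your own core-site.xml.
        String coreSite = "<configuration><property>"
            + "<name>io.compression.codecs</name>"
            + "<value>org.apache.hadoop.io.compress.GzipCodec,"
            + "com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec</value>"
            + "</property></configuration>";
        String codecs = readCodecs(coreSite);
        if (codecs == null) {
            System.out.println("io.compression.codecs is not set");
            return;
        }
        System.out.println(stripLzo(codecs));
    }
}
```

The filtered string is what would go back into the `<value>` element; after the change, PutHDFS should no longer try to load the LZO codec classes when it builds its codec factory.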

Created 05-17-2016 03:58 PM

Hi @Marco G,

Probably not related, but I think you need to point to both the core-site and hdfs-site configuration files: "A file or comma separated list of files which contains the Hadoop file system configuration. Without this, Hadoop will search the classpath for a 'core-site.xml' and 'hdfs-site.xml' file or will revert to a default configuration."

Created 05-17-2016 03:59 PM

Hi @Marco G, please try also adding hdfs-site.xml to the configuration resources, separated by a comma.

Created 05-18-2016 08:11 AM

As I answered above, I tried adding hdfs-site.xml, but it is still not working. Thank you

Created 05-18-2016 08:15 AM

Sure, the preceding lines are:

```
2016-05-18 11:10:18,646 INFO [StandardProcessScheduler Thread-1] o.a.n.c.s.TimerDrivenSchedulingAgent Scheduled PutHDFS[id=e985d9ea-b0f7-4e05-ad1e-f7e0c2df5c7c] to run with 1 threads
2016-05-18 11:10:18,646 INFO [Timer-Driven Process Thread-6] o.apache.nifi.processors.hadoop.PutHDFS PutHDFS[id=e985d9ea-b0f7-4e05-ad1e-f7e0c2df5c7c] Kerberos ticket age exceeds threshold [14400 seconds] attempting to renew ticket for user mclaw
2016-05-18 11:10:18,646 INFO [Timer-Driven Process Thread-6] o.apache.nifi.processors.hadoop.PutHDFS PutHDFS[id=e985d9ea-b0f7-4e05-ad1e-f7e0c2df5c7c] Kerberos relogin successful or ticket still valid
2016-05-18 11:10:18,647 ERROR [Timer-Driven Process Thread-6] o.apache.nifi.processors.hadoop.PutHDFS PutHDFS[id=e985d9ea-b0f7-4e05-ad1e-f7e0c2df5c7c] PutHDFS[id=e985d9ea-b0f7-4e05-ad1e-f7e0c2df5c7c] failed to process due to java.lang.NoClassDefFoundError: org/apache/hadoop/conf/Configurable; rolling back session: java.lang.NoClassDefFoundError: org/apache/hadoop/conf/Configurable
2016-05-18 11:10:18,649 ERROR [Timer-Driven Process Thread-6] o.apache.nifi.processors.hadoop.PutHDFS
```

Created 05-18-2016 01:19 PM

Can you try what Matt suggested above and remove "io.compression.codecs" from core-site.xml?

I agree with him that this is likely related to the compression codecs. You can see it in the stack trace; the relevant lines are:

```
	at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2058) ~[na:na]
	at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:128) ~[na:na]
	at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:175) ~[na:na]
	at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.getCompressionCodec(AbstractHadoopProcessor.java:375) ~[na:na]
	at org.apache.nifi.processors.hadoop.PutHDFS.onTrigger(PutHDFS.java:220) ~[na:na]
```
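To check which entries in `io.compression.codecs` can actually be loaded, a small Java sketch can mirror the path visible in the stack trace: `CompressionCodecFactory.getCodecClasses` resolves each codec name through `Configuration.getClassByName`, which ends in `Class.forName`. The class name `CodecLoadCheck` is hypothetical, and `java.util.zip.Deflater` is just a JDK class standing in for a loadable entry so the demo runs without Hadoop on the classpath. A `ClassNotFoundException` means the named class itself is missing; a `NoClassDefFoundError`, as in this thread, suggests the class was found but a type it depends on (here `org.apache.hadoop.conf.Configurable`) is not visible to the loader that defined it.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class CodecLoadCheck {
    // Tries to load each comma-separated class name with the given loader, without running static
    // initializers, and records "ok" or the simple name of the Throwable that was raised.
    static Map<String, String> check(String codecs, ClassLoader loader) {
        Map<String, String> results = new LinkedHashMap<>();
        for (String raw : codecs.split(",")) {
            String name = raw.trim();
            if (name.isEmpty()) {
                continue;
            }
            try {
                Class.forName(name, false, loader);
                results.put(name, "ok");
            } catch (ClassNotFoundException | LinkageError e) {
                // ClassNotFoundException: the class itself cannot be found.
                // NoClassDefFoundError (a LinkageError): the class was found, but a supertype or
                // dependency it needs could not be loaded by the defining class loader.
                results.put(name, e.getClass().getSimpleName());
            }
        }
        return results;
    }

    public static void main(String[] args) {
        // Pass the codec list as the first argument; the default is a placeholder for the demo.
        String codecs = args.length > 0 ? args[0]
            : "java.util.zip.Deflater,com.hadoop.compression.lzo.LzoCodec";
        check(codecs, CodecLoadCheck.class.getClassLoader())
            .forEach((name, status) -> System.out.println(name + " -> " + status));
    }
}
```

Run it with the codec list from core-site.xml as the first argument and a classpath resembling the one the processor sees. NiFi loads the Hadoop processors through their own NAR class loader, so a plain command-line classpath is only an approximation of what PutHDFS experiences.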

Created 05-19-2016 08:53 AM

@mclark @bbende I tried this solution and now it works! But what if I need a compression codec that is not listed in the processor's "Compression codec" property? For example, what if I need to compress with the LZO codec? Do I have to create my own processor for this purpose and send the compressed output (flowfile) to the PutHDFS processor with the "Compression codec" property set to "none", or is there an easier way to get this behaviour?

Thank you very much

Created 05-17-2016 05:01 PM

What version of NiFi is this?

Created 05-18-2016 08:10 AM

The version is 0.6.1, the latest.