Parquet data duplication

Labels: Apache Hadoop

Created 01-19-2016 10:31 AM

Hello All,

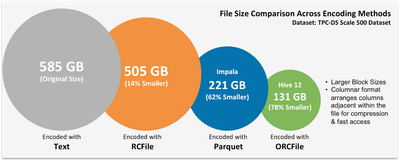

It's certain that Parquet files make OLAP queries faster because of their columnar format, but on the other hand the data lake is duplicated (raw data + Parquet data). Even if Parquet can be compressed, don't you think that duplicating all the data can cost a lot?
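To put rough numbers on this trade-off, here is a minimal Python sketch. The function name, the 8 TB raw size and the 0.25 Parquet-to-raw size ratio are all hypothetical values chosen for illustration, not measurements; the replication factor of 3 is the HDFS default.

```python
def storage_footprint_tb(raw_tb, parquet_ratio, replication=3, keep_raw=True):
    """Estimate the physical HDFS footprint in TB.

    raw_tb:        logical size of the raw data (hypothetical input)
    parquet_ratio: Parquet size divided by raw size (assumed; measure your own data)
    replication:   HDFS replication factor (3 is the HDFS default)
    keep_raw:      True if the raw copy stays in the data lake next to Parquet
    """
    if raw_tb < 0 or parquet_ratio < 0 or replication < 1:
        raise ValueError("sizes must be non-negative and replication at least 1")
    logical_tb = raw_tb * parquet_ratio      # compressed columnar copy
    if keep_raw:
        logical_tb += raw_tb                 # duplicated raw copy
    return logical_tb * replication          # every block is stored `replication` times


# Illustrative numbers only: 8 TB of raw data, Parquet at a quarter of that size.
raw_and_parquet = storage_footprint_tb(8, 0.25)
parquet_only = storage_footprint_tb(8, 0.25, keep_raw=False)
print(f"raw + Parquet: {raw_and_parquet} TB, Parquet only: {parquet_only} TB")
```

Under these assumptions the extra cost of the Parquet copy is small compared with the raw copy itself, so the real question is whether the raw data has to stay in the lake at all.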

Created on 01-19-2016 10:55 AM - edited 08-19-2019 05:08 AM

Created 01-19-2016 02:24 PM

I agree, but the ORC part will actually be duplicated, no?

Created 01-19-2016 02:36 PM

@Mehdi TAZI Better compression means lower storage cost. My suggestion is not to confuse HBase or NoSQL with HDFS. There are customers who use HDFS and Hive without using HBase. HBase is designed for special use cases where you have to access data in real time (you have mentioned this already) 🙂

Created 01-19-2016 03:18 PM

Yes, thanks ^^. In my case I'm using HBase because I'm handling a large number of small files.

Created 01-19-2016 03:27 PM

@Mehdi TAZI That sounds correct. I did connect with you on Twitter. Feel free to connect back and we can discuss in detail. I do believe that you are on the right track.

Created 01-20-2016 02:40 AM

In one of your deleted responses you mentioned that you duplicate date for Hive queries and use HBase for the small-files issue. You can actually map Hive to HBase and run analytics queries on top of HBase. That may not be the most efficient way, but you can also map HBase snapshots to Hive, which will be a lot better as far as HBase is concerned.
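As a rough illustration of the Hive-to-HBase mapping, the Python sketch below only builds the HiveQL text for an external table backed by Hive's HBase storage handler; it does not connect to Hive or HBase. The helper name, the table names and the column layout are hypothetical, while the storage handler class and the `hbase.columns.mapping` and `hbase.table.name` properties come from Hive's HBase integration.

```python
def hive_over_hbase_ddl(hive_table, hbase_table, columns):
    """Build HiveQL for an external Hive table mapped onto an existing HBase table.

    columns: list of (hive_column, hive_type, hbase_mapping) tuples, where
             hbase_mapping is ':key' for the row key or 'family:qualifier'.
    """
    col_defs = ", ".join(f"{name} {hive_type}" for name, hive_type, _ in columns)
    # Mapping entries are positional: the n-th entry maps the n-th Hive column.
    mapping = ",".join(hbase_mapping for _, _, hbase_mapping in columns)
    return (
        f"CREATE EXTERNAL TABLE {hive_table} ({col_defs})\n"
        "STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'\n"
        f"WITH SERDEPROPERTIES ('hbase.columns.mapping' = '{mapping}')\n"
        f"TBLPROPERTIES ('hbase.table.name' = '{hbase_table}')"
    )


# Hypothetical layout: the row key plus one column family holding the file content.
ddl = hive_over_hbase_ddl(
    "small_files_hive",
    "small_files",
    [("rowkey", "string", ":key"), ("content", "binary", "cf:content")],
)
print(ddl)
```

The generated statement would still have to be run through Hive (for example via Beeline), and the queries then read through HBase rather than from a columnar file format.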

Created 01-20-2016 09:44 AM

First of all, thanks for your answer. The duplication wasn't about the date but about the data in Parquet and HBase. Otherwise, using Hive over HBase is not really as good as having a columnar format... Have a nice day 🙂
