Password Encryption in Sqoop Job not working

Created on 09-20-2016 10:30 AM - edited 08-18-2019 04:53 AM

I am using the password encryption method (a password alias stored in a credential provider) in a Sqoop job for data ingestion into Hadoop. I pass -Dhadoop.security.credential.provider.path to point Sqoop at the keystore that holds the encrypted password.

But when I try to create the Sqoop job from the CLI, it is unable to parse the arguments. The command I used and the error I got are below.

CODE

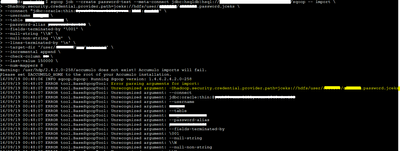

sqoop job --create password-test --meta-connect jdbc:hsqldb:hsql://<hostname>:<port>/sqoop -- import -Dhadoop.security.credential.provider.path=jceks://hdfs/user/<username>/<username>.password.jceks --connect "jdbc:oracle:thin:<hostname>:<Port>:<sid>" --username <username> --table <tablename> --password-alias <password-alias-name> --fields-terminated-by '\001' --null-string '\N' --null-non-string '\N' --lines-terminated-by '\n' --target-dir '/user/<username>/<staging loc>' --incremental append --check-column <colname> --last-value <value> --num-mappers 8

ERROR

Created 09-20-2016 10:54 AM

@Gayathri Reddy G - pass generic arguments such as -D immediately after "sqoop job", before the job arguments: sqoop job -Dhadoop.security.credential.provider.path=jceks ....

General syntax is

sqoop-job (generic-args) (job-args) [-- [subtool-name] (subtool-args)]
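Applied to the command in the question, that ordering might look like the sketch below. It moves the -D option directly after "sqoop job" and leaves everything else in place. The variable values (etl_user, the hosts, ports, SID, table, and alias) are hypothetical placeholders, not values from this thread, and the Oracle URL uses the usual thin-driver form with "@", which is an assumption about the intended connect string. By default the script only prints the command; set SQOOP=sqoop to actually create the job.

```shell
#!/bin/sh
# Dry run by default: prints the command instead of running it.
# Set SQOOP=sqoop in the environment to really create the job.
SQOOP="${SQOOP:-echo sqoop}"

# Hypothetical placeholder values (assumptions); replace with your own.
DB_USER="etl_user"
DB_HOST="db.example.com"
DB_PORT="1521"
DB_SID="ORCL"
TABLE_NAME="MY_TABLE"
PASSWORD_ALIAS="my.password.alias"
METASTORE_HOST="metastore.example.com"
METASTORE_PORT="16000"

# Order follows the general syntax:
#   generic args (-D ...) first, then job args (--create, --meta-connect),
#   then "--", the subtool name (import), and the subtool args.
create_password_test_job() {
  $SQOOP job \
    -Dhadoop.security.credential.provider.path="jceks://hdfs/user/${DB_USER}/${DB_USER}.password.jceks" \
    --create password-test \
    --meta-connect "jdbc:hsqldb:hsql://${METASTORE_HOST}:${METASTORE_PORT}/sqoop" \
    -- import \
    --connect "jdbc:oracle:thin:@${DB_HOST}:${DB_PORT}:${DB_SID}" \
    --username "${DB_USER}" \
    --password-alias "${PASSWORD_ALIAS}" \
    --table "${TABLE_NAME}" \
    --target-dir "/user/${DB_USER}/staging"
}

create_password_test_job
```

The remaining import options from the question (field and line terminators, --incremental, --check-column, --last-value, --num-mappers) are tool-specific arguments, so they would stay after "import" unchanged.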

Created 09-20-2016 10:58 AM

You must supply the generic arguments -conf, -D, and so on after the tool name but before any tool-specific arguments (such as --connect). Note that generic Hadoop arguments are preceded by a single dash character (-), whereas tool-specific arguments start with two dashes (--), unless they are single-character arguments such as -P.

https://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html#_using_generic_and_specific_arguments

Created 09-20-2016 11:40 AM

@njayakumar I passed the generic arguments first to the Sqoop job, and now it's working fine. Thanks.