Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Phoenix - Query - Error Size of hash cache (10...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Phoenix - Query - Error Size of hash cache (104857641 bytes) exceeds the maximum allowed size (104857600 bytes)

- Labels:

-

Apache Phoenix

Created 04-07-2016 05:01 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am using a query like the following to try to populate it. Note the “limit” statement I have included in the inner query. Without this, I get the familiar “Size of hash cache…exceeds the maximum” error that the USE_SORT_MERGE_JOIN hint is supposed to fix. (Note also that I am using that hint.) However, I need to join to all of the 2,393,244 records in the RPT_LAB_RSLT_PRE2 table to get my full data set. So the limit condition simply demonstrates that my query is good, as with the limit to 500 records, this statement completes successfully and quickly. Without it, it fails as noted above.

Query with Limit condition: (This query works)

UPSERT INTO MED_DOC_DM.RPT_LAB_RSLT_PRE3 SELECT /*+ USE_SORT_MERGE_JOIN */ rslt.ORD_PROC_ID, rslt.ORD_DT_REAL_NBR, rslt.ORD_RSLT_LN_NBR, rslt.PTNT_ENCNTR_CSN_ID, rslt.PTNT_ID, op2.ORD_PROC_DESCR, rslt.SRVC_AREA_ID, rslt.CMPNT_ID, NULL AS COMPONENT_NM, rslt.RSLT_TSP, rslt.ORD_VAL_MEAS_TXT, rslt.REF_UNIT_TXT, NULL AS CBC_IND, NULL AS HGB_IND, NULL AS PLT_IND, NULL AS WBC_IND, TO_CHAR(CURRENT_TIME()) FROM MED_DOC_DM.RPT_LAB_RSLT_PRE1 rslt JOIN ( SELECT op.ORD_PROC_ID, op.ORD_PROC_DESCR FROM MED_DOC_DM.RPT_LAB_RSLT_PRE2 op LIMIT 500 ) op2 ON rslt.ORD_PROC_ID = op2.ORD_PROC_ID; The below query doesn't have the LIMIT condition: 0: jdbc:phoenix:> UPSERT INTO MED_DOC_DM.RPT_LAB_RSLT_PRE3 . . . . . . . . . . . . . . . . . . . . . . .> SELECT /*+ USE_SORT_MERGE_JOIN */ . . . . . . . . . . . . . . . . . . . . . . .> rslt.ORD_PROC_ID, . . . . . . . . . . . . . . . . . . . . . . .> rslt.ORD_DT_REAL_NBR, . . . . . . . . . . . . . . . . . . . . . . .> rslt.ORD_RSLT_LN_NBR, . . . . . . . . . . . . . . . . . . . . . . .> rslt.PTNT_ENCNTR_CSN_ID, . . . . . . . . . . . . . . . . . . . . . . .> rslt.PTNT_ID, . . . . . . . . . . . . . . . . . . . . . . .> op.ORD_PROC_DESCR, . . . . . . . . . . . . . . . . . . . . . . .> rslt.SRVC_AREA_ID, . . . . . . . . . . . . . . . . . . . . . . .> rslt.CMPNT_ID, . . . . . . . . . . . . . . . . . . . . . . .> NULL AS COMPONENT_NM, . . . . . . . . . . . . . . . . . . . . . . .> rslt.RSLT_TSP, . . . . . . . . . . . . . . . . . . . . . . .> rslt.ORD_VAL_MEAS_TXT, . . . . . . . . . . . . . . . . . . . . . . .> rslt.REF_UNIT_TXT, . . . . . . . . . . . . . . . . . . . . . . .> NULL AS CBC_IND, . . . . . . . . . . . . . . . . . . . . . . .> NULL AS HGB_IND, . . . . . . . . . . . . . . . . . . . . . . .> NULL AS PLT_IND, . . . . . . . . . . . . . . . . . . . . . . .> NULL AS WBC_IND, . . . . . . . . . . . . . . . . . . . . . . .> TO_CHAR(CURRENT_TIME()) . . . . . . . . . . . . . . . . . . . . . . .> FROM MED_DOC_DM.RPT_LAB_RSLT_PRE1 rslt . . . . . . . . . . . . . . . . . . . . . . .> JOIN MED_DOC_DM.RPT_LAB_RSLT_PRE2 op ON rslt.ORD_PROC_ID = op.ORD_PROC_ID; Error: Encountered exception in sub plan [0] execution. (state=,code=0) java.sql.SQLException: Encountered exception in sub plan [0] execution. at org.apache.phoenix.execute.HashJoinPlan.iterator(HashJoinPlan.java:164) at org.apache.phoenix.execute.HashJoinPlan.iterator(HashJoinPlan.java:119) at org.apache.phoenix.compile.UpsertCompiler$2.execute(UpsertCompiler.java:670) at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:304) at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:296) at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53) at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:294) at org.apache.phoenix.jdbc.PhoenixStatement.execute(PhoenixStatement.java:1254) at sqlline.Commands.execute(Commands.java:822) at sqlline.Commands.sql(Commands.java:732) at sqlline.SqlLine.dispatch(SqlLine.java:808) at sqlline.SqlLine.begin(SqlLine.java:681) at sqlline.SqlLine.start(SqlLine.java:398) at sqlline.SqlLine.main(SqlLine.java:292) Caused by: org.apache.phoenix.join.MaxServerCacheSizeExceededException: Size of hash cache (104857641 bytes) exceeds the maximum allowed size (104857600 bytes) at org.apache.phoenix.join.HashCacheClient.serialize(HashCacheClient.java:109) at org.apache.phoenix.join.HashCacheClient.addHashCache(HashCacheClient.java:82) at org.apache.phoenix.execute.HashJoinPlan$HashSubPlan.execute(HashJoinPlan.java:346) at org.apache.phoenix.execute.HashJoinPlan$1.call(HashJoinPlan.java:143) at java.util.concurrent.FutureTask.run(FutureTask.java:262) at org.apache.phoenix.job.JobManager$InstrumentedJobFutureTask.run(JobManager.java:172) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) at java.lang.Thread.run(Thread.java:744)

Created 04-07-2016 09:04 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I assume if you run explain that he shows both times that he ignores the hint. I found this link where SquirrelJDBC was removing hints but it looks like you use sqlline and the link said it works there and the usage looks identical to what you do. ( Could you try it once without the upsert to see if that helps? )

The second possibility would obviously be to increase the hash cache? 100MB is not that much in todays day and age and 3m rows is not the world.

phoenix.query.maxServerCacheBytes

I hope he is smart enough to only build a cache from the two columns of the right side he actually needs. But I assume since its a description column, that is actually bigger. 200 bytes * 3m rows would be 600m of data.

Created 04-23-2016 04:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is some additional information about the issue Maharaj and I (Tom Forsyth) are sharing.

The table structures involved in the this failing query are provided below. Here are the counts of records in each table.

RPT_LAB_RSLT_PRE1 = 19,729,760

RPT_LAB_RSLT_PRE2 = 2,393,244

RPT_LAB_RSLT_PRE3 = 0 (count prior to upsert attempt)

Maharaj mentions a "limit" condition, but the query provided by him with the log results does not include that. Here is the version of the query that we tried which does run successfully with the limit condition. Ultimately, we need the query Maharaj has provided to run without issue, as we do not want to limit the number of procedure descriptions joined into the data set. The limited version of the query was just used to confirm that the query syntax is sound, and that the problem appears to be caused by trying to join two source tables having large record volumes.

UPSERT INTO MED_DOC_DM.RPT_LAB_RSLT_PRE3

SELECT /*+ USE_SORT_MERGE_JOIN */

rslt.ORD_PROC_ID,

rslt.ORD_DT_REAL_NBR,

rslt.ORD_RSLT_LN_NBR,

rslt.PTNT_ENCNTR_CSN_ID,

rslt.PTNT_ID,

op2.ORD_PROC_DESCR,

rslt.SRVC_AREA_ID,

rslt.CMPNT_ID,

NULL AS COMPONENT_NM,

rslt.RSLT_TSP,

rslt.ORD_VAL_MEAS_TXT,

rslt.REF_UNIT_TXT,

NULL AS CBC_IND,

NULL AS HGB_IND,

NULL AS PLT_IND,

NULL AS WBC_IND,

TO_CHAR(CURRENT_TIME())

FROM MED_DOC_DM.RPT_LAB_RSLT_PRE1 rslt

JOIN ( SELECT op.ORD_PROC_ID, op.ORD_PROC_DESCR

FROM MED_DOC_DM.RPT_LAB_RSLT_PRE2 op

LIMIT 500 ) op2

ON rslt.ORD_PROC_ID = op2.ORD_PROC_ID;

-- This table is the receiver of the upsert record set.

CREATE TABLE MED_DOC_DM.RPT_LAB_RSLT_PRE3

(

ORD_PROC_ID VARCHAR,

ORD_DT_REAL_NBR VARCHAR,

ORD_RSLT_LN_NBR VARCHAR,

PTNT_ENCNTR_CSN_ID VARCHAR,

PTNT_ID VARCHAR,

ORD_PROC_DESCR VARCHAR,

SRVC_AREA_ID VARCHAR,

CMPNT_ID VARCHAR,

CMPNT_NM VARCHAR,

RSLT_TSP VARCHAR,

ORD_VAL_MEAS_TXT VARCHAR,

REF_UNIT_TXT VARCHAR,

CBC_IND VARCHAR,

HGB_IND VARCHAR,

PLT_IND VARCHAR,

WBC_IND VARCHAR,

ROW_INSERT_TSP VARCHAR

CONSTRAINT PK_RPT_LAB_RSLT_PRE3 PRIMARY KEY( ORD_PROC_ID, ORD_DT_REAL_NBR, ORD_RSLT_LN_NBR ) )

IMMUTABLE_ROWS=true, COMPRESSION='SNAPPY', SALT_BUCKETS = 12;

-- This is the first source table being queried, providing the bulk of the data being populated to the receiver table.

CREATE TABLE MED_DOC_DM.RPT_LAB_RSLT_PRE1

(

ORD_PROC_ID VARCHAR,

ORD_DT_REAL_NBR VARCHAR,

ORD_RSLT_LN_NBR VARCHAR,

PTNT_ENCNTR_CSN_ID VARCHAR,

PTNT_ID VARCHAR,

SRVC_AREA_ID VARCHAR,

CMPNT_ID VARCHAR,

RSLT_TSP VARCHAR,

ORD_VAL_MEAS_TXT VARCHAR,

REF_UNIT_TXT VARCHAR,

ROW_INSERT_TSP VARCHAR

CONSTRAINT PK_RPT_LAB_RSLT_PRE1 PRIMARY KEY( ORD_PROC_ID, ORD_DT_REAL_NBR, ORD_RSLT_LN_NBR ) )

IMMUTABLE_ROWS=true, COMPRESSION='SNAPPY', SALT_BUCKETS = 12;

-- This table is being joined in as the 2nd of the source tables to effectively attach the procedure description to each

-- record from the 1st source table.

CREATE TABLE MED_DOC_DM.RPT_LAB_RSLT_PRE2

(

ORD_PROC_ID VARCHAR,

ORD_PROC_DESCR VARCHAR,

ROW_INSERT_TSP VARCHAR

CONSTRAINT PK_RPT_LAB_RSLT_PRE2 PRIMARY KEY( ORD_PROC_ID ) )

IMMUTABLE_ROWS=true, COMPRESSION='SNAPPY', SALT_BUCKETS = 12;

Created on 04-10-2019 05:20 PM - edited 08-18-2019 04:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Benjamin Leonhardi could you please tell me how to actually increase the hash cache? I agree 100MB is not much.

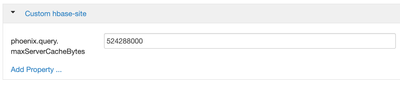

I'm running an HDP 2.6.5 cluster with HBase 1.1.2 and Phoenix enabled. I couldn't find the property phoenix.query.maxServerCacheBytes defined in hbase-site.xml config file, so I tried adding it in the "Custom hbase-site" section in Ambari and setting it to 500Mb as shown in the picture below:

Now I can see the variable defined in hbase-site.xml, but I am still getting the following error "...maximum allowed size (104857600 bytes)" even though I have changed that setting to 500MB).

Any ideas or suggestions?

Thanks in advance!

- « Previous

-

- 1

- 2

- Next »