Support Questions

- Cloudera Community

Phoenix and HBase connection

- Labels: Apache HBase, Apache Phoenix

Created 07-19-2017 10:54 PM

It seems I'm running into a connection issue between Phoenix and HBase. Below is the error:

```
[root@dsun5 bin]# ./sqlline.py dsun0.field.hortonworks.com:2181:/hbase-unsecure
Setting property: [incremental, false]
Setting property: [isolation, TRANSACTION_READ_COMMITTED]
issuing: !connect jdbc:phoenix:dsun0.field.hortonworks.com:2181:/hbase-unsecure none none org.apache.phoenix.jdbc.PhoenixDriver
Connecting to jdbc:phoenix:dsun0.field.hortonworks.com:2181:/hbase-unsecure
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/hdp/2.6.1.0-129/phoenix/phoenix-4.7.0.2.6.1.0-129-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/hdp/2.6.1.0-129/hadoop/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
17/07/19 22:46:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
17/07/19 22:46:11 WARN shortcircuit.DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.
Error: org.apache.hadoop.hbase.DoNotRetryIOException: Class org.apache.phoenix.coprocessor.MetaDataEndpointImpl cannot be loaded Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks
	at org.apache.hadoop.hbase.master.HMaster.warnOrThrowExceptionForFailure(HMaster.java:1878)
	at org.apache.hadoop.hbase.master.HMaster.sanityCheckTableDescriptor(HMaster.java:1746)
	at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:1652)
	at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:483)
	at org.apache.hadoop.hbase.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java:59846)
	at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2141)
	at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:112)
	at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:187)
	at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:167) (state=08000,code=101)
```

The phoenix-server.jar has been manually installed on the HBase Master.

Created on 07-19-2017 11:39 PM - edited 08-18-2019 01:44 AM

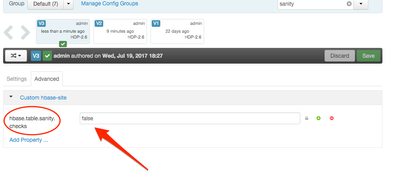

It appears all the dependency libraries are present on the Master as well as on all the RegionServer nodes, so I added the property 'hbase.table.sanity.checks' to hbase-site.xml in Ambari and set it to 'false'.

After that, I restarted the HBase Master and all the RegionServers, and Phoenix started working.
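For reference, this workaround amounts to a single property in hbase-site.xml. Because the next reply warns against leaving it in place, a quick audit can help confirm which hosts still carry it. The Python sketch below is a minimal, hypothetical helper (`read_hbase_property` and `sanity_checks_disabled` are illustrative names, not part of HBase or Ambari). It assumes the standard Hadoop `<configuration><property><name/><value/></property></configuration>` layout and treats a missing property as "not disabled", since HBase then applies its own default.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Union

SANITY_CHECKS_KEY = "hbase.table.sanity.checks"


def read_hbase_property(xml_data: Union[str, bytes], name: str) -> Optional[str]:
    """Return the value of the first property called `name`, or None if absent.

    Assumes the standard Hadoop configuration layout:
    <configuration><property><name>...</name><value>...</value></property></configuration>
    """
    root = ET.fromstring(xml_data)
    for prop in root.findall("property"):
        # Hand-edited files may carry whitespace around names and values.
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def sanity_checks_disabled(xml_data: Union[str, bytes]) -> bool:
    """True only if hbase.table.sanity.checks is explicitly set to false."""
    value = read_hbase_property(xml_data, SANITY_CHECKS_KEY)
    return value is not None and value.lower() == "false"
```

To check a host, pass the raw bytes of its hbase-site.xml, for example `Path("/etc/hbase/conf/hbase-site.xml").read_bytes()` (that path is an assumption and may differ on your cluster). Reading bytes rather than text lets the XML parser honor any encoding declaration in the file.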

Created 07-20-2017 02:52 PM

That's a very bad idea -- you should be figuring out why the class could not be loaded. Without that coprocessor, Phoenix will not function correctly.

Created 07-20-2017 04:52 PM

Agreed. I'm working on a quick demo, and Phoenix appears to be working fine as far as the demo is concerned. I will come back and spend more time on this after the demo. Thanks.

Created 07-20-2017 05:29 PM

Thanks for clarifying. Trivial use of Phoenix would likely work, but the full feature set of Phoenix will not. I appreciate your caveat that this should only be used for one-off/demo setups, not for production.

Created 07-20-2017 08:18 PM

You need to install phoenix-server.jar on all RegionServers and on the HBase Master, so that MetaDataEndpointImpl can be loaded.
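One way to act on this advice, and on the earlier point about finding out why the class could not be loaded, is to confirm on each Master and RegionServer host that some jar HBase loads actually contains the coprocessor class named in the error. The Python sketch below relies on a jar being a zip archive and assumes you point it at the directory HBase loads jars from. That directory and the helper names `jar_contains_class` and `jars_providing_class` are assumptions for illustration, not part of the original thread.

```python
import zipfile
from pathlib import Path
from typing import List, Union

# The class from the HMaster error, expressed as its path inside a jar.
COPROCESSOR_ENTRY = "org/apache/phoenix/coprocessor/MetaDataEndpointImpl.class"


def jar_contains_class(jar_path: Union[str, Path], entry: str = COPROCESSOR_ENTRY) -> bool:
    """Return True if the jar (a zip archive) contains the given class entry."""
    try:
        with zipfile.ZipFile(jar_path) as jar:
            return entry in jar.namelist()
    except zipfile.BadZipFile:
        # Corrupt or non-zip file with a .jar name: it cannot provide the class.
        return False


def jars_providing_class(lib_dir: Union[str, Path], entry: str = COPROCESSOR_ENTRY) -> List[Path]:
    """List the *.jar files directly under lib_dir that contain the class entry."""
    return sorted(p for p in Path(lib_dir).glob("*.jar") if jar_contains_class(p, entry))
```

Run it against the HBase library directory on every host; an empty result means that host cannot load `MetaDataEndpointImpl` from that directory. It only inspects jar contents, so it will not catch other causes, such as the jar not being on the HBase classpath or a version mismatch between the Phoenix client and server jars.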