Cloudera Community: Support Questions

Pig View Will Not Run, but Grunt CLI Will

Labels: Apache Ambari, Apache Pig

Created on 06-20-2016 04:25 PM - edited 08-18-2019 06:15 AM

Hello All,

To preface: I have seen multiple questions of a similar nature and have tried each of the suggested solutions, to no avail. I feel that a more in-depth explanation may also help others if they ever run into a similar issue.

The Pig View fails, but the Grunt CLI runs fine, so I suspect a Pig View configuration error.

I started by researching the jira located at https://issues.apache.org/jira/browse/AMBARI-12738

I am trying to use the Pig View in Ambari 2.2.1 on HDP 2.4.2 and am running into a multitude of errors.

The script that I am running is:

    logs = LOAD 'server_logs.error_logs' USING org.apache.hive.hcatalog.pig.HCatLoader();
    DUMP logs;

The job fails with a "Job failed to start" error, whose stack trace contains only:

    java.net.SocketTimeoutException: Read timed out
    java.net.SocketTimeoutException: Read timed out

The history logs within the view show only the following error:

    File /user/admin/pig/jobs/errlogs_20-06-2016-15-11-39/stderr not found.

I have tried this as both the hdfs and admin users, and the same problem remains. I have also tried simply loading a file with PigStorage('|'), but that returned the same error.

I receive the same error with both the Tez and MapReduce exec types.

The NameNode and ResourceManager are both in High Availability mode.

I have added the appropriate proxyuser configs to core-site (HDFS configuration) and hcat-site (Hive configuration), and I have restarted all services and Ambari Server.
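Proxyuser entries are easy to get subtly wrong, for example a `hosts` key without its matching `groups` key. As a minimal sketch, the hypothetical helper below checks a hand-copied dict of core-site properties for both `hadoop.proxyuser.<user>.hosts` and `hadoop.proxyuser.<user>.groups`. The users checked (`root` for an Ambari Server running as root, `hcat` for WebHCat) and the property values are assumptions; substitute whichever users your Ambari Server and WebHCat actually run as.

```python
from typing import Dict, Iterable, List


def missing_proxyuser_props(conf: Dict[str, str], users: Iterable[str]) -> List[str]:
    """Return the hadoop.proxyuser.* keys that are absent or empty for each user.

    `conf` is assumed to be a flat mapping of core-site property names to values,
    for example copied by hand from Ambari's HDFS configuration page.
    """
    missing = []
    for user in users:
        for suffix in ("hosts", "groups"):
            key = f"hadoop.proxyuser.{user}.{suffix}"
            if not conf.get(key, "").strip():
                missing.append(key)
    return missing


# Hypothetical example values; replace with your own core-site settings.
core_site = {
    "hadoop.proxyuser.root.hosts": "*",
    "hadoop.proxyuser.root.groups": "*",
    "hadoop.proxyuser.hcat.hosts": "*",
}

print(missing_proxyuser_props(core_site, ["root", "hcat"]))  # ['hadoop.proxyuser.hcat.groups']
```

An empty list only means the keys exist; it does not confirm that the listed hosts and groups are the right ones for your cluster.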

The stderr file is created in the `/user/admin/pig/jobs/errlogs_20-06-2016-15-11-39/` directory, but nothing is written to it. The `admin/pig/` directory has full 777 permissions (go+w, applied recursively with -R), yet the stderr file is created with only 644 permissions.
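The 644 mode on its own is expected rather than a symptom: in HDFS a directory's mode is not inherited by new files. A new file starts from a base mode of 666, and the creating client's umask (`fs.permissions.umask-mode`, 022 by default) is masked off. A small sketch of that arithmetic, assuming the client that writes stderr uses the default umask:

```python
HDFS_FILE_DEFAULT = 0o666  # base mode HDFS applies to newly created files
HDFS_DIR_DEFAULT = 0o777   # base mode for newly created directories
DEFAULT_UMASK = 0o022      # default value of fs.permissions.umask-mode


def effective_mode(base: int, umask: int = DEFAULT_UMASK) -> int:
    """Clear the umask bits from the base mode, as a POSIX-style create does."""
    return base & ~umask & 0o777


print(oct(effective_mode(HDFS_FILE_DEFAULT)))  # 0o644
print(oct(effective_mode(HDFS_DIR_DEFAULT)))   # 0o755
```

This is also why a recursive chmod on the parent directory does not change the mode of files created afterward.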

Against my better judgement, I issued

    hdfs dfs -chmod -R 777 /user

to check whether an underlying permissions issue on some file was to blame, but the outcome was the same.

The ResourceManager logs show that the application is submitted and remains in the RUNNING state even after Ambari has reported the job as "Failed to Start".

Yet `yarn application -list` shows no running applications.
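To cross-check the ResourceManager logs against `yarn application -list`, the ResourceManager REST API can be queried directly. A minimal sketch, assuming the RM web UI is on the default port 8088 (with RM HA, point it at the active RM; the host name below is a placeholder). The `/ws/v1/cluster/apps` endpoint returns `{"apps": null}` when no application matches the filter, which the parser handles.

```python
import json
import urllib.request
from typing import List, Tuple

# Placeholder address (assumption); replace with your active ResourceManager.
RM_URL = "http://rm-host.example.com:8088"


def parse_apps(payload: dict) -> List[Tuple[str, str, str]]:
    """Extract (id, name, state) tuples from a /ws/v1/cluster/apps response.

    The RM returns {"apps": null} when nothing matches the requested states.
    """
    apps = payload.get("apps") or {}
    return [(a["id"], a.get("name", ""), a["state"]) for a in apps.get("app", [])]


def list_active_apps(rm_url: str = RM_URL, timeout: float = 10.0) -> List[Tuple[str, str, str]]:
    """Fetch applications that are submitted, accepted, or running."""
    url = f"{rm_url}/ws/v1/cluster/apps?states=SUBMITTED,ACCEPTED,RUNNING"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_apps(json.load(resp))
```

Calling `list_active_apps()` while the view reports "Failed to Start" would show whether the submitted application is still known to the RM, and in which state.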

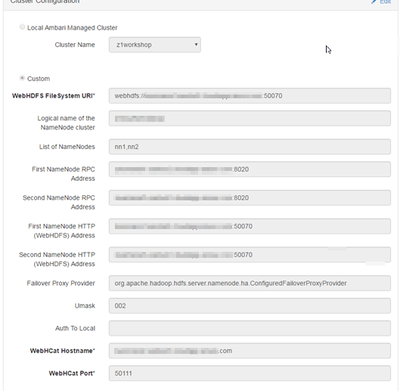

Has anyone found a solution to this problem? The stack traces are not helpful, since they output only 1-2 lines of information. My Pig View cluster configuration is as follows:

Created 06-21-2016 12:05 PM

From what I can tell, this ended up being a resource availability issue. I logged back in at midnight, when all users had left, and everything seemed to work correctly. Sometimes the Pig job still reports that it failed to start, yet its stderr/stdout show the results of the DUMP I am trying to perform. Since the script ran fine in the Grunt CLI, this was a very tricky problem to uncover.

Created 06-20-2016 04:49 PM

Do you have any ideas?

Created 06-20-2016 05:08 PM

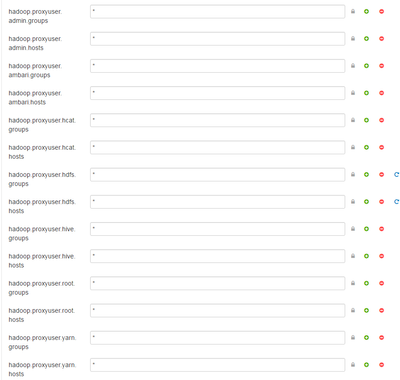

Can you provide your hadoop.proxyuser.* property settings?

Created on 06-20-2016 06:10 PM - edited 08-18-2019 06:15 AM

@Rahul Pathak This is the current listing of the hadoop.proxyuser.* properties:

Created 06-22-2016 04:39 PM

Hi @Colton Rodgers, I assume WebHCat (Templeton) is not responding fast enough to the call, hence the read timeout error. I believe the Grunt shell does not go through WebHCat. From your perspective, is there anything we could do better to help isolate this kind of issue?
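One way to test the WebHCat theory is to time WebHCat's status endpoint (`GET /templeton/v1/status`, served on port 50111 by default) while the cluster is busy and again when it is idle. A minimal sketch; the host name is a placeholder and the 10-second timeout is an arbitrary assumption, not the Pig View's actual timeout. A fast status reply does not rule out slow job submission, which also depends on YARN capacity.

```python
import http.client
import json
import time
import urllib.request
from dataclasses import dataclass

# Placeholder host (assumption); WebHCat listens on port 50111 by default.
WEBHCAT_URL = "http://webhcat-host.example.com:50111"


@dataclass
class ProbeResult:
    ok: bool          # True only if WebHCat answered with {"status": "ok", ...}
    elapsed_s: float  # wall-clock time spent on the request
    detail: str       # response body, or the exception that was raised


def probe_webhcat(base_url: str = WEBHCAT_URL, timeout: float = 10.0) -> ProbeResult:
    """Time GET /templeton/v1/status; the default timeout is an arbitrary choice."""
    url = f"{base_url}/templeton/v1/status"
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = json.load(resp)
        ok = isinstance(body, dict) and body.get("status") == "ok"
        return ProbeResult(ok, time.monotonic() - start, json.dumps(body))
    except (OSError, ValueError, http.client.HTTPException) as exc:
        # OSError covers URLError, HTTPError, refused connections and read timeouts;
        # ValueError covers a response body that is not valid JSON.
        return ProbeResult(False, time.monotonic() - start, repr(exc))
```

Running `probe_webhcat()` periodically during business hours and again after hours would show whether WebHCat latency tracks cluster load.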

Created 06-22-2016 05:42 PM

@rmolina The WebHCat response time did end up seeming to be the cause of the issue. I think it would help to be able to reserve a certain amount of memory specifically for WebHCat. This may already be partly possible with templeton.mapper.memory.mb, but that only covers the mapper memory, and I haven't looked much further into it. When no other users are on the cluster, the Pig View runs fine, but since that will not be the case for most production clusters we deploy, being able to set a reserve specifically in webhcat-env or webhcat-site could help ensure that resources are properly allocated.
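The resource-contention explanation can be checked with rough arithmetic. WebHCat runs each submitted Pig script inside a launcher (controller) job whose map task is sized by templeton.mapper.memory.mb, and that launcher then submits the actual Pig job, so both must fit on the cluster at the same time. A minimal sketch that reads `availableMB` from the ResourceManager's `/ws/v1/cluster/metrics` response; the container sizes are hypothetical inputs, and queue limits (e.g. Capacity Scheduler capacities) are ignored, so treat the result as a first approximation.

```python
from dataclasses import dataclass


@dataclass
class SubmissionSizes:
    """Hypothetical container sizes in MB; read the real values from your configs."""
    launcher_am_mb: int   # ApplicationMaster of the WebHCat launcher job
    launcher_map_mb: int  # launcher map task, i.e. templeton.mapper.memory.mb
    pig_am_mb: int        # ApplicationMaster of the Pig job itself (MR or Tez)
    pig_task_mb: int      # at least one task container for the Pig job

    def total_mb(self) -> int:
        return self.launcher_am_mb + self.launcher_map_mb + self.pig_am_mb + self.pig_task_mb


def has_headroom(cluster_metrics: dict, sizes: SubmissionSizes) -> bool:
    """Compare clusterMetrics.availableMB with the minimum memory the submission needs.

    Ignores queue capacity limits and container rounding, so the estimate is a
    lower bound on what the submission actually requires.
    """
    available = cluster_metrics["clusterMetrics"]["availableMB"]
    return available >= sizes.total_mb()


sizes = SubmissionSizes(launcher_am_mb=1024, launcher_map_mb=1024, pig_am_mb=1024, pig_task_mb=1024)
print(has_headroom({"clusterMetrics": {"availableMB": 3072}}, sizes))  # False
print(has_headroom({"clusterMetrics": {"availableMB": 8192}}, sizes))  # True
```

If the check fails during the day and passes at night, that would be consistent with the behaviour described in this thread, though it does not prove it.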