@stevenmatison

Thanks for responding!

I did think this was a file permissions issue at first, but I ran some tests.

Test 1: I chown'd/chmod'd the underlying files to match ORC files that Presto could read from (those not written by PutHive3Streaming). That didn't work.

Test 2: I ran NiFi's SelectHive3QL (which supports inserts). This wrote the data with file permissions and ownership similar to the other processor's, and Presto is able to read that data.

Were you able to get it to work?

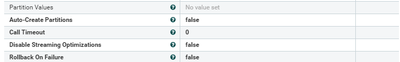

Additionally, here's a snippet of PutHive3Streaming (minus specifics like the table, paths, and DBs). I'm using an AvroReader to write.