Cloudera Community: Support Questions

Problem with Zoomdata connection to Hive (on Tez) during tutorial – Getting Started with HDP Lab 7

- Labels: Hortonworks Data Platform (HDP)

Created on 06-02-2016 11:08 AM - edited 08-19-2019 03:56 AM

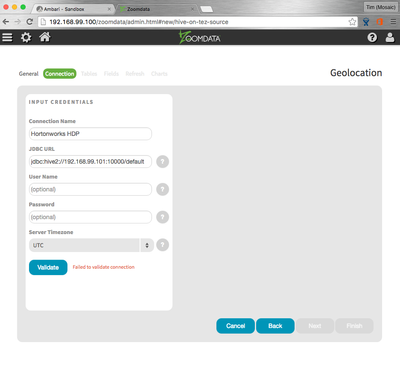

I'm running through Lab 7 of the Getting Started with HDP tutorial and consistently get errors when trying to validate the Hive (via Tez) JDBC URL in Zoomdata. Hive (and the rest of HDP) is running well in a sandbox VM.

I have the following installed and working as per the instructions:

- Sandbox VM (on VirtualBox OS X) with network settings as per section 7.3.

- Docker (on VirtualBox OS X) running Zoomdata container.

All HDP services (including Hive) have started correctly, and on the Zoomdata side I have set connector-HIVE_ON_TEZ=true as the supervisor user. When I try to define the JDBC URL in the Zoomdata UI (as the admin user), I receive the error 'Failed to validate connection' (see the attached image).

Can anyone suggest what I need to look at to get this to work? I am wondering whether this is a VM network issue.

Many thanks!

Created 06-03-2016 02:26 AM

Hi @Tim Apps,

This is Andrew here from the Zoomdata Support team. A colleague told me you were running into some issues, and I wanted to lend a helping hand! 🙂 You can likely get more details about the actual error from the zoomdata.log and zoomdata-errors.log files, which are in the /opt/zoomdata/logs directory by default. If you are running Zoomdata through Docker, you first need to SSH into the Docker container itself before you can find these log files.
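The log check above can be scripted. This is a minimal sketch, assuming it runs inside the Zoomdata container with read access to the default log directory named in this thread. The rule for what counts as an error line (the word ERROR or a fully qualified Java exception class name) is an assumption, not a description of Zoomdata's log format, and `scan_logs` is a hypothetical helper.

```python
import re
from pathlib import Path

# Assumed heuristic: a fully qualified Java class name ending in Exception or
# Error, e.g. java.lang.NullPointerException.
EXCEPTION_RE = re.compile(r"\b(?:[a-z_]\w*\.)+[A-Z]\w*(?:Exception|Error)\b")

# File names and default directory as given in the thread.
LOG_FILES = ("zoomdata.log", "zoomdata-errors.log")


def scan_logs(log_dir="/opt/zoomdata/logs", files=LOG_FILES):
    """Return (file name, line number, line) for lines that look like errors."""
    hits = []
    for name in files:
        path = Path(log_dir) / name
        if not path.is_file():
            continue  # a missing log file is skipped rather than reported
        with path.open(encoding="utf-8", errors="replace") as fh:
            for lineno, line in enumerate(fh, start=1):
                if "ERROR" in line or EXCEPTION_RE.search(line):
                    hits.append((name, lineno, line.rstrip("\n")))
    return hits
```

Calling `scan_logs()` with no arguments scans the default directory; pass another path if the container mounts the logs elsewhere.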

Another quick test is to try connecting to Hive on Tez with some other SQL query tool from the same Docker container where Zoomdata runs. For example, Zoomdata uses a hive2 JDBC driver similar to Beeline's, so if you are unable to connect using Beeline, Zoomdata will not be able to connect either. If Beeline (or the other SQL query tool) throws errors, then depending on the error, it may well be a network issue that is preventing the connection from resolving correctly.
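The Beeline test above can be wrapped in a small script. This is a sketch under assumptions: `beeline` is on the PATH inside the Zoomdata container (the thread does not say it is installed there), and a failed connection produces a non-zero exit status. Some older Beeline releases may not report failures that way, so the captured output is returned for inspection too. The helper names and the default query are hypothetical; `run` is injectable so the logic can be exercised without a Hive server.

```python
import subprocess


def beeline_command(jdbc_url, query="show databases;"):
    """Build the argv for a non-interactive Beeline run (-u URL, -e QUERY)."""
    return ["beeline", "-u", jdbc_url, "-e", query]


def beeline_smoke_test(jdbc_url, query="show databases;",
                       run=subprocess.run, timeout=60):
    """Return (ok, output); ok is True when Beeline exits with status 0."""
    try:
        result = run(beeline_command(jdbc_url, query),
                     capture_output=True, text=True, timeout=timeout)
    except FileNotFoundError:
        return False, "beeline not found on PATH"
    except subprocess.TimeoutExpired:
        # A hang is reported separately, since it often points at networking.
        return False, f"no answer within {timeout}s (possible network issue)"
    return result.returncode == 0, (result.stdout or "") + (result.stderr or "")
```

For example, `ok, output = beeline_smoke_test("jdbc:hive2://192.168.99.101:10000/default")`, using the same URL that was entered in Zoomdata.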

If the above doesn't work for you, I suggest opening a support ticket with us and we'd be glad to help you further investigate this issue. You can access our Support home page here: http://www.zoomdata.com/support/

Thanks!

Andrew

Created 06-03-2016 11:34 AM

Thanks very much @Andrew Tsao,

Your advice was very useful. I looked at the logs and there is an NPE in the HiveStatement class (stack trace attached: zoomdata-hive-jdbc-stacktrace.txt). Looking more closely at the code (hive-jdbc-0.13.1), it seems to come down to a problem creating a TExecuteStatementReq request (as far as I can tell, this is a request to Hive's remote Thrift server).

As you suggested, I tried connecting to Hive from another client (SQL Developer). This worked with the same JDBC URL.

Unfortunately, as I'm new to Hive, I don't quite know how to resolve this. I'll raise a support ticket as suggested.

I really appreciate the help, many thanks.

Cheers, Tim

Created 06-03-2016 02:24 PM

I am experiencing this problem too. I have a Hortonworks sandbox running on a virtual machine in Azure and Zoomdata running in a Docker container. @Tim Apps, if you find a solution, could you post it?

Created 06-07-2016 04:02 PM

Hi @Joeri Smits,

I haven't got an answer yet, I'm afraid. I tracked the problem down to an NPE in Atlas running on my HDP Sandbox (see below). Someone from the Zoomdata support team said they would help investigate. Let's see 🙂

The URL was jdbc:hive2://192.168.99.101:10000/default, and I was able to connect to Hive via SQL Developer, so I'm not entirely sure what's wrong at the moment.
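When checking connectivity by hand, it helps to pull the host and port out of the JDBC URL. This sketch handles only the simple jdbc:hive2://host:port/database form used in this thread and ignores anything after the first ';'. The fallbacks (port 10000, database "default") are assumptions based on HiveServer2's usual defaults, and `parse_hive2_url` is a hypothetical helper.

```python
from urllib.parse import urlsplit


def parse_hive2_url(url):
    """Return (host, port, database) for a jdbc:hive2:// URL."""
    prefix = "jdbc:"
    if not url.startswith(prefix + "hive2://"):
        raise ValueError(f"not a hive2 JDBC URL: {url!r}")
    # Drop "jdbc:" so urlsplit sees an ordinary hive2:// URL, and cut off
    # any ";key=value" session parameters.
    rest = url[len(prefix):].split(";", 1)[0]
    parts = urlsplit(rest)
    host = parts.hostname
    port = parts.port or 10000  # assumed HiveServer2 default port
    database = parts.path.lstrip("/") or "default"
    return host, port, database
```

For the URL above this yields the host and port to test from the Zoomdata container, for example with Telnet.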

If I find the answer I will share.

Regards, Tim

    2016-06-03 13:45:58,888 ERROR [HiveServer2-Background-Pool: Thread-1193]: ql.Driver (SessionState.java:printError(932)) - FAILED: Hive Internal Error: java.lang.NullPointerException(null)
    java.lang.NullPointerException
        at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.registerDatabase(HiveMetaStoreBridge.java:109)
        at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.registerTable(HiveMetaStoreBridge.java:270)
        at org.apache.atlas.hive.hook.HiveHook.registerProcess(HiveHook.java:321)
        at org.apache.atlas.hive.hook.HiveHook.fireAndForget(HiveHook.java:214)
        at org.apache.atlas.hive.hook.HiveHook.run(HiveHook.java:172)
        at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1585)
        at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1254)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1118)
        at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1113)
        at org.apache.hive.service.cli.operation.SQLOperation.runQuery(SQLOperation.java:154)
        at org.apache.hive.service.cli.operation.SQLOperation.access$100(SQLOperation.java:71)
        at org.apache.hive.service.cli.operation.SQLOperation$1$1.run(SQLOperation.java:206)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)
        at org.apache.hive.service.cli.operation.SQLOperation$1.run(SQLOperation.java:218)
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)
        at java.util.concurrent.FutureTask.run(FutureTask.java:262)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
        at java.lang.Thread.run(Thread.java:745)

Created 06-08-2016 11:46 PM

It will not work out of the box, because the HDP 2.4 Sandbox has an Atlas bridge that throws the error in a Hive post-execution hook. This happens for any query involving a "dummy" database (e.g., "select 1").

In the Ambari configuration for Hive, locate the hive.exec.post.hooks property. It will have a value like this:

org.apache.hadoop.hive.ql.hooks.ATSHook, org.apache.atlas.hive.hook.HiveHook

Remove the second hook (the Atlas hook) so that the property reads only:

org.apache.hadoop.hive.ql.hooks.ATSHook
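The property edit is a simple string transformation on a comma-separated list of hook classes. This sketch assumes you copy the current value out of Ambari and paste the result back; it does not call any Ambari API, and `remove_hook` is a hypothetical helper. The class name it drops is the one shown above.

```python
ATLAS_HOOK = "org.apache.atlas.hive.hook.HiveHook"


def remove_hook(post_hooks, hook=ATLAS_HOOK):
    """Return the hive.exec.post.hooks value without the given hook class."""
    # Split on commas, trim whitespace, and drop empty entries and the hook.
    kept = [h.strip() for h in post_hooks.split(",")
            if h.strip() and h.strip() != hook]
    return ",".join(kept)
```

Because the function only filters, running it on an already-fixed value leaves that value unchanged.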

Finally, restart the Hive server. You can verify the fix even without Zoomdata by running the following in Hive (via Ambari or Beeline):

select count(1);

If the query is working, Zoomdata should have no problem connecting to HDP's Hive.

Hope this helps!

Created 06-09-2016 09:15 AM

Thank you very much; the configuration change you suggested worked perfectly. I was able to reproduce the issue in the Hive view in Ambari, as you suggested. Once I had changed the configuration and restarted the various services, I was able to finish the tutorial.

Many thanks!

Tim

Created on 06-15-2016 01:21 AM - edited 08-19-2019 03:56 AM

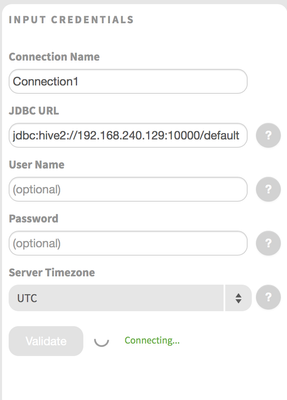

Hi, I've tried William's suggestion but it is not working for me. My problem is slightly different from Tim's: instead of 'Failed to validate connection', I get a validation attempt that never finishes (see the image above).

Any ideas?

Br, Rafael

Created 06-15-2016 03:42 AM

Hi @Rafael Gomez,

If the connection is stalling indefinitely, my first inclination would be to check the components and the networking between the two.

- Is Hive up and running on HDP? Can you connect to it with a client like Beeline from outside the sandbox?

- Can the Zoomdata host reach the Hive server (e.g., verify via Telnet)?

What do the Zoomdata logs say? Eventually there should be something to the effect of Connection Timeout or Connection Refused. Please share any relevant stack traces. Thanks!
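The Telnet check in the second question can also be done from Python on the Zoomdata host. This is a minimal sketch: `port_reachable` is a hypothetical helper, and a successful TCP connection only shows that the port is open. It does not speak the Hive/Thrift protocol, so it cannot prove that HiveServer2 will answer queries.

```python
import socket


def port_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port opens within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts (socket.timeout is an OSError
        # subclass) and name-resolution failures.
        return False
```

For example, `port_reachable("192.168.240.129", 10000)` from inside the Zoomdata container. A result of False with a long delay suggests a timeout (routing or firewall), while a quick False usually means the connection was refused.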

Created 06-15-2016 10:18 AM

Hi William,

Yesterday, while the connection was stalling, Beeline could not connect either. I didn't keep a copy of the output.

Then, unfortunately, my Mac demanded a restart today. Something must have happened when I put it to sleep last night.

So the HDP Sandbox and the Zoomdata Docker container had to be started again.

The first thing I did after a successful start was to test with Beeline, and today it worked:

    [root@sandbox ~]# beeline -u jdbc:hive2://192.168.240.129:10000
    WARNING: Use "yarn jar" to launch YARN applications.
    Connecting to jdbc:hive2://192.168.240.129:10000
    Connected to: Apache Hive (version 1.2.1000.2.4.0.0-169)
    Driver: Hive JDBC (version 1.2.1000.2.4.0.0-169)
    Transaction isolation: TRANSACTION_REPEATABLE_READ
    Beeline version 1.2.1000.2.4.0.0-169 by Apache Hive
    0: jdbc:hive2://192.168.240.129:10000>

Then, back in Zoomdata's UI, I could validate the jdbc:hive connection without problems this time.

I don't recall seeing anything wrong when I checked the Zoomdata logs last night, but I wanted to look again. Unfortunately, there are no logs for yesterday in the /opt/zoomdata/logs/ folder of the Docker container. I am sure I SSHed into the right container.

The only thing I can do is collect the logs immediately the next time this problem occurs, if it does, and report back to the community.

Thanks a lot for your help.

Br, Rafael