
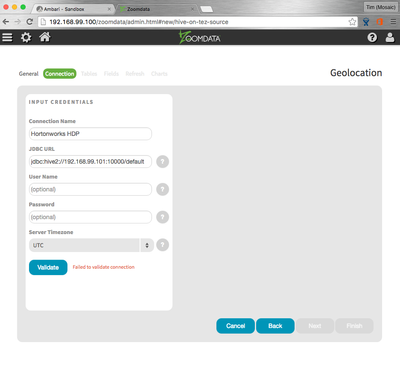

Problem with Zoomdata connection to Hive (on Tez) during tutorial – Getting Started with HDP Lab 7

Labels: Hortonworks Data Platform (HDP)

Created on 06-02-2016 11:08 AM - edited 08-19-2019 03:56 AM

I'm running through Lab 7 of the Getting Started with HDP tutorial and consistently get errors when trying to validate the Hive (via Tez) JDBC URL within Zoomdata. Hive (and the rest of HDP) is running well in a sandbox VM.

I have the following installed and working as per the instructions:

- Sandbox VM (VirtualBox on OS X) with network settings as per section 7.3.

- Docker (VirtualBox on OS X) running the Zoomdata container.

All HDP services (including Hive) have started correctly, and on the Zoomdata side I have set connector-HIVE_ON_TEZ=true as the supervisor. When I try to define the JDBC URL in the Zoomdata UI (as the admin user), I receive the error 'Failed to validate connection' (see attached image).

Can anyone suggest what I need to look at to get this to work? I am wondering whether this is a VM network issue.
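One way to test the network theory before digging into Hive is to check, from inside the Zoomdata container, whether the host and port in the JDBC URL accept TCP connections at all. The Python sketch below is an illustration, not part of the tutorial: `hive_endpoint` and `port_reachable` are hypothetical helpers, and the code assumes a URL of the form `jdbc:hive2://host:port/db`, falling back to HiveServer2's default binary port 10000 when no port is given.

```python
import socket
from urllib.parse import urlparse

# HiveServer2's default binary-transport port; an assumption if your sandbox differs.
DEFAULT_HS2_PORT = 10000


def hive_endpoint(jdbc_url: str) -> tuple[str, int]:
    """Extract (host, port) from a jdbc:hive2:// URL (hypothetical helper)."""
    prefix = "jdbc:"
    if not jdbc_url.startswith(prefix):
        raise ValueError(f"not a JDBC URL: {jdbc_url!r}")
    # Strip "jdbc:" so urlparse sees e.g. hive2://host:10000/default;params
    parsed = urlparse(jdbc_url[len(prefix):])
    if parsed.scheme != "hive2" or not parsed.hostname:
        raise ValueError(f"not a hive2 URL: {jdbc_url!r}")
    return parsed.hostname, parsed.port or DEFAULT_HS2_PORT


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    This only proves the port is open; it says nothing about whether
    HiveServer2 will accept the session or run a query.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures
        return False
```

For example, `port_reachable(*hive_endpoint("jdbc:hive2://sandbox.hortonworks.com:10000/default"))`, where the hostname is a placeholder for whatever your Zoomdata URL uses. `False` points at the VirtualBox/Docker networking; `True` only shows the port is open, so the failure would lie higher up, in HiveServer2 or the query it runs.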

Many thanks!

Created 06-08-2016 11:46 PM

It will not work out of the box, because the HDP 2.4 Sandbox includes an Atlas bridge that throws an error in the Hive post-execution hook. This happens for any query that involves a "dummy" database (e.g., "select 1").

In Ambari's Hive configuration, locate the hive.exec.post.hooks property. It will have a value like this:

org.apache.hadoop.hive.ql.hooks.ATSHook, org.apache.atlas.hive.hook.HiveHook

Remove the second hook (the Atlas hook) so that the property reads only:

org.apache.hadoop.hive.ql.hooks.ATSHook
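The edit itself amounts to dropping one class name from a comma-separated list, which matters if you keep Hive settings as text (for example, an exported config) rather than editing them in the Ambari UI. A minimal Python sketch of that transformation; `remove_hook` is a hypothetical helper, and it assumes the value is a plain comma-separated list as shown above. It only rewrites the string: the new value still has to be saved in Ambari and Hive restarted.

```python
ATLAS_HOOK = "org.apache.atlas.hive.hook.HiveHook"


def remove_hook(hooks_value: str, hook_class: str = ATLAS_HOOK) -> str:
    """Drop one class from a comma-separated hive.exec.post.hooks value.

    Whitespace around entries is trimmed, empty entries are discarded,
    and the remaining hooks keep their original order.
    """
    entries = (h.strip() for h in hooks_value.split(","))
    kept = [h for h in entries if h and h != hook_class]
    return ",".join(kept)
```

Applied to the value above, it returns just `org.apache.hadoop.hive.ql.hooks.ATSHook`; a value without the Atlas hook passes through unchanged.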

Lastly, restart the Hive server. You can verify the fix even without Zoomdata by running the following in Hive (via Ambari or Beeline):

select count(1);

If the query succeeds, Zoomdata should have no problem connecting to HDP's Hive.
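To run that check non-interactively (for example from a script on the sandbox), Beeline's `-u` (JDBC URL), `-n` (user name) and `-e` (query) options can be wrapped as below. This is a sketch under stated assumptions: `beeline_check_cmd` and `hive_query_ok` are hypothetical helpers, and it assumes `beeline` is on the `PATH`, that connecting as the `hive` user is acceptable on your sandbox, and that Beeline exits with a non-zero status when the query fails.

```python
import subprocess

CHECK_QUERY = "select count(1);"


def beeline_check_cmd(jdbc_url: str, user: str = "hive") -> list[str]:
    """Build the argv for a one-shot Beeline run of the check query."""
    # -u: JDBC URL to connect to, -n: user name, -e: execute this query and exit
    return ["beeline", "-u", jdbc_url, "-n", user, "-e", CHECK_QUERY]


def hive_query_ok(jdbc_url: str, user: str = "hive", timeout: float = 120.0) -> bool:
    """Run the check query; True if Beeline exits with status 0 (assumed success)."""
    try:
        result = subprocess.run(
            beeline_check_cmd(jdbc_url, user),
            capture_output=True, text=True, timeout=timeout,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        # beeline is not installed here, or the server never answered in time
        return False
    return result.returncode == 0
```

Passing the argv as a list avoids shell quoting problems with the semicolon in the query; if `hive_query_ok` returns `False`, `result.stderr` is where Beeline's error message would appear.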

Hope this helps!

Created 06-15-2016 07:07 PM

No problem, Rafael; glad it works for you now.

It sounds like it was a container networking problem: for whatever reason, the containers couldn't establish a connection to each other. Hopefully it doesn't manifest again!
