PutHiveQL Exception

Created on 12-08-2016 04:01 PM - edited 08-19-2019 12:54 AM

Hi

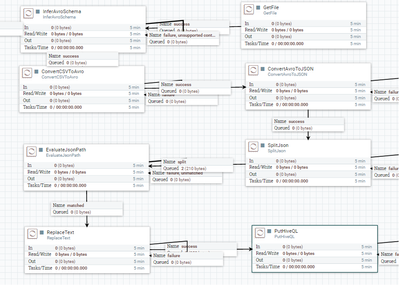

I have deployed HDP 2.5 inside VirtualBox, and I have NiFi 1.0.0 installed on my machine. Earlier I tried to read and write files to HDFS and ran into connectivity issues:

PutHDFS/ GetHDFS Issue with data transfer

That issue is still unsolved, so I thought I would move forward and try my luck with Hive.

I tried to execute an INSERT statement using PutHiveQL, but I think I am back to square one, as I am still getting errors.

First I tried to connect to Hive inside the sandbox using the following URL:

jdbc://hive2://localhost:10000/default

which gives the following error:

2016-12-08 13:15:42,902 ERROR [Timer-Driven Process Thread-3] o.a.nifi.dbcp.hive.HiveConnectionPool
org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL 'jdbc://hive2://localhost:10000/default'
at org.apache.commons.dbcp.BasicDataSource.createConnectionFactory(BasicDataSource.java:1452) ~[commons-dbcp-1.4.jar:1.4]
Caused by: java.sql.SQLException: No suitable driver
at java.sql.DriverManager.getDriver(DriverManager.java:315) ~[na:1.8.0_111]
at org.apache.commons.dbcp.BasicDataSource.createConnectionFactory(BasicDataSource.java:1437) ~[commons-dbcp-1.4.jar:1.4]
... 22 common frames omitted
2016-12-08 13:15:42,902 ERROR [Timer-Driven Process Thread-3] o.a.nifi.processors.hive.SelectHiveQL SelectHiveQL[id=de0cad2e-0158-1000-fd58-ecc08beadcb0] Unable to execute HiveQL select query SELECT * FROM drivers WHERE event='overspeed'; due to org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL 'jdbc://hive2://localhost:10000/default'. No FlowFile to route to failure: org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL 'jdbc://hive2://localhost:10000/default'
2016-12-08 13:15:42,903 ERROR [Timer-Driven Process Thread-3] o.a.nifi.processors.hive.SelectHiveQL
org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL 'jdbc://hive2://localhost:10000/default'
at org.apache.nifi.dbcp.hive.HiveConnectionPool.getConnection(HiveConnectionPool.java:273) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
Caused by: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL 'jdbc://hive2://localhost:10000/default'
at org.apache.commons.dbcp.BasicDataSource.createConnectionFactory(BasicDataSource.java:1452) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.createDataSource(BasicDataSource.java:1371) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.getConnection(BasicDataSource.java:1044) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.nifi.dbcp.hive.HiveConnectionPool.getConnection(HiveConnectionPool.java:269) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
... 19 common frames omitted
Caused by: java.sql.SQLException: No suitable driver

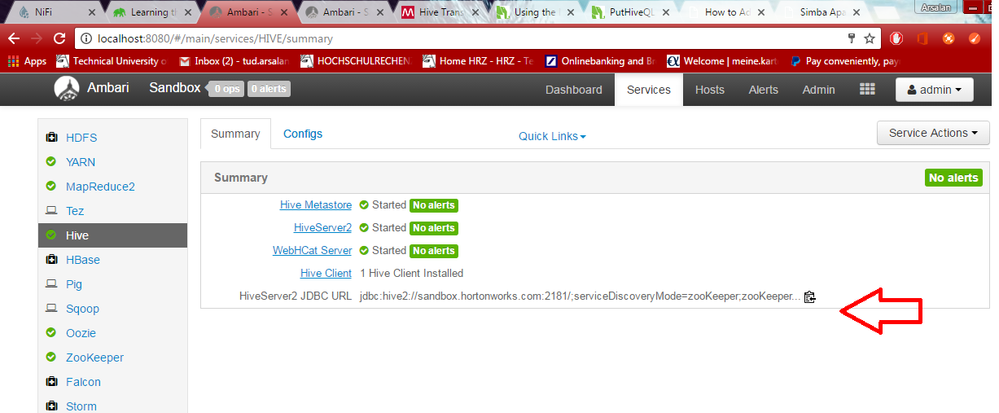

Then I checked the Ambari dashboard and found the following URL:

jdbc:hive2://sandbox.hortonworks.com:2181/;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2

With this URL I think I have moved forward, but I am now stuck with this error:

2016-12-08 13:20:31,101 ERROR [Timer-Driven Process Thread-10] o.a.nifi.processors.hive.SelectHiveQL SelectHiveQL[id=de0cad2e-0158-1000-fd58-ecc08beadcb0] SelectHiveQL[id=de0cad2e-0158-1000-fd58-ecc08beadcb0] failed to process due to java.lang.NullPointerException; rolling back session: java.lang.NullPointerException
2016-12-08 13:20:31,104 ERROR [Timer-Driven Process Thread-10] o.a.nifi.processors.hive.SelectHiveQL
java.lang.NullPointerException: null
at org.apache.thrift.transport.TSocket.open(TSocket.java:170) ~[hive-exec-1.2.1.jar:1.2.1]
at org.apache.thrift.transport.TSaslTransport.open(TSaslTransport.java:266) ~[hive-exec-1.2.1.jar:1.2.1]
at org.apache.thrift.transport.TSaslClientTransport.open(TSaslClientTransport.java:37) ~[hive-exec-1.2.1.jar:1.2.1]
at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:204) ~[hive-jdbc-1.2.1.jar:1.2.1]
at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:176) ~[hive-jdbc-1.2.1.jar:1.2.1]
at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:105) ~[hive-jdbc-1.2.1.jar:1.2.1]
at org.apache.commons.dbcp.DriverConnectionFactory.createConnection(DriverConnectionFactory.java:38) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.PoolableConnectionFactory.makeObject(PoolableConnectionFactory.java:582) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.validateConnectionFactory(BasicDataSource.java:1556) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.createPoolableConnectionFactory(BasicDataSource.java:1545) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.createDataSource(BasicDataSource.java:1388) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.getConnection(BasicDataSource.java:1044) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.nifi.dbcp.hive.HiveConnectionPool.getConnection(HiveConnectionPool.java:269) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_111]
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_111]
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_111]
at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_111]
at org.apache.nifi.controller.service.StandardControllerServiceProvider$1.invoke(StandardControllerServiceProvider.java:177) ~[nifi-framework-core-1.0.0.jar:1.0.0]
at com.sun.proxy.$Proxy132.getConnection(Unknown Source) ~[na:na]
at org.apache.nifi.processors.hive.SelectHiveQL.onTrigger(SelectHiveQL.java:158) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1064) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.0.0.jar:1.0.0]
at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_111]
at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_111]
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_111]
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_111]
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_111]
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_111]
at java.lang.Thread.run(Thread.java:745) [na:1.8.0_111]

Can anyone please help me with this? I have tried so many things, but it seems impossible to get data through to the sandbox in the virtual machine. What should I do? Any direction would be helpful. I have disabled the firewall.

The ReplaceText processor yields the following statement:

INSERT INTO driveres (truckid,driverid,city,state,velocity,event) VALUES ('N02','N02','Lahore','Punjab','100','overspeeding');

which runs successfully in the Ambari Hive view. I have also downloaded the Hive configs from Ambari and added them to the connection pool.

My last option would be to run NiFi inside HDP.

Created 12-08-2016 04:11 PM

Your original URL, "jdbc://hive2://localhost:10000/default", has extra slashes between jdbc: and hive2. It should instead be

jdbc:hive2://localhost:10000/default

The problem with the ZooKeeper version of the URL is a known issue (NIFI-2575). I would recommend correcting the original URL and using that; port 10000 should already be opened/forwarded on the sandbox.
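
The prefix rule described in this answer can be checked before a URL is pasted into the connection pool. The following is a minimal sketch, not NiFi's or Hive's actual code: the class `HiveUrlCheck` and the method `looksLikeHive2Url` are hypothetical names, and the pattern assumes, as the answer implies, that a HiveServer2 URL must begin with `jdbc:hive2://`. When no registered driver accepts a URL, `java.sql.DriverManager` reports "No suitable driver", which matches the stack trace in the question.

```java
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of NiFi or the Hive JDBC driver.
final class HiveUrlCheck {
    // Assumption: a HiveServer2 JDBC URL starts with "jdbc:hive2://".
    private static final Pattern HIVE2_URL = Pattern.compile("jdbc:hive2://.*");

    // Returns true only if the whole string matches the assumed prefix rule.
    static boolean looksLikeHive2Url(String url) {
        return url != null && HIVE2_URL.matcher(url).matches();
    }

    public static void main(String[] args) {
        String[] candidates = {
            "jdbc://hive2://localhost:10000/default", // extra slashes after "jdbc:"
            "jdbc:hive2://localhost:10000/default"    // corrected form from the answer
        };
        for (String url : candidates) {
            System.out.println("'" + url + "' -> " + looksLikeHive2Url(url));
        }
    }
}
```

Running `main` prints each candidate with `false` for the first URL and `true` for the second. The check is deliberately strict about the start of the string, so stray characters in front of `jdbc:` are rejected as well.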

Created 12-08-2016 04:48 PM

I had used the format specified by @Timothy Spann in his post: PutHiveQl

I have changed the URL to the one you specified and now get the following error:

2016-12-08 17:39:31,880 INFO [Timer-Driven Process Thread-1] o.a.nifi.dbcp.hive.HiveConnectionPool HiveConnectionPool[id=de18f06b-0158-1000-758a-359b8b94716b] Simple Authentication
2016-12-08 17:39:31,881 ERROR [Timer-Driven Process Thread-1] o.a.nifi.dbcp.hive.HiveConnectionPool HiveConnectionPool[id=de18f06b-0158-1000-758a-359b8b94716b] Error getting Hive connection
2016-12-08 17:39:31,883 ERROR [Timer-Driven Process Thread-1] o.a.nifi.dbcp.hive.HiveConnectionPool
org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL ' jdbc:hive2://localhost:10000/default'
at org.apache.commons.dbcp.BasicDataSource.createConnectionFactory(BasicDataSource.java:1452) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.createDataSource(BasicDataSource.java:1371) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.commons.dbcp.BasicDataSource.getConnection(BasicDataSource.java:1044) ~[commons-dbcp-1.4.jar:1.4]
at org.apache.nifi.dbcp.hive.HiveConnectionPool.getConnection(HiveConnectionPool.java:269) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
at sun.reflect.GeneratedMethodAccessor467.invoke(Unknown Source) ~[na:na]
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_111]
at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_111]
at org.apache.nifi.controller.service.StandardControllerServiceProvider$1.invoke(StandardControllerServiceProvider.java:177) [nifi-framework-core-1.0.0.jar:1.0.0]
at com.sun.proxy.$Proxy132.getConnection(Unknown Source) [na:na]
at org.apache.nifi.processors.hive.PutHiveQL.onTrigger(PutHiveQL.java:152) [nifi-hive-processors-1.0.0.jar:1.0.0]
at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) [nifi-api-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1064) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.0.0.jar:1.0.0]
at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.0.0.jar:1.0.0]
at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_111]
at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_111]
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_111]
at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_111]
at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_111]
at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_111]
at java.lang.Thread.run(Thread.java:745) [na:1.8.0_111]
Caused by: java.sql.SQLException: No suitable driver
at java.sql.DriverManager.getDriver(DriverManager.java:315) ~[na:1.8.0_111]
at org.apache.commons.dbcp.BasicDataSource.createConnectionFactory(BasicDataSource.java:1437) ~[commons-dbcp-1.4.jar:1.4]
... 21 common frames omitted
2016-12-08 17:39:31,883 ERROR [Timer-Driven Process Thread-1] o.apache.nifi.processors.hive.PutHiveQL PutHiveQL[id=de0c64f1-0158-1000-b1fd-9c33f9c8e7e0] PutHiveQL[id=de0c64f1-0158-1000-b1fd-9c33f9c8e7e0] failed to process due to org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL ' jdbc:hive2://localhost:10000/default'; rolling back session: org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL ' jdbc:hive2://localhost:10000/default'
2016-12-08 17:39:31,885 ERROR [Timer-Driven Process Thread-1] o.apache.nifi.processors.hive.PutHiveQL
org.apache.nifi.processor.exception.ProcessException: org.apache.commons.dbcp.SQLNestedException: Cannot create JDBC driver of class 'org.apache.hive.jdbc.HiveDriver' for connect URL ' jdbc:hive2://localhost:10000/default'
at org.apache.nifi.dbcp.hive.HiveConnectionPool.getConnection(HiveConnectionPool.java:273) ~[nifi-hive-processors-1.0.0.jar:1.0.0]
at sun.reflect.GeneratedMethodAccessor467.invoke(Unknown Source) ~[na:na]

Created 12-08-2016 05:01 PM

Looks like your connect URL has a space as the first character? For the second URL, that might be one of two things. The first (less likely) is that you want your client to use hostnames to resolve name nodes (see my answer here). However I would've expected an error message like the one in that question, not the one you're seeing.

I think the problem with your second URL is that Apache NiFi (specifically the Hive processors) doesn't necessarily work with HDP 2.5 out of the box, because Apache NiFi ships with Apache components (such as Hive 1.2.1), whereas HDP 2.5 has slightly different versions. I would try Hortonworks DataFlow (HDF) rather than Apache NiFi, as the former ships with the HDP versions of Hive.

Created 12-08-2016 05:25 PM

Thank you so much! It is working now. I am surprised that even a space at the beginning would prevent the processor from working.

Created 12-08-2016 05:49 PM

Yeah, we should probably trim that URL before using it. Please feel free to write a Jira for that if you like.
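
The trimming idea mentioned here could look roughly like the sketch below. It is only an illustration of the suggestion, not code from NiFi: `HiveUrlNormalizer` and `normalize` are hypothetical names, and the `jdbc:hive2://` prefix check is an assumption carried over from the corrected URL earlier in this thread.

```java
// Hypothetical helper for illustration; not part of NiFi's HiveConnectionPool.
final class HiveUrlNormalizer {
    // Assumption: a HiveServer2 JDBC URL starts with this prefix.
    private static final String HIVE2_PREFIX = "jdbc:hive2://";

    // Strips surrounding whitespace, then rejects URLs without the assumed prefix.
    static String normalize(String configuredUrl) {
        if (configuredUrl == null) {
            throw new IllegalArgumentException("Connection URL must not be null");
        }
        String url = configuredUrl.trim();
        if (!url.startsWith(HIVE2_PREFIX)) {
            throw new IllegalArgumentException(
                "Expected a URL starting with " + HIVE2_PREFIX + " but got '" + url + "'");
        }
        return url;
    }

    public static void main(String[] args) {
        // The leading space mirrors the URL quoted in the error log above.
        String cleaned = normalize(" jdbc:hive2://localhost:10000/default");
        System.out.println("[" + cleaned + "]");
    }
}
```

Failing fast with a descriptive message is a design choice for this sketch: it surfaces the malformed value directly instead of leaving the user to decode a "No suitable driver" stack trace.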

Created 06-08-2018 07:50 AM

Hey @Arsalan Siddiqi, I'm having the same problem as you, but I don't have any space at the beginning or end of the URL. I'm still getting the same error. Can you please specify if you made any other changes?

Created 12-10-2016 01:20 AM

Hi Matt

I had used the suggested URL as mentioned in this tutorial:

I have updated the URL and now get the following error, which I will explore further:

2016-12-08 17:21:30,527 WARN [LeaseRenewer:ARSI@sandbox.hortonworks.com:8020] org.apache.hadoop.hdfs.LeaseRenewer Failed to renew lease for [DFSClient_NONMAPREDUCE_620414152_117, DFSClient_NONMAPREDUCE_-186846005_117] for 3487 seconds. Will retry shortly ...
java.net.ConnectException: Call From PCARSI01/10.20.115.30 to sandbox.hortonworks.com:8020 failed on connection exception: java.net.ConnectException: Connection refused: no further information; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
at sun.reflect.GeneratedConstructorAccessor92.newInstance(Unknown Source) ~[na:na]
at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_111]
at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_111]
at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client.call(Client.java:1473) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client.call(Client.java:1400) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232) ~[hadoop-common-2.6.2.jar:na]
at com.sun.proxy.$Proxy128.renewLease(Unknown Source) ~[na:na]
at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.renewLease(ClientNamenodeProtocolTranslatorPB.java:571) ~[hadoop-hdfs-2.6.2.jar:na]
at sun.reflect.GeneratedMethodAccessor308.invoke(Unknown Source) ~[na:na]
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_111]
at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_111]
at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102) ~[hadoop-common-2.6.2.jar:na]
at com.sun.proxy.$Proxy129.renewLease(Unknown Source) ~[na:na]
at org.apache.hadoop.hdfs.DFSClient.renewLease(DFSClient.java:882) ~[hadoop-hdfs-2.6.2.jar:na]
at org.apache.hadoop.hdfs.LeaseRenewer.renew(LeaseRenewer.java:423) [hadoop-hdfs-2.6.2.jar:na]
at org.apache.hadoop.hdfs.LeaseRenewer.run(LeaseRenewer.java:448) [hadoop-hdfs-2.6.2.jar:na]
at org.apache.hadoop.hdfs.LeaseRenewer.access$700(LeaseRenewer.java:71) [hadoop-hdfs-2.6.2.jar:na]
at org.apache.hadoop.hdfs.LeaseRenewer$1.run(LeaseRenewer.java:304) [hadoop-hdfs-2.6.2.jar:na]
at java.lang.Thread.run(Thread.java:745) [na:1.8.0_111]
Caused by: java.net.ConnectException: Connection refused: no further information
at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[na:1.8.0_111]
at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) ~[na:1.8.0_111]
at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:608) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:706) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:369) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client.getConnection(Client.java:1522) ~[hadoop-common-2.6.2.jar:na]
at org.apache.hadoop.ipc.Client.call(Client.java:1439) ~[hadoop-common-2.6.2.jar:na]
... 16 common frames omitted
2016-12-08 17:21:32,559 WARN [LeaseRenewer:ARSI@sandbox.hortonworks.com:8020] org.apache.hadoop.hdfs.LeaseRenewer Failed to renew lease for [DFSClient_NONMAPREDUCE_620414152_117, DFSClient_NONMAPREDUCE_-186846005_117] for 3489 seconds. Will retry shortly ...
java.net.ConnectException: Call From PCARSI01/10.20.115.30 to sandbox.hortonworks.com:8020 failed on connection exception: java.net.ConnectException: Connection refused: no further information; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
at sun.reflect.GeneratedConstructorAccessor92.newInstance(Unknown Source) ~[na:na]