PutHiveQL and PutHiveStreaming processors in Apache NiFi are very slow

- Labels: Apache NiFi

Created on 06-20-2017 06:52 AM - edited 08-17-2019 08:41 PM

I am using PutHiveQL to insert data into a Hive table, but it is very slow: each row takes approximately 2 to 3 seconds to insert.

Is there a way to increase the speed of insertion?

It took around 3 days to insert 15000 rows!

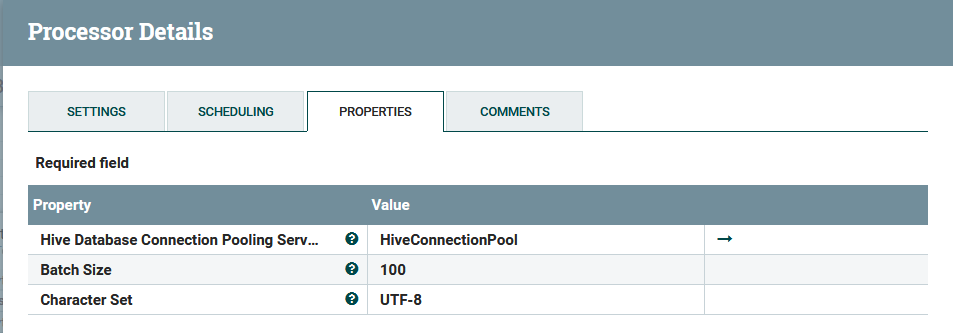

Please find below the PutHiveQL processor configuration:

Complete flow:

Created 06-20-2017 07:47 PM

What version of NiFi/HDF are you using? As of NiFi 1.2.0 / HDF 3.0.0, PutHiveQL can accept multiple statements in one flow file, so if you are currently dealing with one INSERT statement per flow file, try MergeContent to batch them up into a single flow file. This should increase performance, but since Hive is an auto-commit database, PutHiveQL is probably not the best choice for large/fast ingest needs. You may be better off putting the data into HDFS and creating/loading a table from it.

For PutHiveStreaming, there is a known issue that can reduce performance; it was also fixed in NiFi 1.2.0 / HDF 3.0.0.
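To illustrate the batching idea, here is a minimal Python sketch of what merging does to the flow file content: many single INSERT statements become a few delimiter-separated batches. This is not NiFi code. The function name `batch_statements`, the table name `t`, and the `;` delimiter are assumptions for illustration, so check which delimiter your PutHiveQL version expects.

```python
from typing import Iterable, Iterator, List


def batch_statements(
    statements: Iterable[str], batch_size: int, delimiter: str = ";"
) -> Iterator[str]:
    """Group single SQL statements into delimiter-separated batches.

    Hypothetical helper: it mimics the content that a merge step would
    produce, one string per merged flow file.
    """
    batch: List[str] = []
    for stmt in statements:
        # Drop surrounding whitespace and any trailing delimiter so the
        # delimiter is not doubled when the statements are joined.
        cleaned = stmt.strip().rstrip(delimiter).strip()
        if not cleaned:
            continue
        batch.append(cleaned)
        if len(batch) == batch_size:
            yield (delimiter + "\n").join(batch)
            batch = []
    if batch:
        # Emit the final, possibly smaller, batch.
        yield (delimiter + "\n").join(batch)


if __name__ == "__main__":
    inserts = [f"INSERT INTO t VALUES ({i});" for i in range(5)]
    for merged in batch_statements(inserts, batch_size=2):
        print(merged)
        print("--- end of merged flow file ---")
```

With a batch size of 1000, 15000 single-statement flow files would become 15 merged flow files, which cuts the per-flow-file overhead. Each INSERT is still executed and committed by Hive on its own, which is why loading from HDFS remains the better option for large volumes.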

Created 02-10-2019 10:04 PM

What is the most straightforward way to load data into Hive tables using NiFi? We use Hive 1.1 and have ingested the data and put it into HDFS as Avro files. @Matt Burgess

Created 02-11-2019 06:28 PM

In an upcoming release you'll be able to use Hive 1.1 processors, so in your case you'd want to keep what you have (Avro in HDFS) and use PutHive_1_1QL to issue a LOAD DATA or CREATE EXTERNAL TABLE statement so Hive can see your data.
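As a rough sketch of the two statements mentioned above, the Python below only builds the HiveQL text that would be placed in a flow file. The table name, column definitions, and HDFS paths are made-up placeholders, and the helper names are hypothetical. It assumes your Hive version accepts `STORED AS AVRO` with an explicit column list.

```python
from typing import Dict


def create_external_avro_table(table: str, columns: Dict[str, str], hdfs_dir: str) -> str:
    """Build a CREATE EXTERNAL TABLE statement over an HDFS directory of Avro files.

    Hypothetical helper: `columns` maps column names to Hive types.
    """
    cols = ", ".join(f"`{name}` {hive_type}" for name, hive_type in columns.items())
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} ({cols}) "
        f"STORED AS AVRO LOCATION '{hdfs_dir}'"
    )


def load_data(table: str, hdfs_path: str) -> str:
    """Build a LOAD DATA statement; Hive moves the files at hdfs_path into the table."""
    return f"LOAD DATA INPATH '{hdfs_path}' INTO TABLE {table}"


if __name__ == "__main__":
    # Placeholder names and paths, not taken from the thread.
    print(create_external_avro_table(
        "events", {"id": "BIGINT", "payload": "STRING"}, "/data/events"
    ))
    print(load_data("events", "/staging/events/part-0001.avro"))
```

The external-table form leaves the Avro files where they are and only registers them with Hive, while LOAD DATA INPATH moves the files into the table's storage location. Which one fits depends on whether other jobs still need the files at their original path.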