Support Questions

Cloudera Community


Pyspark + PyCharm - java.util.NoSuchElementException: key not found: _PYSPARK_DRIVER_CALLBACK_HOST

Labels: Apache Falcon, Apache Spark, Gateway

Created on 05-16-2019 12:14 AM - edited 09-16-2022 07:23 AM


Hello,

I'm using PyCharm 2019, Python 2.7.6 and Apache Spark 2.2.0 for PySpark development, and I run into issues whenever I try to run any Spark code.

Code:

```python
#! /bin/python
import os
import sys
from pyspark.sql import SparkSession
from pyspark import SparkConf, SparkContext
import pandas as pd
import numpy as np
import pyarrow.parquet as pq
import pyarrow as pa
import pprint as pp
from pyspark.sql.functions import col, lit
import datetime

# strftime needs %-directives; __format__("yyyymmdd") would return the literal text "yyyymmdd"
today = datetime.date.today().strftime("%Y%m%d")
print(" formatted date => " + today)

# d = {'a':1, 'b':2, 'c':3}
d = [(1, "20190101", "99990101", "false"), (2, "20190501", "99990101", "false"),
     (3, "20190101", "20190414", "false"), (3, "20190415", "99990101", "false")]

print(os.environ)
print(" spark_home -> ", os.environ['SPARK_HOME'])
print(" pythonpath -> ", os.environ['PYTHONPATH'])
print(" PYSPARK_SUBMIT_ARGS -> ", os.environ['PYSPARK_SUBMIT_ARGS'])
os.environ['PYSPARK_SUBMIT_ARGS'] = "--master spark://Karans-MacBook-Pro-4.local:7077 pyspark-shell"
print(" JAVA_HOME => " + os.environ['JAVA_HOME'])
print(" PATH => " + os.environ['PATH'])
print(" CLASSPATH => " + os.environ['CLASSPATH'])

# pyspark is already imported at the top of this script, so these appends do not change
# which pyspark package is used (sys.path.append also adds the entries last).
sys.path.append(os.path.join(os.environ['SPARK_HOME'], "python"))
sys.path.append(os.path.join(os.environ['SPARK_HOME'], "python/lib/py4j-0.10.4-src.zip"))

# ERROR OBTAINED WHEN I CREATE SparkSession object
spark = SparkSession.builder.master("local").appName("CreatingDF").getOrCreate()
sparkdf = spark.createDataFrame(d, ['pnalt', 'begda', 'endda', 'ref_flag'])
print(sparkdf)
```

Error (when I create the SparkSession object):

```
19/05/15 16:58:06 ERROR SparkUncaughtExceptionHandler: Uncaught exception in thread Thread[main,5,main]
java.util.NoSuchElementException: key not found: _PYSPARK_DRIVER_CALLBACK_HOST
    at scala.collection.MapLike$class.default(MapLike.scala:228)
    at scala.collection.AbstractMap.default(Map.scala:59)
    at scala.collection.MapLike$class.apply(MapLike.scala:141)
    at scala.collection.AbstractMap.apply(Map.scala:59)
    at org.apache.spark.api.python.PythonGatewayServer$$anonfun$main$1.apply$mcV$sp(PythonGatewayServer.scala:50)
    at org.apache.spark.util.Utils$.tryOrExit(Utils.scala:1262)
    at org.apache.spark.api.python.PythonGatewayServer$.main(PythonGatewayServer.scala:37)
    at org.apache.spark.api.python.PythonGatewayServer.main(PythonGatewayServer.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:497)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:755)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Traceback (most recent call last):
  File "/Users/karanalang/PycharmProjects/Python2/karan/python2/falcon/py_createDF.py", line 55, in <module>
    spark = SparkSession.builder.master("local").appName("CreatingDF").getOrCreate()
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/sql/session.py", line 173, in getOrCreate
    sc = SparkContext.getOrCreate(sparkConf)
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/context.py", line 367, in getOrCreate
    SparkContext(conf=conf or SparkConf())
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/context.py", line 133, in __init__
    SparkContext._ensure_initialized(self, gateway=gateway, conf=conf)
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/context.py", line 316, in _ensure_initialized
    SparkContext._gateway = gateway or launch_gateway(conf)
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/java_gateway.py", line 46, in launch_gateway
    return _launch_gateway(conf)
  File "/Users/karanalang/PycharmProjects/Python2/venv/lib/python2.7/site-packages/pyspark/java_gateway.py", line 108, in _launch_gateway
    raise Exception("Java gateway process exited before sending its port number")
Exception: Java gateway process exited before sending its port number
```

Forums on the internet suggest that this is a version-related issue; however, I'm not sure how to debug or fix it.

Any help on this is really appreciated.

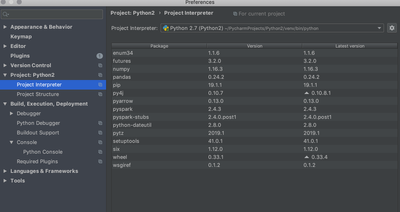

I'm also attaching a screenshot of the PyCharm project interpreter settings.

Created 05-16-2019 12:19 AM


Hi @saichand akella, any ideas on this?

Created 05-16-2019 12:21 AM


@Ravi Kumar Lanke, @saichand akella, any ideas on this?

Created 05-16-2019 11:43 AM


It may be a version compatibility issue.
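One way to test that suggestion: the Java stack trace shows `PythonGatewayServer` (started from the Spark installation) looking up `_PYSPARK_DRIVER_CALLBACK_HOST` and not finding it, while the Python traceback shows `pyspark` being loaded from the PyCharm venv's `site-packages` rather than from `$SPARK_HOME/python`. Comparing the version of the imported `pyspark` package with the version of the Spark installation would confirm or rule out a mismatch. The sketch below is hypothetical (the helper names are not part of PySpark) and assumes the Spark 2.x binary layout, in which `$SPARK_HOME/jars` contains a `spark-core_<scala-version>-<spark-version>.jar` file; run it with the same interpreter PyCharm uses.

```python
import glob
import os
import re


def spark_home_version(spark_home):
    """Guess the Spark version of a SPARK_HOME from its spark-core jar file name.

    Assumes the Spark 2.x binary layout: $SPARK_HOME/jars/spark-core_<scala>-<spark>.jar
    Returns None if SPARK_HOME is unset or no such jar is found.
    """
    if not spark_home:
        return None
    pattern = os.path.join(spark_home, "jars", "spark-core_*.jar")
    for path in sorted(glob.glob(pattern)):
        match = re.match(r"spark-core_[\d.]+-(.+)\.jar$", os.path.basename(path))
        if match:
            return match.group(1)
    return None


def versions_match(python_version, jvm_version):
    """Compare the first three version components; an unknown version counts as a mismatch."""
    if python_version is None or jvm_version is None:
        return False
    return python_version.split(".")[:3] == jvm_version.split(".")[:3]


if __name__ == "__main__":
    jvm_version = spark_home_version(os.environ.get("SPARK_HOME"))
    try:
        import pyspark
        py_location = pyspark.__file__
        # __version__ may be missing on very old pyspark builds, so fall back to None
        py_version = getattr(pyspark, "__version__", None)
    except ImportError:
        py_location, py_version = None, None
    print("pyspark imported from: %s" % py_location)
    print("pyspark version:       %s" % py_version)
    print("SPARK_HOME version:    %s" % jvm_version)
    if not versions_match(py_version, jvm_version):
        print("Python and JVM sides differ (or could not be determined)")
```

If the two versions differ, a reasonable next step to try would be aligning them, for example by installing a `pyspark` package in the venv whose version matches the Spark installation; this is a suggestion, not a confirmed fix for this thread.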

Created 05-17-2019 05:56 AM


@Ravi Kumar Lanke, thanks for the response.

When I run the same code on the command line, it works fine; when I run it in PyCharm, it fails.

$PATH, $JAVA_HOME, $SPARK_HOME and $PYTHONPATH are the same on the command line and in PyCharm, and I've also tried setting them manually.

On the PySpark command line:

```
>>> os.environ['PATH']
'/Library/Frameworks/Python.framework/Versions/2.7/bin:/usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin:/opt/X11/bin:/usr/local/bin/scala/bin:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/bin:/Users/karanalang/Documents/Technology/kafka/kafka_2.11-0.9.0.1/bin:/Users/karanalang/Documents/Technology/maven/apache-maven-3.3.9//bin:/bin:/Users/karanalang/Documents/Technology/Storm/zookeeper/zookeeper-3.4.8/bin:/Users/karanalang/Documents/Technology/kafka/confluent-3.2.2/bin:/usr/local/etc/:/Users/karanalang/Documents/Technology/kafka/confluent-3.2.2/bin:/Users/karanalang/Documents/Technology/Gradle/gradle-4.3.1/bin:/Users/karanalang/Documents/Technology/HadoopInstallation-local/hadoop-2.6.5/bin:/Users/karanalang/Documents/Technology/HadoopInstallation-local/hadoop-2.6.5/sbin'
>>> os.environ['JAVA_HOME']
'/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/'
>>> os.environ['SPARK_HOME']
'/Users/karanalang/Documents/Technology/IncortaAnalytics/spark'
>>> os.environ['PYTHONPATH']
'/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/lib/py4j-0.10.4-src.zip:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/lib/py4j-0.10.4-src.zip:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/:'
```

In PyCharm:

```
(' spark_home -> ', '/Users/karanalang/Documents/Technology/IncortaAnalytics/spark')
JAVA_HOME => /Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/
PATH => /Users/karanalang/PycharmProjects/Python2/venv/bin:/Library/Frameworks/Python.framework/Versions/2.7/bin:/usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin:/opt/X11/bin:/usr/local/bin/scala/bin:/Users/karanalang/Documents/Technology/Hive/apache-hive-3.1.0-bin/bin:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/bin:/Users/karanalang/Documents/Technology/kafka/kafka_2.11-0.9.0.1/bin:/Users/karanalang/Documents/Technology/maven/apache-maven-3.3.9//bin:/bin:/Users/karanalang/Documents/Technology/Storm/zookeeper/zookeeper-3.4.8/bin:/Users/karanalang/Documents/Technology/kafka/confluent-3.2.2/bin:/usr/local/etc/:/Users/karanalang/Documents/Technology/kafka/confluent-3.2.2/bin:/Users/karanalang/Documents/Technology/Gradle/gradle-4.3.1/bin:/Users/karanalang/Documents/Technology/HadoopInstallation-local/hadoop-2.6.5/bin:/Users/karanalang/Documents/Technology/HadoopInstallation-local/hadoop-2.6.5/sbin
PYTHONPATH -> /Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/lib/py4j-0.10.4-src.zip:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/lib/py4j-0.10.4-src.zip:/Users/karanalang/Documents/Technology/IncortaAnalytics/spark/python/:
```
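Long PATH strings are hard to compare by eye. A small hypothetical helper (not part of PySpark or PyCharm) that lists the entries found in only one of two PATH-style values, assuming `:` as the separator as on macOS:

```python
def path_only_in(path_a, path_b, sep=":"):
    """Return (only_in_a, only_in_b): entries present in one PATH-style string but not the other.

    Empty entries (e.g. from a trailing or doubled separator) are ignored; order is preserved.
    """
    entries_a = [p for p in path_a.split(sep) if p]
    entries_b = [p for p in path_b.split(sep) if p]
    only_in_a = [p for p in entries_a if p not in entries_b]
    only_in_b = [p for p in entries_b if p not in entries_a]
    return only_in_a, only_in_b
```

Applied to the two PATH values posted above, it would report two entries that appear only in PyCharm (`/Users/karanalang/PycharmProjects/Python2/venv/bin` and `/Users/karanalang/Documents/Technology/Hive/apache-hive-3.1.0-bin/bin`) and none that appear only on the command line, so the two environments are close but not identical.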

In PyCharm (based on similar issues on Stack Overflow and other forums), I've tried setting PYSPARK_SUBMIT_ARGS, but it doesn't seem to work:

```python
os.environ['PYSPARK_SUBMIT_ARGS'] = "--master spark://Karans-MacBook-Pro-4.local:7077 pyspark-shell"
```

Any input on which versions might be mismatched, or what the root cause might be?

Created 05-19-2019 06:45 PM


The above question and the entire thread below were originally posted in the Community Help track. On Sun May 19 18:43 UTC 2019, a member of the HCC moderation staff moved it to the Data Science & Advanced Analytics Track. The Community Help Track is intended for questions about using the HCC site itself.
