Support Questions

- Cloudera Community

- Support

- Support Questions

- Python connect to Hadoop using Hive with Kerberos ...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Python connect to Hadoop using Hive with Kerberos authentication

- Labels:

-

Apache Hive

Created 11-07-2016 04:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

conn_config ={'krb_host':'hostname','krb_service':'hive'}

pyhs2.connect(host='hostname',

port=10000,

authMechanism="KERBEROS",

password="********",

user='hostname@XXXXXXXXXXXXXXXXXXXX')Error Encountered:

user='XXXXXXX@XXXXXXXXXXXXXXXXXXXX') File "build\bdist.win-amd64\egg\pyhs2\__init__.py", line 7, in connect File "build\bdist.win-amd64\egg\pyhs2\connections.py", line 46, in __init__ File "build\bdist.win-amd64\egg\pyhs2\cloudera\thrift_sasl.py", line 66, in open thrift.transport.TTransport.TTransportException: Could not start SASL: Error in sasl_client_start (-4) SASL(-4): no mechanism available: Unable to find a callback: 2

checked below string but still have same problem http://stackoverflow.com/questions/29814207/python-connect-to-hive-use-pyhs2-and-kerberos-authentica... Can please some one help to solve ths problem. Your help is much appreciated

Created 11-07-2016 04:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Artem Ervits can you please help me to solve this issue.

it is much appreciated

Created 11-07-2016 04:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Dale Bradman can you please help me to solve this issue.

it is much appreciated

Created 11-09-2016 01:53 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this may be a dependency issue, please look at the proposed fixes in the following threads

https://github.com/BradRuderman/pyhs2/issues/41

https://github.com/BradRuderman/pyhs2/issues/19

http://stackoverflow.com/questions/30705576/python-cannot-connect-hiveserver2

Created on 01-07-2017 08:56 AM - edited 08-18-2019 04:10 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. You must install kerberos client on your PC.

2. You mast get and kinit a ticket for kerberos.

3. And then you must install Pyhive model.

4. Then test.py like this:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# hive util with hive server2

from impala.dbapi import connect

class HiveClient:

def __init__(self, db_host, port, authMechanism, user, password, database, kbservice):

self.conn = connect(host=db_host,

port=port,

auth_mechanism=authMechanism,

user=user,

password=password,

database=database,

kerberos_service_name=kbservice

)

def query(self, sql):

with self.conn.cursor() as cursor:

cursor.execute(sql)

return cursor.fetchall()

def close(self):

self.conn.close()

if __name__ == '__main__':

hive_client = HiveClient(db_host='namenode02.xxx.com', port=10000, authMechanism='GSSAPI', user='hive', password='',

database='data_mp_raw', kbservice='hive')

sql = "select dt, hour, vid, sum(1) as play_num from tbl_mp_stream where dt='20161204' and hour='12' and vid is not null group by dt, hour, vid order by play_num desc limit 50"

sql = "SHOW TABLES"

result = hive_client.query(sql)

hive_client.close()

for x in result:

print(x)

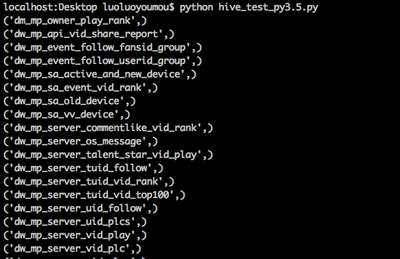

5. The result like this( My python version is 3.5.2 )

Created 01-18-2017 05:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hive_client = HiveClient(db_host='XXXXXXXXX.scglobaluat.aduat.scotiacapital.com', port=10000, authMechanism='GSSAPI', user='XXXXXXX', password='XXXXXX',database='tsz', kbservice='hive')

The below is error code:

Please let me know where is the issue

Traceback (most recent call last):

File "C:/Users/speesary/connct_server", line 33, in <module> database='tsz', kbservice='hive') File "C:/Users/speesary/connct_server", line 17, in __init__ kerberos_service_name=kbservice

File "C:\Users\speesary\AppData\Roaming\Python\Python27\site-packages\impala\dbapi.py", line 147, in connect auth_mechanism=auth_mechanism)

File "C:\Users\speesary\AppData\Roaming\Python\Python27\site-packages\impala\hiveserver2.py", line 658, in connect transport.open()

File "C:\Users\speesary\AppData\Roaming\Python\Python27\site-packages\thrift_sasl\__init__.py", line 72, in open message=("Could not start SASL: %s" % self.sasl.getError())) thrift.transport.TTransport.TTransportException:

Could not start SASL: Error in sasl_client_start (-4) SASL(-4): no mechanism available: Unable to find a callback: 2 Process finished with exit code 1

Created 01-07-2017 08:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

pyhs2 can't support Python 3.

Created 03-30-2020 09:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The DSN-less connection string below FINALLY worked for me, in windows 10. I created a file DSN, then copy/pasted the string into the python code, as a template.

Three lessons that I learned from this struggle:

1) kerberos is CASE SENSITIVE. Your kerberos realm in the string MUST be uppercase.

2) The Cloudera driver doesn't like spaces in between the semicolons in the string. Avoid them.

3) If you don't need connection pooling, turn it off with a pyodbc.pooling = False statement.

import pyodbc

strFileDSNAsAstring = "DRIVER=Cloudera ODBC Driver for Apache Hive;USEUNICODESQLCHARACTERTYPES=1; \

SSL=0;SERVICEPRINCIPALCANONICALIZATION=0;SERVICEDISCOVERYMODE=0;SCHEMA=database;PORT=port; \

KRBSERVICENAME=hive;KRBREALM=uppercaserealm;KRBHOSTFQDN=hostfqdndomain;INVALIDSESSIONAUTORECOVER=1; \

HOST=host;HIVESERVERTYPE=2;GETTABLESWITHQUERY=0;ENABLETEMPTABLE=0;DESCRIPTION=Hive; \

DELEGATEKRBCREDS=0;AUTHMECH=1;ASYNCEXECPOLLINTERVAL=100;APPLYSSPWITHQUERIES=1;CAIssuedCertNamesMismatch=1;"

try:

pyodbc.pooling = False

conn = pyodbc.connect(strFileDSNAsAstring, autocommit=True)

except:

print("failure.")

else:

conn.close()

print("success.")