Cloudera Community: Support Questions


Python script has been killed due to timeout after waiting 300 secs in service check

Created on 10-06-2017 05:52 AM - edited 09-16-2022 05:21 AM

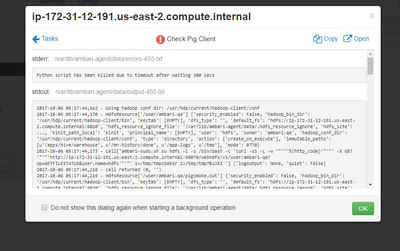

2017-10-06 05:17:44,162 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-10-06 05:17:44,170 - HdfsResource['/user/ambari-qa'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://ip-172-31-12-191.us-east-2.compute.internal:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'ambari-qa', 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp'], 'mode': 0770}

2017-10-06 05:17:44,173 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://ip-172-31-12-191.us-east-2.compute.internal:50070/webhdfs/v1/user/ambari-qa?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpzOeEsr 2>/tmp/tmp7BuJX3''] {'logoutput': None, 'quiet': False}

2017-10-06 05:17:44,218 - call returned (0, '')

2017-10-06 05:17:44,219 - HdfsResource['/user/ambari-qa/pigsmoke.out'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://ip-172-31-12-191.us-east-2.compute.internal:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'ambari-qa', 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'directory', 'action': ['delete_on_execute'], 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp']}

2017-10-06 05:17:44,220 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://ip-172-31-12-191.us-east-2.compute.internal:50070/webhdfs/v1/user/ambari-qa/pigsmoke.out?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpwdjQAo 2>/tmp/tmp4k4Dme''] {'logoutput': None, 'quiet': False}

2017-10-06 05:17:44,265 - call returned (0, '')

2017-10-06 05:17:44,267 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X DELETE '"'"'http://ip-172-31-12-191.us-east-2.compute.internal:50070/webhdfs/v1/user/ambari-qa/pigsmoke.out?op=DELETE&recursive=True&user.name=hdfs'"'"' 1>/tmp/tmpecNoes 2>/tmp/tmpxvvwEZ''] {'logoutput': None, 'quiet': False}

2017-10-06 05:17:44,312 - call returned (0, '')

2017-10-06 05:17:44,313 - HdfsResource['/user/ambari-qa/passwd'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'source': '/etc/passwd', 'dfs_type': '', 'default_fs': 'hdfs://ip-172-31-12-191.us-east-2.compute.internal:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'ambari-qa', 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp']}

2017-10-06 05:17:44,314 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET '"'"'http://ip-172-31-12-191.us-east-2.compute.internal:50070/webhdfs/v1/user/ambari-qa/passwd?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpriVL9x 2>/tmp/tmp7s8cyv''] {'logoutput': None, 'quiet': False}

2017-10-06 05:17:44,358 - call returned (0, '')

2017-10-06 05:17:44,359 - Creating new file /user/ambari-qa/passwd in DFS

2017-10-06 05:17:44,359 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT --data-binary @/etc/passwd -H '"'"'Content-Type: application/octet-stream'"'"' '"'"'http://ip-172-31-12-191.us-east-2.compute.internal:50070/webhdfs/v1/user/ambari-qa/passwd?op=CREATE&user.name=hdfs&overwrite=True'"'"' 1>/tmp/tmpbHi8Ml 2>/tmp/tmpmAOKuW''] {'logoutput': None, 'quiet': False}

2017-10-06 05:17:44,432 - call returned (0, '')

2017-10-06 05:17:44,433 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/hdp/current/hadoop-client/bin', 'keytab': [EMPTY], 'dfs_type': '', 'default_fs': 'hdfs://ip-172-31-12-191.us-east-2.compute.internal:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': 'kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/usr/hdp/current/hadoop-client/conf', 'immutable_paths': [u'/apps/hive/warehouse', u'/mr-history/done', u'/app-logs', u'/tmp']}

2017-10-06 05:17:44,433 - File['/var/lib/ambari-agent/tmp/pigSmoke.sh'] {'content': StaticFile('pigSmoke.sh'), 'mode': 0755}

2017-10-06 05:17:44,435 - Writing File['/var/lib/ambari-agent/tmp/pigSmoke.sh'] because it doesn't exist

2017-10-06 05:17:44,435 - Changing permission for /var/lib/ambari-agent/tmp/pigSmoke.sh from 644 to 755

2017-10-06 05:17:44,435 - Execute['pig /var/lib/ambari-agent/tmp/pigSmoke.sh'] {'logoutput': True, 'path': [u'/usr/hdp/current/pig-client/bin:/usr/sbin:/sbin:/usr/local/bin:/bin:/usr/bin'], 'tries': 3, 'user': 'ambari-qa', 'try_sleep': 5}

17/10/06 05:17:45 INFO pig.ExecTypeProvider: Trying ExecType : LOCAL

17/10/06 05:17:45 INFO pig.ExecTypeProvider: Trying ExecType : MAPREDUCE

17/10/06 05:17:45 INFO pig.ExecTypeProvider: Picked MAPREDUCE as the ExecType

2017-10-06 05:17:45,556 [main] INFO org.apache.pig.Main - Apache Pig version 0.16.0.2.5.3.0-37 (rexported) compiled Nov 30 2016, 02:28:11

2017-10-06 05:17:45,556 [main] INFO org.apache.pig.Main - Logging error messages to: /home/ambari-qa/pig_1507267065554.log

2017-10-06 05:17:46,241 [main] INFO org.apache.pig.impl.util.Utils - Default bootup file /home/ambari-qa/.pigbootup not found

2017-10-06 05:17:46,355 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: hdfs://ip-172-31-12-191.us-east-2.compute.internal:8020

2017-10-06 05:17:46,812 [main] INFO org.apache.pig.PigServer - Pig Script ID for the session: PIG-pigSmoke.sh-77b352b2-4ead-4b2a-9d1b-c4e92ec93769

2017-10-06 05:17:47,169 [main] INFO org.apache.hadoop.yarn.client.api.impl.TimelineClientImpl - Timeline service address: http://ip-172-31-3-20.us-east-2.compute.internal:8188/ws/v1/timeline/

2017-10-06 05:17:47,261 [main] INFO org.apache.pig.backend.hadoop.PigATSClient - Created ATS Hook

2017-10-06 05:17:48,005 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig features used in the script: UNKNOWN

2017-10-06 05:17:48,051 [main] INFO org.apache.pig.data.SchemaTupleBackend - Key [pig.schematuple] was not set... will not generate code.

2017-10-06 05:17:48,088 [main] INFO org.apache.pig.newplan.logical.optimizer.LogicalPlanOptimizer - {RULES_ENABLED=[AddForEach, ColumnMapKeyPrune, ConstantCalculator, GroupByConstParallelSetter, LimitOptimizer, LoadTypeCastInserter, MergeFilter, MergeForEach, PartitionFilterOptimizer, PredicatePushdownOptimizer, PushDownForEachFlatten, PushUpFilter, SplitFilter, StreamTypeCastInserter]}

2017-10-06 05:17:48,153 [main] INFO org.apache.pig.impl.util.SpillableMemoryManager - Selected heap (PS Old Gen) of size 699400192 to monitor. collectionUsageThreshold = 489580128, usageThreshold = 489580128

2017-10-06 05:17:48,201 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MRCompiler - File concatenation threshold: 100 optimistic? false

2017-10-06 05:17:48,235 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size before optimization: 1

2017-10-06 05:17:48,236 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size after optimization: 1

2017-10-06 05:17:48,407 [main] INFO org.apache.hadoop.yarn.client.api.impl.TimelineClientImpl - Timeline service address: http://ip-172-31-3-20.us-east-2.compute.internal:8188/ws/v1/timeline/

2017-10-06 05:17:48,414 [main] INFO org.apache.hadoop.yarn.client.RMProxy - Connecting to ResourceManager at ip-172-31-3-20.us-east-2.compute.internal/172.31.3.20:8050

2017-10-06 05:17:48,562 [main] INFO org.apache.hadoop.yarn.client.AHSProxy - Connecting to Application History server at ip-172-31-3-20.us-east-2.compute.internal/172.31.3.20:10200

2017-10-06 05:17:48,640 [main] INFO org.apache.pig.tools.pigstats.mapreduce.MRScriptState - Pig script settings are added to the job

2017-10-06 05:17:48,646 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - mapred.job.reduce.markreset.buffer.percent is not set, set to default 0.3

2017-10-06 05:17:48,648 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - This job cannot be converted run in-process

2017-10-06 05:17:48,970 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Added jar file:/usr/hdp/2.5.3.0-37/pig/pig-0.16.0.2.5.3.0-37-core-h2.jar to DistributedCache through /tmp/temp-620740268/tmp-1469178571/pig-0.16.0.2.5.3.0-37-core-h2.jar

2017-10-06 05:17:48,990 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Added jar file:/usr/hdp/2.5.3.0-37/pig/lib/automaton-1.11-8.jar to DistributedCache through /tmp/temp-620740268/tmp1280680167/automaton-1.11-8.jar

2017-10-06 05:17:49,008 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Added jar file:/usr/hdp/2.5.3.0-37/pig/lib/antlr-runtime-3.4.jar to DistributedCache through /tmp/temp-620740268/tmp-245846803/antlr-runtime-3.4.jar

2017-10-06 05:17:49,037 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Added jar file:/usr/hdp/2.5.3.0-37/hadoop/lib/joda-time-2.8.1.jar to DistributedCache through /tmp/temp-620740268/tmp1888445821/joda-time-2.8.1.jar

2017-10-06 05:17:49,046 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Setting up single store job

2017-10-06 05:17:49,053 [main] INFO org.apache.pig.data.SchemaTupleFrontend - Key [pig.schematuple] is false, will not generate code.

2017-10-06 05:17:49,053 [main] INFO org.apache.pig.data.SchemaTupleFrontend - Starting process to move generated code to distributed cacche

2017-10-06 05:17:49,053 [main] INFO org.apache.pig.data.SchemaTupleFrontend - Setting key [pig.schematuple.classes] with classes to deserialize []

2017-10-06 05:17:49,093 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 1 map-reduce job(s) waiting for submission.

2017-10-06 05:17:49,178 [JobControl] INFO org.apache.hadoop.yarn.client.api.impl.TimelineClientImpl - Timeline service address: http://ip-172-31-3-20.us-east-2.compute.internal:8188/ws/v1/timeline/

2017-10-06 05:17:49,179 [JobControl] INFO org.apache.hadoop.yarn.client.RMProxy - Connecting to ResourceManager at ip-172-31-3-20.us-east-2.compute.internal/172.31.3.20:8050

2017-10-06 05:17:49,179 [JobControl] INFO org.apache.hadoop.yarn.client.AHSProxy - Connecting to Application History server at ip-172-31-3-20.us-east-2.compute.internal/172.31.3.20:10200

2017-10-06 05:17:49,406 [JobControl] WARN org.apache.hadoop.mapreduce.JobResourceUploader - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2017-10-06 05:17:49,471 [JobControl] INFO org.apache.pig.builtin.PigStorage - Using PigTextInputFormat

2017-10-06 05:17:49,480 [JobControl] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2017-10-06 05:17:49,480 [JobControl] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

2017-10-06 05:17:49,505 [JobControl] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths (combined) to process : 1

2017-10-06 05:17:49,982 [JobControl] INFO org.apache.hadoop.mapreduce.JobSubmitter - number of splits:1

2017-10-06 05:17:50,126 [JobControl] INFO org.apache.hadoop.mapreduce.JobSubmitter - Submitting tokens for job: job_1507266152786_0006

2017-10-06 05:17:50,243 [JobControl] INFO org.apache.hadoop.mapred.YARNRunner - Job jar is not present. Not adding any jar to the list of resources.

2017-10-06 05:17:50,519 [JobControl] INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl - Submitted application application_1507266152786_0006

2017-10-06 05:17:50,553 [JobControl] INFO org.apache.hadoop.mapreduce.Job - The url to track the job: http://ip-172-31-3-20.us-east-2.compute.internal:8088/proxy/application_1507266152786_0006/

2017-10-06 05:17:50,553 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - HadoopJobId: job_1507266152786_0006

2017-10-06 05:17:50,553 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Processing aliases A,B

2017-10-06 05:17:50,553 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - detailed locations: M: A[16,4],B[17,4] C: R:

2017-10-06 05:17:50,562 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 0% complete

2017-10-06 05:17:50,562 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Running jobs are [job_1507266152786_0006]

Command failed after 1 tries

Created on 10-12-2017 07:54 PM - edited 08-17-2019 08:26 PM

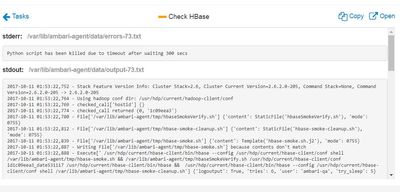

I am also having the same problem with the Check HBase operation:

Python script has been killed due to timeout after waiting 300 secs

2017-10-11 01:53:22,752 - Stack Feature Version Info: Cluster Stack=2.6, Cluster Current Version=2.6.2.0-205, Command Stack=None, Command Version=2.6.2.0-205 -> 2.6.2.0-205

2017-10-11 01:53:22,764 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2017-10-11 01:53:22,769 - checked_call['hostid'] {}

2017-10-11 01:53:22,774 - checked_call returned (0, '1c09eea3')

2017-10-11 01:53:22,780 - File['/var/lib/ambari-agent/tmp/hbaseSmokeVerify.sh'] {'content': StaticFile('hbaseSmokeVerify.sh'), 'mode': 0755}

2017-10-11 01:53:22,812 - File['/var/lib/ambari-agent/tmp/hbase-smoke-cleanup.sh'] {'content': StaticFile('hbase-smoke-cleanup.sh'), 'mode': 0755}

2017-10-11 01:53:22,839 - File['/var/lib/ambari-agent/tmp/hbase-smoke.sh'] {'content': Template('hbase-smoke.sh.j2'), 'mode': 0755}

2017-10-11 01:53:22,887 - Writing File['/var/lib/ambari-agent/tmp/hbase-smoke.sh'] because contents don't match

2017-10-11 01:53:22,888 - Execute[' /usr/hdp/current/hbase-client/bin/hbase --config /usr/hdp/current/hbase-client/conf shell /var/lib/ambari-agent/tmp/hbase-smoke.sh && /var/lib/ambari-agent/tmp/hbaseSmokeVerify.sh /usr/hdp/current/hbase-client/conf id1c09eea3_date531117 /usr/hdp/current/hbase-client/bin/hbase && /usr/hdp/current/hbase-client/bin/hbase --config /usr/hdp/current/hbase-client/conf shell /var/lib/ambari-agent/tmp/hbase-smoke-cleanup.sh'] {'logoutput': True, 'tries': 6, 'user': 'ambari-qa', 'try_sleep': 5}

ERROR: Can't get the location for replica 0

Here is some help for this command:

Start disable of named table:

hbase> disable 't1'

hbase> disable 'ns1:t1'

ERROR: Can't get the location for replica 0

Here is some help for this command:

Drop the named table. Table must first be disabled:

hbase> drop 't1'

hbase> drop 'ns1:t1'

ERROR: org.apache.hadoop.hbase.PleaseHoldException: Master is initializing

at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:2672)

at org.apache.hadoop.hbase.master.HMaster.checkNamespaceManagerReady(HMaster.java:2677)

at org.apache.hadoop.hbase.master.HMaster.ensureNamespaceExists(HMaster.java:2915)

at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:1686)

at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:483)

at org.apache.hadoop.hbase.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java:59846)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2150)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:112)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:187)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:167)

Here is some help for this command:

Creates a table. Pass a table name, and a set of column family

specifications (at least one), and, optionally, table configuration.

Column specification can be a simple string (name), or a dictionary

(dictionaries are described below in main help output), necessarily

including NAME attribute.

Examples:

Create a table with namespace=ns1 and table qualifier=t1

hbase> create 'ns1:t1', {NAME => 'f1', VERSIONS => 5}

Create a table with namespace=default and table qualifier=t1

hbase> create 't1', {NAME => 'f1'}, {NAME => 'f2'}, {NAME => 'f3'}

hbase> # The above in shorthand would be the following:

hbase> create 't1', 'f1', 'f2', 'f3'

hbase> create 't1', {NAME => 'f1', VERSIONS => 1, TTL => 2592000, BLOCKCACHE => true}

hbase> create 't1', {NAME => 'f1', CONFIGURATION => {'hbase.hstore.blockingStoreFiles' => '10'}}

Table configuration options can be put at the end.

Examples:

hbase> create 'ns1:t1', 'f1', SPLITS => ['10', '20', '30', '40']

hbase> create 't1', 'f1', SPLITS => ['10', '20', '30', '40']

hbase> create 't1', 'f1', SPLITS_FILE => 'splits.txt', OWNER => 'johndoe'

hbase> create 't1', {NAME => 'f1', VERSIONS => 5}, METADATA => { 'mykey' => 'myvalue' }

hbase> # Optionally pre-split the table into NUMREGIONS, using

hbase> # SPLITALGO ("HexStringSplit", "UniformSplit" or classname)

hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit'}

hbase> create 't1', 'f1', {NUMREGIONS => 15, SPLITALGO => 'HexStringSplit', REGION_REPLICATION => 2, CONFIGURATION => {'hbase.hregion.scan.loadColumnFamiliesOnDemand' => 'true'}}

You can also keep around a reference to the created table:

hbase> t1 = create 't1', 'f1'

Which gives you a reference to the table named 't1', on which you can then

call methods.

Command failed after 1 tries
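The Execute line above runs the smoke test with 'tries': 6 and 'try_sleep': 5, but the whole script is also subject to the 300-second limit, so slow attempts can use up the time budget long before the retries run out. The sketch below models that interaction under an assumed overall deadline. `run_with_retries` is a hypothetical helper, not Ambari's retry logic (the error message suggests that in Ambari the agent kills the script process from outside when the limit is reached).

```python
import time
from typing import Callable


def run_with_retries(attempt: Callable[[], bool], tries: int, try_sleep: float,
                     deadline_s: float, clock: Callable[[], float] = time.monotonic,
                     sleep: Callable[[float], None] = time.sleep) -> int:
    """Retry `attempt` in the style of tries/try_sleep, bounded by deadline_s.

    Returns the 1-based number of the attempt that succeeded, or raises
    TimeoutError once the tries are used up or the overall budget would be exceeded.
    """
    if tries < 1:
        raise ValueError("tries must be at least 1")
    start = clock()
    for n in range(1, tries + 1):
        if attempt():
            return n
        elapsed = clock() - start
        # Give up if this was the last try, or if sleeping again would pass the deadline.
        if n == tries or elapsed + try_sleep >= deadline_s:
            raise TimeoutError(f"Command failed after {n} tries")
        sleep(try_sleep)
    raise AssertionError("unreachable")
```

With attempts that each take about 200 seconds, tries=6, try_sleep=5 and a 300-second budget, this gives up after two tries even though six are allowed.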

Created 10-13-2017 06:33 PM

The timeout comes from the finite limit that Ambari places on service check Python scripts so that they bail out rather than run forever.
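As a rough illustration of this mechanism (not Ambari's actual agent code), the sketch below runs a check command under a fixed time budget using Python's standard `subprocess.run(..., timeout=...)`, which kills the child process and raises `subprocess.TimeoutExpired` when the budget is exceeded. The function name `run_check` and its return messages are hypothetical.

```python
import subprocess
import sys
from typing import Sequence


def run_check(cmd: Sequence[str], timeout_s: float) -> str:
    """Run a check command, killing it if it runs longer than timeout_s seconds."""
    try:
        # On timeout, subprocess.run kills the child and raises TimeoutExpired.
        result = subprocess.run(list(cmd), capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        return f"killed due to timeout after waiting {timeout_s} secs"
    if result.returncode == 0:
        return "ok"
    return f"failed with exit code {result.returncode}"


if __name__ == "__main__":
    # A trivial command that finishes well within the budget.
    print(run_check([sys.executable, "-c", "pass"], 300))
```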

The point to note is that there may be a general problem with HBase health: either the HBase service check cannot finish within 300 seconds (a performance problem), or the HBase process is not responding at all.
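To tell these two cases apart, a first step is to see whether the HBase Master accepts connections at all. The sketch below is a hypothetical helper that attempts a TCP connection with a short timeout. The host name and port 16000 (the usual HBase 1.x Master RPC default) are assumptions; check hbase.master.port in hbase-site.xml for your cluster. A successful connection only shows that the process is listening, not that it has finished initializing.

```python
import socket


def port_responds(host: str, port: int, timeout_s: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout_s."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers connection refused, unreachable host, and socket timeouts.
        return False


if __name__ == "__main__":
    # Hypothetical host name; replace with your HBase Master host.
    print(port_responds("hbase-master.example.com", 16000))
```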

Can you check the logs for HBase and the services it depends on to verify that they are in a working state?
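When going through those logs, it can help to filter for the errors already seen in the service check output above. The sketch below is a hypothetical filter whose patterns are taken from this thread ("Master is initializing" and "Can't get the location for replica"); extend the list with whatever else turns up in your logs.

```python
import re
from typing import Iterable, List

# Error markers taken from the HBase service check output in this thread.
MARKERS = [
    r"PleaseHoldException: Master is initializing",
    r"Can't get the location for replica \d+",
]
PATTERN = re.compile("|".join(MARKERS))


def find_known_errors(lines: Iterable[str]) -> List[str]:
    """Return the stripped lines that match one of the known error markers."""
    return [line.strip() for line in lines if PATTERN.search(line)]
```

For example, pass it an open file handle for the HBase Master log; the log location varies by installation, so the path is an assumption you need to adjust.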

CC @Chinmay Das

Created 01-23-2018 06:08 AM

I have the same problem. What can I do now?

Created 01-24-2018 08:17 AM

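Edit /var/lib/ambari-server/resources/common-services/OOZIE/your_version_number/metainfo.xml (use the directory of the service whose check is timing out, e.g. PIG or HBASE, instead of OOZIE) and increase the value in `<timeout>300</timeout>`.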
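If you would rather script the change, the sketch below uses Python's standard `xml.etree.ElementTree` to set the service check timeout. It assumes the usual layout where the service check's `<commandScript>` sits directly under `<service>` (component scripts live under `<components>` and are left alone). `set_service_check_timeout` is a hypothetical helper, and 600 is only an example value.

```python
import xml.etree.ElementTree as ET


def set_service_check_timeout(xml_text: str, new_timeout: int) -> str:
    """Return xml_text with the service-level <commandScript><timeout> set to new_timeout."""
    root = ET.fromstring(xml_text)
    # Assumed layout: <metainfo><services><service><commandScript><timeout>
    timeouts = root.findall("./services/service/commandScript/timeout")
    if not timeouts:
        raise ValueError("no service-level <commandScript><timeout> element found")
    for element in timeouts:
        element.text = str(new_timeout)
    return ET.tostring(root, encoding="unicode")
```

Note that ElementTree drops XML comments (such as the license header) and the XML declaration when it re-serializes, so keep a backup or make the edit by hand if you want the file otherwise untouched. Ambari Server most likely needs a restart (ambari-server restart) before it picks up the new value.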