Query is not running on hadoop-----spark error

Labels: Apache Spark, Apache YARN

Created on 01-15-2019 10:21 AM - edited 09-16-2022 07:04 AM

Hi,

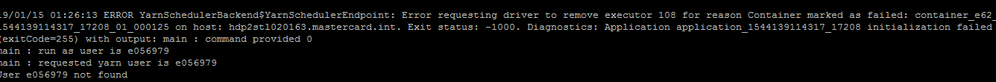

While running my Spark code, it throws the attached error; however, in YARN the job shows as succeeded and finished. When I checked the Spark log, the error below was thrown.

YarnSchedulerBackend$YarnSchedulerEndpoint: Container marked as failed:

Please help me understand this.

Thanks

Yasmin

Created 01-15-2019 07:01 PM

Hello @yasmin

Thanks for posting your query.

From the attached error message, I see that the NodeManager node is complaining that the user ID is not present on the node. Please check whether the username exists on the node (where the AM is running). If you are using AD/LDAP, make sure the node is able to resolve that username.
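As a rough way to run that check, here is a minimal sketch, assuming a Linux NodeManager host with the standard `id` utility. The function name `user_resolves` and the `JOB_USER` variable are hypothetical names chosen for illustration; replace the placeholder default with the user who submitted the job.

```shell
#!/bin/sh
# Hypothetical helper (not from the original reply): succeeds only if this
# node can resolve the given username to a UID, whether the account is
# local or comes from AD/LDAP through the system's name service.
user_resolves() {
    id -u "$1" >/dev/null 2>&1
}

# "root" is only a placeholder default; set JOB_USER to the job owner.
job_user="${JOB_USER:-root}"

if user_resolves "$job_user"; then
    echo "$job_user resolves on $(uname -n)"
else
    echo "$job_user does NOT resolve on $(uname -n)"
fi
```

Running this on each NodeManager host should show which nodes, if any, cannot resolve the user.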

You can also find more details in the container logs by running:

#yarn logs -applicationId <applicationID> -appOwner <username_who_triggered_job>

Satz

Created 01-23-2019 01:40 AM

Hey Satz,

Hope you are doing well. Thanks very much for your response.

As per your reply, the ID of the user who triggered the job is not present on that particular node. Is my understanding correct?

Also, please help me understand this scenario.

I have a job that took much longer today on the new cluster than it had taken on the old one; in fact, it took longer than yesterday.

Several factors can make it take longer, of course, as the input varies, but I also saw one stage take 7 hours when the whole job normally takes 2 or 3.

I saw a lot of errors like

ExecutorLostFailure (executor 1480 exited caused by one of the running tasks) Reason: Stale executor after cluster manager re-registered.

I had 438 failures on 869 tasks, which is a huge rate; another part had 873 out of 1236.

Thanks

Yasmeen

Created 01-23-2019 05:23 AM

Hello @yasmin,

Thanks for reaching out!

"As per your reply it says that the user who triggered the job is not listed on that particular node? Is my understanding correct?" ==> Yes, you're right!

Regarding the job slowness, we should consider the factors you mentioned along with the messages below as well:

~~~

ExecutorLostFailure (executor 1480 exited caused by one of the running tasks) Reason: Stale executor after cluster manager re-registered.

I had 438 failures on 869 tasks, that is a huge rate, another part has 873 out of 1236

~~~

Here it seems the executors are getting lost and, as a result, tasks are dying.

Could you please check the YARN logs for the application (using the #yarn logs command from my previous reply) and see whether there are any errors in the executor logs? This will help us see whether there is a specific reason for the executor failures.

Are you running the job in Spark client mode or cluster mode?
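One possible way to narrow the log output down is sketched below. The helper name `filter_executor_errors` is hypothetical, and the patterns are only the messages quoted in this thread plus a generic `ERROR` match, so treat it as a starting point rather than a complete list of failure signatures.

```shell
#!/bin/sh
# Hypothetical helper: reads log text on stdin and keeps only the lines that
# match the failure messages quoted in this thread, or a generic ERROR marker.
filter_executor_errors() {
    grep -E 'ERROR|ExecutorLostFailure|Container marked as failed'
}

# Example usage (left commented out because it needs a real application ID
# and owner; the placeholders are the same as in the yarn logs command):
# yarn logs -applicationId <applicationID> -appOwner <username_who_triggered_job> \
#     | filter_executor_errors | sort | uniq -c | sort -rn | head -n 20
```

Counting the matches with `sort | uniq -c` puts the most frequent messages first, which may make a dominant failure reason easier to spot.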

Satz