Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: QueryDatabaseTable Problem

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

QueryDatabaseTable Problem

- Labels:

-

Apache NiFi

Created on 12-12-2023 11:31 AM - edited 12-12-2023 11:37 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

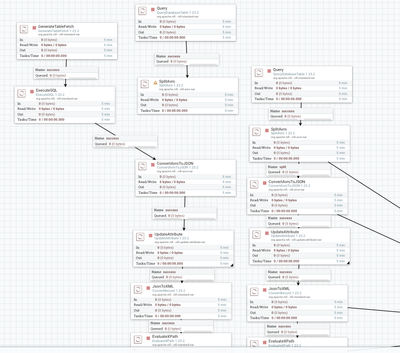

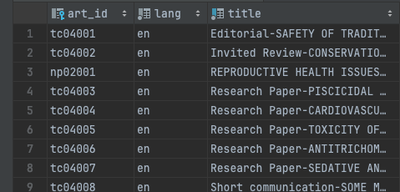

Hi, Guys. I am new to use NiFi flow. I am kind of confused about Query database table processor. So now, I am try to get all data from Postgres database and convert them into json and then convert them into xml.

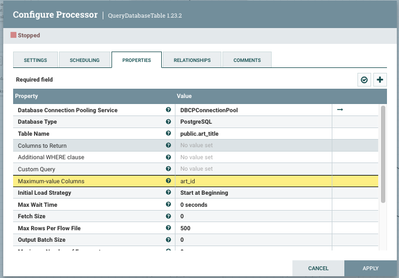

But sometimes, the Query database table processor will have a lot of Flow file and then the queue just mess up. I try to set the art_id to be maximum-value column but it doesn't work well because art_id is string.(not sure)

This is a screenshot from a sample table called title and it has about 500 rows. How to query them just one time and split them into 500 flowfile and each of flowfile has only one row from table title without any duplicates. Can someone help me?

Thank you so much. Following screenshots are my progress so far.

Created 12-14-2023 07:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It seems that you need to process the 500 records once. In this case you dont need to start the ExecuteSQL processor nor worry about scheduling it unless you want to run it again in the future. Keep the processor stopped then right click on it and select Run Once. This should load the total number of records one time.

Created 12-12-2023 12:36 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@FrankHaha Welcome to the Cloudera Community!

To help you get the best possible solution, I have tagged our NiFi experts @SAMSAL @cotopaul who may be able to assist you further.

Please keep us updated on your post, and we hope you find a satisfactory solution to your query.

Regards,

Diana Torres,Senior Community Moderator

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Learn more about the Cloudera Community:

Created 12-12-2023 06:15 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @FrankHaha ,

The QueryDatabaseTable processor is used mainly when you are having a dynamic data table where the data is continuously being added\updated. The provided Maximum Value Column will always help tack the delta by saving the last max value retrieved from the last generated query and so on. This Max value column works better for columns where values increase over time for example numerical , datetime values. For string values it can work if that added\changed is always guaranteed to be greater than previous max value otherwise you might get unpredictable results.

In your case, when you say that the "queue will have a lot of flowfile and it will mess up" can you explain that more? for example are you getting more flowfiles than whats in the table, getting duplicates or getting error? what exactly happen?

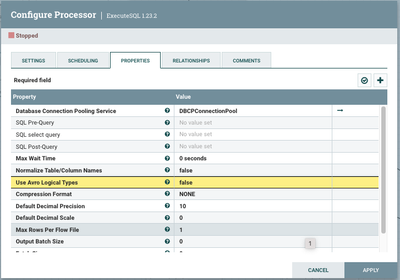

If you have fixed number of records that is not going to change in the future as you are indicating there is 500 records and you want to process the data onetime , then you dont need to use QueryDatabaseTable with max value column, you can use ExecuteSQL or ExecuteSQLRecord and specify the select statement in the "SQL Select Query" property , In the ExecuteSQL you will get the result in Avro format but if you use the ExecuteSQLRecord you can decide the output format in the RecordWriter , for example if you are looking to convert data into Json or XML right away without having to go through Avro.

If that helps please accept solution.

Thanks

Created 12-13-2023 10:14 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your reply.

Yes, "queue will have a lot of flowfile and it will mess up" means that there are a lot of data that I don't really want. I just want the 500 row in my table.

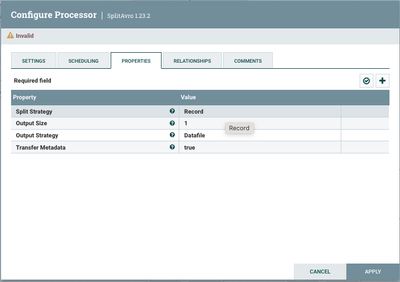

My flow so far is ExecuteSQL and then split avro and then convert to json and then convert to xml. I want each flow file have one row in my table.

For the executeSQL, do I need to set up schedule time? If I run executeSQL, will I get exactly 500 flowfile?

Thank you in advance.

Created on 12-13-2023 10:52 AM - edited 12-13-2023 10:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is there only 500 records in your table and that is all the records you want to processes? If so then you dont need to create schedule , you can run it once using the query in the "SQL Select Query" property:

select * from public.art_title

If you use the ExecuteSQLRecord then you can set the RecordWriter to Json using JsonRecordSetWriter, or XML using XMLRecordSetWriter to get the needed format, this way you save yourself having to convert from Avro to Json then Json to XML. You can get the result in XML right way.

Also if you set the property "Max Rows Per FlowFile" to 1 , then it will create only one record per flowfile so there is no need to split.

Hope that helps.

Created 12-14-2023 06:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

but it looks like still a lot of information, not only 500 flowfiles in the queue. Above pic is my configuration.

Created 12-14-2023 06:23 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

By the way , on the upper right corner of NiFi page, there are some errors that I can't understand. Can you explain it to me? Thank you so much!!!

Created on 12-14-2023 06:44 AM - edited 12-14-2023 06:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I need to understand the following:

1- Does the table have total of 500 records and if so are those records static or are they going to be modified over time and you need to keep processing them ?

2- Do you need to run the ExecuteSQL multiple times to process the same\updated 500 records or do you just need to process them once ? each run of ExecuteSQL with the provided select query will fetch the total records, so if you start the processor to start running indefinitly where the Run Schedule under the Scheduling tab is set to 0 then it will keep fetching the same total records again and again and it will fill up the queue .

Created 12-14-2023 06:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

1. These data is static and there are only 500 rows in the table. I don't need to modify them in the future and I don't need to keep processing them. I only need to get all 500 rows from table and then done.

2. I just need to process them once. Do I need to set the schedule time longer so that the ExecuteSQL only process once and this once will contain 500 rows?

Created on 12-14-2023 06:52 AM - edited 12-14-2023 06:52 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Another things is that if I found some record contain data that doesn't exist in my table(art_title). I don't know why. I can confirm my art_title table only contain 500 rows for sure.