Ranger: Error running solr query

Created 11-18-2021 12:07 PM

Hi,

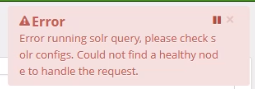

Whenever we try to check the audit on ranger we get the following message and the audit does not appear:

After a quick check of the logs on the Solr server nodes, nodes 1 and 2 show the following message:

2021-11-18 09:53:40,685 WARN [ ] org.apache.solr.handler.admin.MetricsHistoryHandler (MetricsHistoryHandler.java:337) - Unknown format of leader id, skipping: 250349913310626188-xx-xxx-xx-xxxx.xxxxx.xx:8886_solr-n_0000000168

Node 3 has the following message:

2021-11-18T14:57:59,723 [MetricsHistoryHandler-8-thread-1] WARN [ ] org.apache.solr.handler.admin.MetricsHistoryHandler (MetricsHistoryHandler.java:322) - Could not obtain overseer's address, skipping.

org.apache.zookeeper.KeeperException$AuthFailedException: KeeperErrorCode = AuthFailed for /overseer_elect/leader

at org.apache.zookeeper.KeeperException.create(KeeperException.java:126) ~[zookeeper-3.4.11.jar:3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0]

at org.apache.zookeeper.KeeperException.create(KeeperException.java:54) ~[zookeeper-3.4.11.jar:3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0]

at org.apache.zookeeper.ZooKeeper.getData(ZooKeeper.java:1215) ~[zookeeper-3.4.11.jar:3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0]

at org.apache.solr.common.cloud.SolrZkClient.lambda$getData$5(SolrZkClient.java:341) ~[solr-solrj-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:14]

at org.apache.solr.common.cloud.ZkCmdExecutor.retryOperation(ZkCmdExecutor.java:60) ~[solr-solrj-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:14]

at org.apache.solr.common.cloud.SolrZkClient.getData(SolrZkClient.java:341) ~[solr-solrj-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:14]

at org.apache.solr.client.solrj.impl.ZkDistribStateManager.getData(ZkDistribStateManager.java:84) ~[solr-solrj-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:14]

at org.apache.solr.client.solrj.cloud.DistribStateManager.getData(DistribStateManager.java:55) ~[solr-solrj-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:14]

at org.apache.solr.handler.admin.MetricsHistoryHandler.getOverseerLeader(MetricsHistoryHandler.java:316) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at org.apache.solr.handler.admin.MetricsHistoryHandler.amIOverseerLeader(MetricsHistoryHandler.java:349) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at org.apache.solr.handler.admin.MetricsHistoryHandler.amIOverseerLeader(MetricsHistoryHandler.java:344) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at org.apache.solr.handler.admin.MetricsHistoryHandler.collectGlobalMetrics(MetricsHistoryHandler.java:440) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at org.apache.solr.handler.admin.MetricsHistoryHandler.collectMetrics(MetricsHistoryHandler.java:368) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at org.apache.solr.handler.admin.MetricsHistoryHandler.lambda$new$0(MetricsHistoryHandler.java:230) ~[solr-core-7.4.0.jar:7.4.0 9060ac689c270b02143f375de0348b7f626adebc - jpountz - 2018-06-18 16:55:13]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_222]

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [?:1.8.0_222]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [?:1.8.0_222]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [?:1.8.0_222]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_222]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_222]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_222]

Any idea what steps we need to follow to fix this issue?

Thank you for your help.

Created 11-19-2021 01:40 AM

Hello Koffi,

Please delete and recreate the Solr shards.

If you open the Solr web UI, you will see that one or more nodes are down.

Log in over SSH to the node that is down and check the Infra Solr data directory; you will notice that no core directories exist. Check the same directory on an Infra Solr node that is active, and you will see the core directories present. This is why all cores are down on a specific node.
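The directory check described above can be sketched as a small script to run on each Infra Solr node. It treats any subdirectory containing a `core.properties` file as a core directory, which is how Solr discovers cores. The default path `/var/lib/ambari-infra-solr/data` and the `DATA_DIR` variable are assumptions and are not stated in this thread; adjust them to match your installation.

```shell
#!/usr/bin/env bash
# Sketch: list Solr core directories under a data directory.
# A subdirectory counts as a core if it contains a core.properties file.
list_cores() {
  data_dir="$1"
  find "$data_dir" -mindepth 2 -maxdepth 2 -type f -name core.properties \
    -exec dirname {} \; | sort
}

# Assumption: this default path is illustrative; set DATA_DIR for your cluster.
DATA_DIR="${DATA_DIR:-/var/lib/ambari-infra-solr/data}"

if [ -d "$DATA_DIR" ]; then
  # Empty output here means no core directories were found on this node.
  list_cores "$DATA_DIR"
else
  echo "data directory not found: $DATA_DIR" >&2
fi
```

Running it on a healthy node and on the node that is down, then comparing the two outputs, should show whether the core directories are missing.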

To fix this issue, delete the ranger_audits collection and let it be created again.

1. Stop the Solr Infra and Ranger services.

2. Delete the collection:

   curl -k --negotiate -u : "http://$(hostname -f):8886/solr/admin/collections?action=DELETE&name=ranger_audits"

3. Start Ranger and Solr.
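As a rough sketch of the delete step, the script below builds the Collections API URLs and prints the `curl` commands for review instead of sending them. The `collections_url` helper and the `SOLR_HOST` variable are hypothetical names introduced here; port 8886 and the `curl` flags come from the command above. The follow-up `LIST` call is an addition for confirming that `ranger_audits` is gone. The Collections API is answered by a running Solr node, so this assumes the targeted node is up when the request is actually sent.

```shell
#!/usr/bin/env bash
# Sketch: build Solr Collections API URLs (port 8886 as used in this thread).
# collections_url HOST ACTION [COLLECTION_NAME]
collections_url() {
  host="$1"
  action="$2"
  name="${3:-}"
  url="http://${host}:8886/solr/admin/collections?action=${action}"
  if [ -n "$name" ]; then
    url="${url}&name=${name}"
  fi
  echo "$url"
}

# Assumption: SOLR_HOST is a hypothetical override; defaults to this host's FQDN.
SOLR_HOST="${SOLR_HOST:-$(hostname -f 2>/dev/null || hostname)}"

# Print the commands rather than executing them, so they can be reviewed first.
echo "curl -k --negotiate -u : '$(collections_url "$SOLR_HOST" DELETE ranger_audits)'"
echo "curl -k --negotiate -u : '$(collections_url "$SOLR_HOST" LIST)'"
```

The printed commands can be pasted into a shell on a Kerberos-authenticated session once they look right.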

Hopefully this will resolve the issue.

Regards,

Vaishnavi Nalawade

Created 12-01-2021 03:01 AM

Increase the Solr heap size and restart the service, then check whether that fixes the issue.
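For reference, a minimal sketch of what a heap change can look like in a stock Solr `solr.in.sh` environment file. The 2 GB value is purely illustrative, and on a managed cluster the equivalent setting is normally changed through the Infra Solr configuration in the management UI rather than by editing this file; the exact property name is not given in this thread.

```shell
# Sketch for solr.in.sh: set minimum and maximum JVM heap for Solr.
# Assumption: 2g is an example value, not a recommendation from this thread.
SOLR_JAVA_MEM="-Xms2g -Xmx2g"
```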