Support Questions

Read Database tables and convert into CSV format

Labels: Apache NiFi

Created on 03-08-2018 07:50 AM - edited 08-18-2019 02:56 AM

Hi Team,

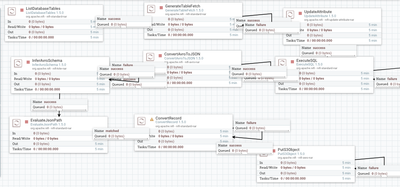

I'm trying to read data from a MySQL database and write it to S3. I can read the data from MySQL and place it in an S3 bucket, but the data that ends up in the bucket is JSON, not CSV. My current flow is shown above. I have six tables in total to read from the database, and each table has a different schema.

I want to write CSV data to the S3 bucket instead. I got some suggestions from experts, but I'm a bit unsure whether what I'm doing is correct. Any other possible solutions or suggestions for writing CSV data to S3 would be helpful.

Suggestions I got:

https://stackoverflow.com/questions/49145832/convert-json-to-csv-in-nifi

Created 03-08-2018 12:05 PM

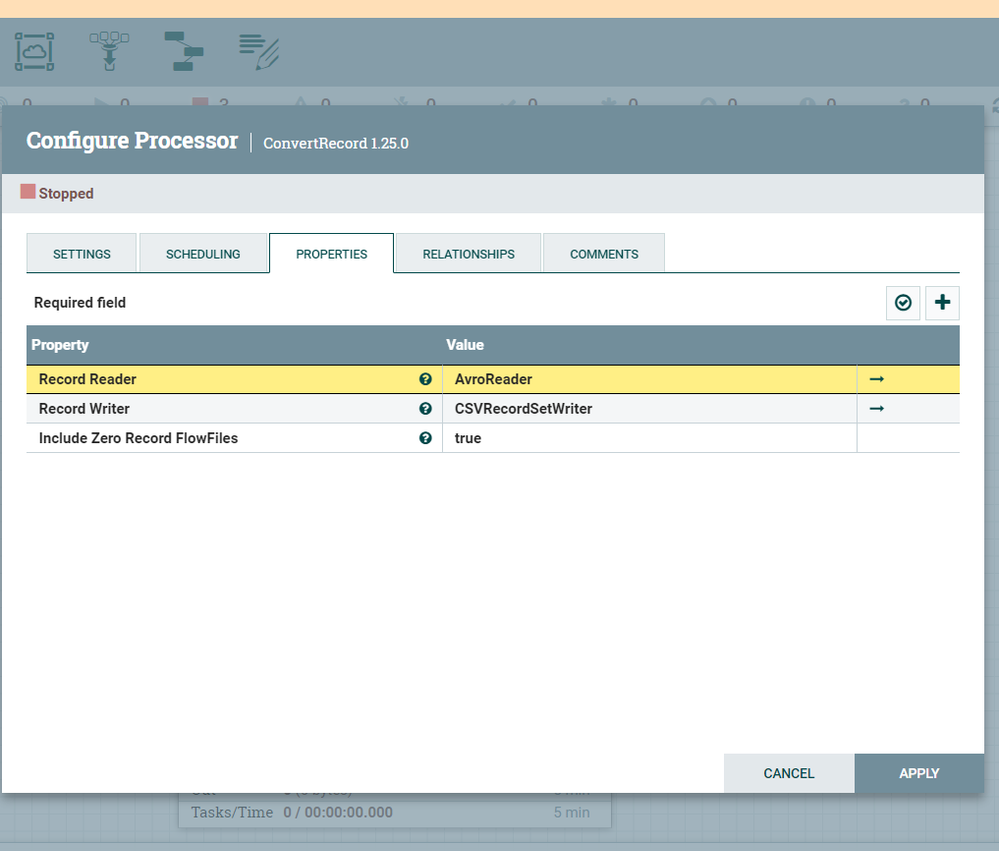

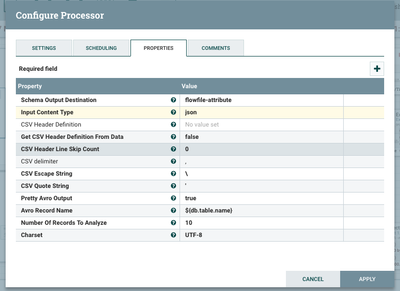

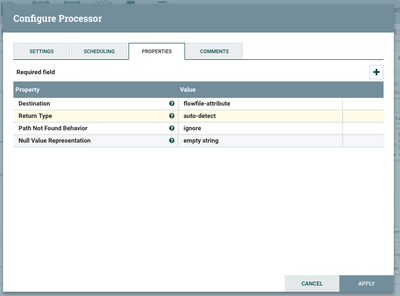

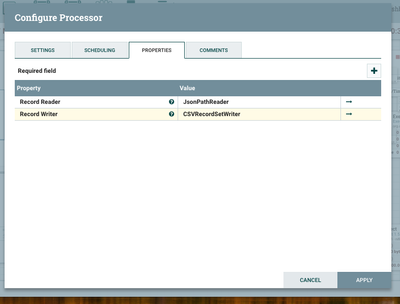

Since you are using NiFi 1.5, you can use a ConvertRecord processor with AvroReader as the Record Reader and CSVRecordSetWriter as the Record Writer.

ConvertRecord processor:

- Record Reader: AvroReader (reads the incoming Avro-format flowfile contents)

- Record Writer: CSVRecordSetWriter (writes the output records in CSV format)
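To make the reader/writer split concrete, here is a minimal Python sketch of what a CSV record writer does conceptually. It takes records that have already been parsed (for example by AvroReader), writes a header row from the schema's field names, then writes one row per record. This is not NiFi code. `records_to_csv` and the sample rows are hypothetical, and the real CSVRecordSetWriter has its own properties for the delimiter, quoting and header line.

```python
import csv
import io
from typing import Iterable, Mapping, Sequence


def records_to_csv(records: Iterable[Mapping[str, object]],
                   field_names: Sequence[str]) -> str:
    """Serialize already-parsed records to CSV text with a header row.

    field_names plays the role of the record schema: it fixes the column
    order, and a field missing from a record is written as an empty cell.
    """
    buffer = io.StringIO()
    # lineterminator="\n" keeps the output easy to inspect (csv defaults to "\r\n").
    writer = csv.DictWriter(buffer, fieldnames=list(field_names),
                            restval="", lineterminator="\n")
    writer.writeheader()
    for record in records:
        # None values are written as empty strings; values containing the
        # delimiter are quoted automatically (csv.QUOTE_MINIMAL).
        writer.writerow(record)
    return buffer.getvalue()


# Hypothetical rows, shaped like what a record reader hands to the writer.
rows = [
    {"id": 1, "name": "Alice", "city": "Pune"},
    {"id": 2, "name": "Bob, Jr.", "city": None},
]
print(records_to_csv(rows, ["id", "name", "city"]), end="")
```

Because the header comes from the schema of the records being written, each table's output gets its own columns without a hand-written header per table.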

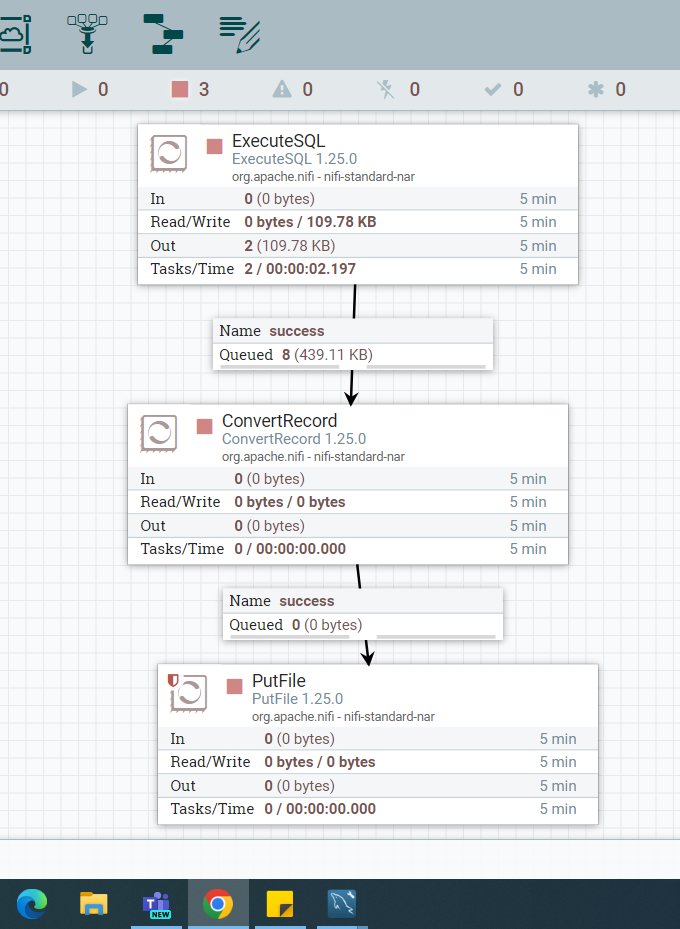

Flow:

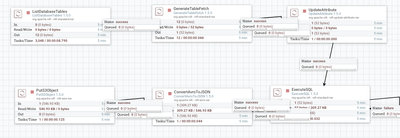

- ListDatabaseTables

- GenerateTableFetch

- UpdateAttribute (are you changing the filename to the UUID?)

- ConvertRecord

- PutS3Object
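The flow above can be sketched end to end in plain Python, including the ExecuteSQL step the original poster later added (GenerateTableFetch only produces SELECT statements and does not run them). This is an illustration under loudly stated assumptions, not how NiFi is implemented. An in-memory `sqlite3` database stands in for MySQL, a plain dict stands in for the S3 bucket, the paging uses `LIMIT`/`OFFSET` roughly in the spirit of what GenerateTableFetch emits, and every function name here is hypothetical. Each page's header is taken from the query result, so tables with different schemas need no per-table setup in this sketch.

```python
import csv
import io
import sqlite3
import uuid


def list_tables(conn):
    # Stand-in for ListDatabaseTables: read user table names from the catalog.
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")
    return [name for (name,) in rows]


def generate_table_fetch(conn, table, partition_size):
    # Stand-in for GenerateTableFetch: emit one paged SELECT per partition.
    # Table names come from the catalog above, not from user input.
    (count,) = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()
    return [f'SELECT * FROM "{table}" ORDER BY rowid '
            f"LIMIT {partition_size} OFFSET {offset}"
            for offset in range(0, count, partition_size)]


def execute_sql_to_csv(conn, query):
    # Stand-in for ExecuteSQL + ConvertRecord: run the query, write CSV.
    cursor = conn.execute(query)
    header = [column[0] for column in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(cursor)
    return buffer.getvalue()


def run_flow(conn, bucket, partition_size=2):
    # bucket is a dict standing in for the PutS3Object target bucket.
    for table in list_tables(conn):
        for query in generate_table_fetch(conn, table, partition_size):
            # Stand-in for UpdateAttribute: a unique, UUID-based object key.
            key = f"{table}/{uuid.uuid4()}.csv"
            bucket[key] = execute_sql_to_csv(conn, query)
    return bucket


def build_demo_db():
    # Hypothetical tables with different schemas, for demonstration only.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER, total REAL)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "Alice"), (2, "Bob"), (3, "Carol")])
    conn.execute("INSERT INTO orders VALUES (10, 1, 9.5)")
    return conn


bucket = run_flow(build_demo_db(), {})
for key in sorted(bucket):
    print(key.split("/")[0], repr(bucket[key]))
```

With a partition size of 2, the three-row `customers` table becomes two CSV objects and `orders` becomes one, each with its own header row.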

Please refer to the link below to configure the AvroReader:

https://community.hortonworks.com/questions/175208/how-to-store-the-output-of-a-query-to-one-text-fi...

If you are still facing issues, please share a sample of 10 records in CSV or JSON format so we can recreate your scenario on our side.

Created on 03-08-2018 04:47 PM - edited 08-18-2019 02:55 AM

Hi @Shu,

I made the changes you suggested and it worked. I added one extra processor (ExecuteSQL). I'm posting the flow here so that it might help anyone with the same requirement in the future. Really appreciate your help. Thanks a lot.

Created 03-08-2018 09:28 PM

Awesome, thank you very much for sharing the flow with us 🙂

Created 03-24-2024 01:31 PM

Hi @Shu_ashu, @ramesh_ganginen