Hi All,

One of the disks on one of our Data Nodes has gone bad, and I wanted to hot swap it. I followed the instructions in the Cloudera documentation (https://www.cloudera.com/documentation/enterprise/latest/topics/admin_dn_swap.html).

I removed the `dfs.datanode.data.dir` configuration only for the affected instance of HDFS, as per the guide, and then clicked Refresh Configuration in the Actions menu.

It has been running for 3 hours now and I'm getting worried. It hasn't shown any progress updates either, so I can't tell whether anything is actually happening.
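In case it helps with diagnosing this: as far as I understand from the Apache Hadoop hot swap documentation, the state of a DataNode reconfiguration task can also be queried from the command line with `hdfs dfsadmin -reconfig`. Below is a minimal sketch of that check. The helper name `reconfig_status` is just something I made up for illustration, and I have not confirmed that this is what Cloudera Manager runs under the hood.

```shell
# Query the status of a DataNode reconfiguration task.
# $1 = DataNode hostname, $2 = DataNode IPC port
# (assumption: 50020 is the default for dfs.datanode.ipc.address on CDH 5).
reconfig_status() {
  hdfs dfsadmin -reconfig datanode "$1:$2" status
}
```

It would be called as `reconfig_status <datanode-host> 50020`, with the hostname of the affected Data Node filled in.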

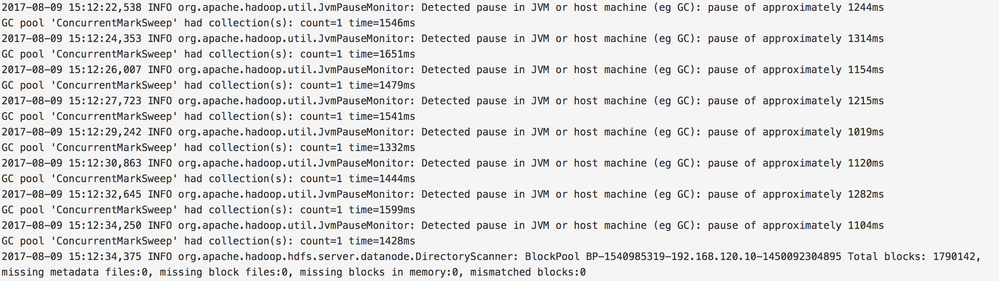

The role logs look as below and keep getting updated periodically:

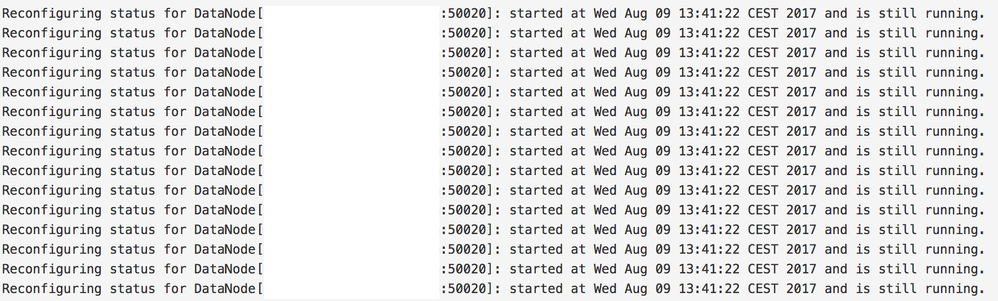

The only thing I see in the logs for the command itself is below:

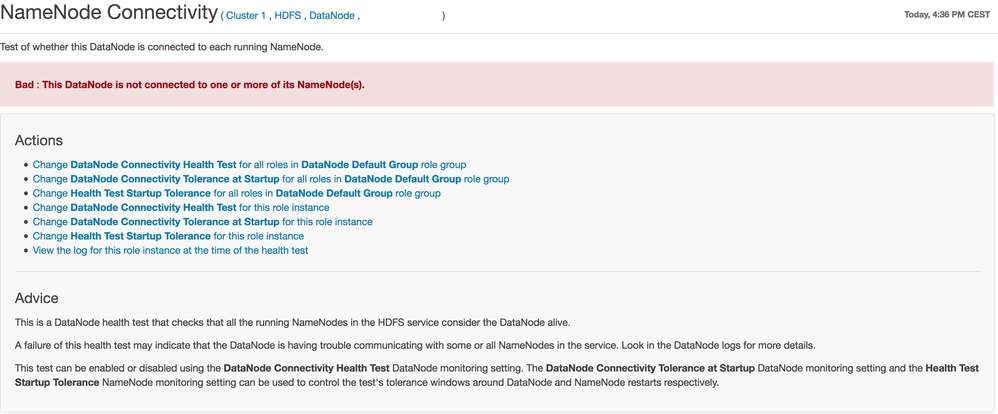

The Data Node itself is showing a red icon, and when I click on it, I see the following. Is the lack of connectivity to the Name Node causing the Refresh Configuration process to hang?

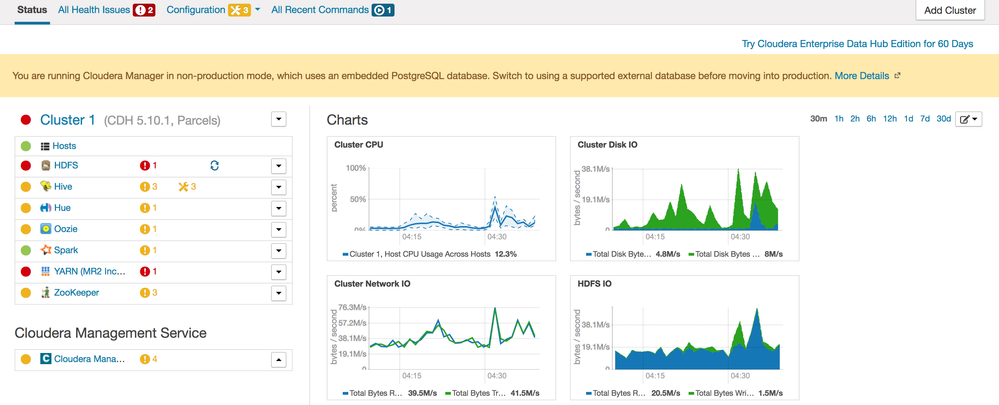

Cloudera Configuration:

Cloudera Community Edition

Cloudera Manager v5.10.1

CDH v5.10.1

Hadoop v2.6 (the one that comes with the above versions of Cloudera)

I also see this on my Cluster's homepage:

As you can see, there's a Stale Configuration icon next to the HDFS role. However, when I click on it, it tells me that I have no stale configuration and that everything is alright.

Additional Information:

When I ran the `hdfs fsck / -files -blocks -locations > dfs-new-fsck-2.log` command, it reported that the health check is OK. However, it shows only 3 Data Nodes connected instead of 4.
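For anyone who wants to check the same two things in their own report, this is roughly how I pulled them out of the saved log. It is a minimal sketch that assumes the report ends with the usual fsck summary, including a `Number of data-nodes:` line and a `The filesystem under path ...` status line. `fsck_summary` is a helper name I chose for illustration.

```shell
# Print the overall health line and the DataNode count from a saved fsck report.
# $1 = path to the report file (e.g. the log written by the fsck command above).
fsck_summary() {
  grep -E 'The filesystem under path|Number of data-nodes' "$1"
}
```

Running `fsck_summary dfs-new-fsck-2.log` prints just those two summary lines rather than the full per-file listing.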

The cluster is in a sane state otherwise. All other roles are running fine, and HDFS itself is running fine from what I can see. Our Oozie jobs are continuing to run and write data. We are able to use Spark to access the data without any issues as well.

I'm guessing I'm in a safe position because the Data Node is not connected to the Name Node. However, I can't be sure.

I have two questions:

1) How long does it normally take for the Refresh Data Node command to run?

2) Since I can see an Abort button on the page, is it safe to abort the Refresh Data Node command without risking data loss?

Any help on this would be greatly appreciated. Thank you in advance.