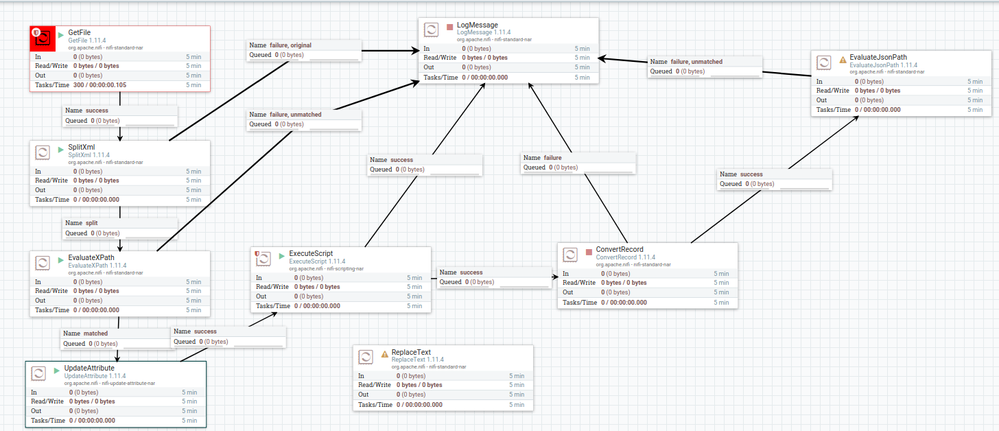

Here is the process up to the point where I got stuck. I have the original XMLs, and I split them to separate out the child XMLs that are the target of my analysis, but these contain duplicate fields. I couldn't find a way to make ReplaceText change only the first element it encounters, so that I could append an ID and make it unique. Another approach I found was the Python script attached below, which did the job, but I wonder whether there is a way to do it in one single process.

    from org.apache.commons.io import IOUtils
    from java.nio.charset import StandardCharsets
    from org.apache.nifi.processor.io import StreamCallback

    class PyStreamCallback(StreamCallback):
        def __init__(self, flowfile):
            self.ff = flowfile

        def process(self, inputStream, outputStream):
            # Read the whole flow file content as UTF-8 text
            text = IOUtils.toString(inputStream, StandardCharsets.UTF_8)
            # Rename only the first opening tag (count=1)
            text = text.replace("<Latitude>", "<Latitude1>", 1)
            # Rename the first closing tag too, so the element stays well-formed
            text = text.replace("</Latitude>", "</Latitude1>", 1)
            outputStream.write(bytearray(text.encode('utf-8')))

    flowFile = session.get()
    if flowFile is not None:
        flowFile = session.write(flowFile, PyStreamCallback(flowFile))
        session.transfer(flowFile, REL_SUCCESS)
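
To show the "first occurrence only" idea on its own, outside NiFi, here is a minimal plain-Python sketch. The function name `rename_first_element` and the sample XML are made up for illustration, and it assumes the element has no attributes and is not nested inside another element of the same name. It uses one regular expression with `count=1`, so the opening and closing tags of the first match are renamed together:

```python
import re


def rename_first_element(xml_text, tag, new_tag):
    """Rename only the first <tag>...</tag> pair found in xml_text.

    Hypothetical helper: assumes the element has no attributes and
    does not contain a nested element with the same name.
    """
    # Lazy match (.*?) stops at the first closing tag; DOTALL lets
    # the element body span several lines.
    pattern = re.compile(
        r"<{0}>(.*?)</{0}>".format(re.escape(tag)),
        re.DOTALL,
    )
    # \g<1> re-inserts the captured element body unchanged.
    replacement = r"<{0}>\g<1></{0}>".format(new_tag)
    # count=1 limits the substitution to the first match only.
    return pattern.sub(replacement, xml_text, count=1)


# Made-up sample with a duplicated field, as in the split child XMLs
sample = (
    "<Point><Latitude>10.5</Latitude>"
    "<Latitude>20.7</Latitude></Point>"
)
result = rename_first_element(sample, "Latitude", "Latitude1")
print(result)
```

Only the first `Latitude` element is renamed; the second one is left as it was.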