Support Questions

- Cloudera Community

- Support

- Support Questions

- ReplaceText doesn't work properly

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

ReplaceText doesn't work properly

- Labels:

-

Apache NiFi

Created 03-29-2018 11:31 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

replacetext.jpgDear all,

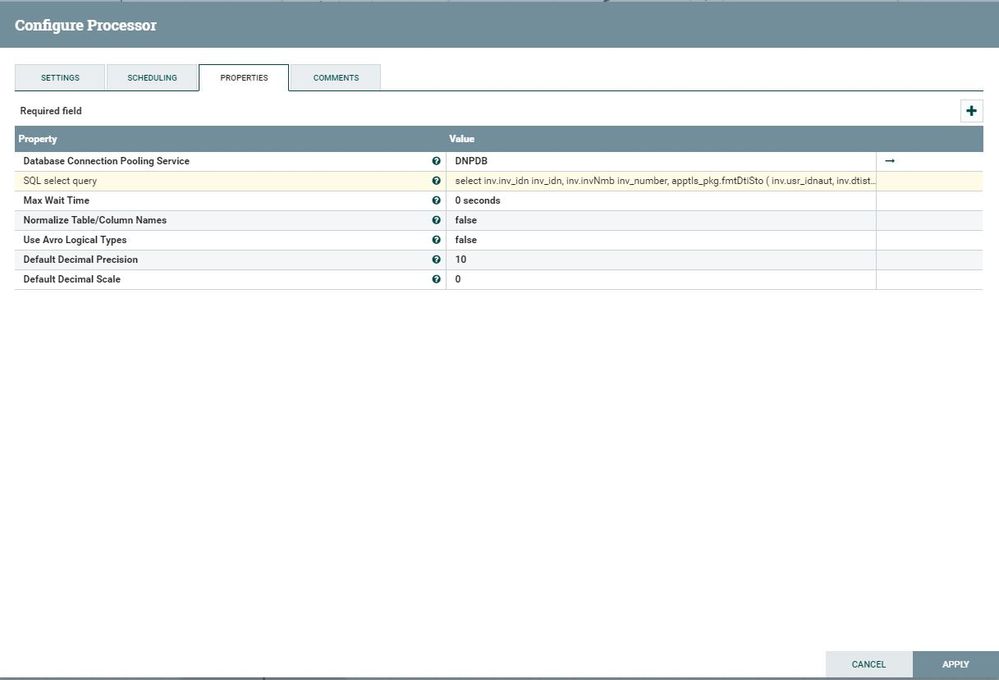

I want to create a CSV file on a monthly bases by executing a SQL script. For this I am using ExecuteSQL processor and ConvertAvroToJSON, SplitJSON, EvaluateJsonPath, ReplaceText and PutFile.

My problem is with ReplaceText processor. Provide a list with empty values even if the JSON values are there and are valid. Please see the attached files. I tried every recommended solution provided here on community but didn't help me.

Kind regards,

Stefan

Created on 03-30-2018 02:45 PM - edited 08-17-2019 10:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

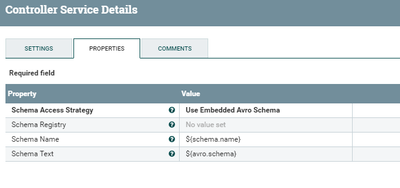

As you are doing ExecuteSql to pull data the format of output flowfile is in Avro and having embedded schema with the content so we can use Embedded AvroSchema as the Schema Access Strategy in ConvertRecord processor by using embedded schema we are skipping defining Schema Registry in both Reader and Writer controller services.

Convert Record processor Configs:-

Record Reader

AvroReader

Avro Reader Configs:-

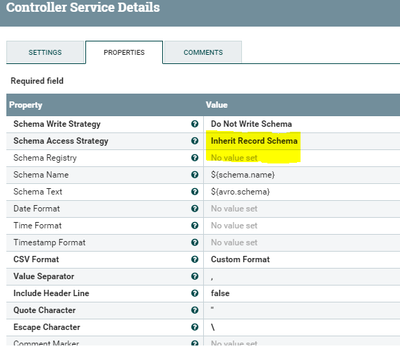

Record Writer

CSVRecordSetWriter

Csv set writer Configs:-

With this configs we are reading the Avro data and converting to Csv, if you want header then keep includer header line property to True.

Flow xml for your reference avro-to-csv.xml

Created on 03-29-2018 12:14 PM - edited 08-17-2019 10:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Could you please change the below properties in Replace text processor and run again

Make sure you are using same attribute names(case sensitive) in replace text processor those are matching with evaluatejson path processor.

(or)

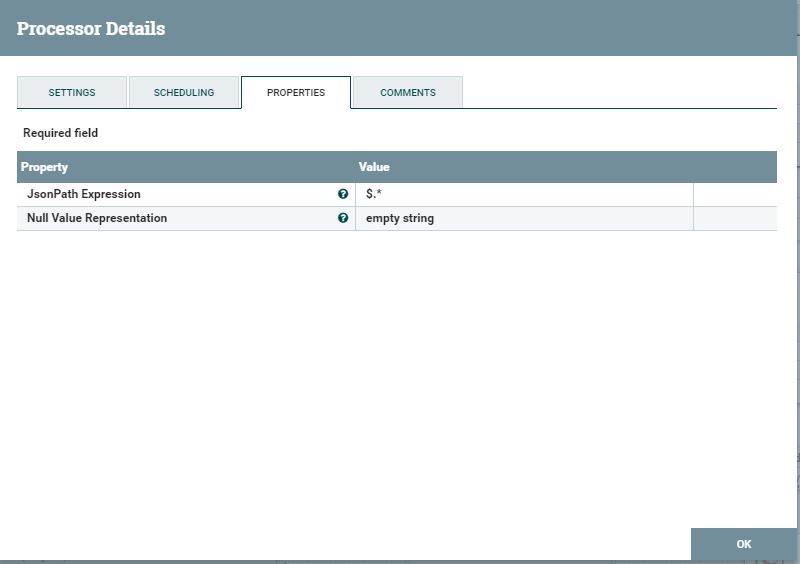

More optimal way of doing this use case is if you are using NiFi 1.2+ then use ConvertRecord processor with Avro Reader as record reader and CSV Set writer as Record setwriter this processor even accepts array of json messages and does conversion to CSV.

Flow:-

Executesql

ConvertRecord

PutFile

This Link describes how to use Convert Record processor.

Let us know if you are having any issues..!!

Created 03-29-2018 04:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Shu,

Unfortunately, both flows didn't work. First one, with ReplaceText having the properties you mentioned still outputted empty values even though the attributes are the same.

For the second one with ConvertRecord, I receive this error: Failed to process StandardFlowFileRecord[...] will route to failure: java.lang.ArrayIndexOutOfBoundsException: -40

java.lang.ArrayIndexOutOfBoundsException: -40

I even try to convert the Avro to JSON and then to pass to ConvertRecord but still the same error...

Any help will be welcomed ! Thank you !

Kind regards,

Stefan

Created 03-30-2018 02:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you are using NiFi 1.2+, I would highly recommend NOT using EvaluateJSONPath. Now talking about the alternate approach, which is using ConvertRecord where you are facing arrayIndexOutOfBoundException, the problem probably is with your schema. Use default null values for your columns in your schema for ConvertRecord Processor like.

{

"type":"record",

"name":"nifi_logs",

"fields":[

{"name":"column1","type":["null","string"]},

{"name":"column2","type":["null","string"]},

{"name":"column3","type":["null","string"]},

{"name":"column4","type":["null","string"]}

]

}Try this and let know if you still face any problems.

Cheers!

Created 03-30-2018 08:10 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Rahul Soni,

Since beggining I tried with null like default values but still I receive that error. Also I validated the JSON so is OK, here is my JSON:

{

"type": "record",

"name": "DnpReport",

"fields" : [

{"name": "inv_idn", "type": ["null", "string"]},

{"name": "inv_number", "type": ["null", "string"]},

{"name": "usr_mdf", "type": ["null", "string"]},

{"name": "invIssDat", "type": ["null", "string"]},

{"name": "invCliCod", "type": ["null", "string"]},

{"name": "invCliNam", "type": ["null", "string"]},

{"name": "invCli_RegCountry", "type": ["null", "string"]},

{"name": "invoiceClass", "type": ["null", "string"]},

{"name": "corCliCod", "type": ["null", "string"]},

{"name": "ofoChiNmb", "type": ["null", "string"]},

{"name": "bsnCtgDsc", "type": ["null", "string"]},

{"name": "bsnCtgCod", "type": ["null", "string"]},

{"name": "bsnCtgDspCod", "type": ["null", "string"]},

{"name": "invTyp", "type": ["null", "string"]},

{"name": "invStu", "type": ["null", "string"]},

{"name": "invOrgCod", "type": ["null", "string"]},

{"name": "cryCod", "type": ["null", "string"]},

{"name": "amnWthVat", "type": ["null", "string"]},

{"name": "amnWthVatInEur", "type": ["null", "string"]},

{"name": "mediaFeesInEur", "type": ["null", "string"]},

{"name": "kcFeesInEur", "type": ["null", "string"]}

]

}

Kind regards,

Stefan

Created 03-30-2018 10:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Shu, @Rahul Soni,

Do you have any other ideas ? Thank you !

Kind regards,

Stefan

Created 03-30-2018 10:33 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

No problem.if your existing approach is not working. Please.share.some.info regarding your convert record processor etc and same dataset it is passing for and is failing for

I can look in further

Created 03-30-2018 12:12 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Json input: [ {

"INV_IDN" : "247048764",

"INV_NUMBER" : "181120060",

"USR_MDF" : "15/03/2018 08:34:00 by LDL",

"INVISSDAT" : "15/03/2018",

"INVCLICOD" : "FUNDQ",

"INVCLINAM" : "FUNDQUEST",

"INVCLI_REGCOUNTRY" : "FR",

"INVOICECLASS" : "CUS - assujetti in EU outside Lx",

"CORCLICOD" : "BNPAMFR",

"OFOCHINMB" : "20173748",

"BSNCTGDSC" : "Fund Data Management",

"BSNCTGCOD" : "LIS",

"BSNCTGDSPCOD" : "FDM",

"INVTYP" : "Credit Note",

"INVSTU" : "Validated",

"INVORGCOD" : "LU",

"CRYCOD" : "EUR",

"AMNWTHVAT" : "-7,543.23",

"AMNWTHVATINEUR" : "-7,543.23",

"MEDIAFEESINEUR" : "0",

"KCFEESINEUR" : "-7543.23"

} ]

Json schema: {

"type": "record",

"name": "DnpReport",

"fields" : [

{"name": "inv_idn", "type": ["null", "string"]},

{"name": "inv_number", "type": ["null", "string"]},

{"name": "usr_mdf", "type": ["null", "string"]},

{"name": "invIssDat", "type": ["null", "string"]},

{"name": "invCliCod", "type": ["null", "string"]},

{"name": "invCliNam", "type": ["null", "string"]},

{"name": "invCli_RegCountry", "type": ["null", "string"]},

{"name": "invoiceClass", "type": ["null", "string"]},

{"name": "corCliCod", "type": ["null", "string"]},

{"name": "ofoChiNmb", "type": ["null", "string"]},

{"name": "bsnCtgDsc", "type": ["null", "string"]},

{"name": "bsnCtgCod", "type": ["null", "string"]},

{"name": "bsnCtgDspCod", "type": ["null", "string"]},

{"name": "invTyp", "type": ["null", "string"]},

{"name": "invStu", "type": ["null", "string"]},

{"name": "invOrgCod", "type": ["null", "string"]},

{"name": "cryCod", "type": ["null", "string"]},

{"name": "amnWthVat", "type": ["null", "string"]},

{"name": "amnWthVatInEur", "type": ["null", "string"]},

{"name": "mediaFeesInEur", "type": ["null", "string"]},

{"name": "kcFeesInEur", "type": ["null", "string"]}

]

}

Created 03-30-2018 01:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The issue is with your json schema because schema key names are not matching with json message key names as json schema having lower case,camel case in name value but json message having upper case names (inv_idn,INV_IDN)

Change the schema in Avro Schema registry to match with incoming json names(i.e upper case) everything will work as expected

Json Schema:-

{

"type": "record",

"name": "DnpReport",

"fields" : [

{"name": "INV_IDN", "type": ["null", "string"]},

{"name": "INV_NUMBER", "type": ["null", "string"]},

{"name": "USR_MDF", "type": ["null", "string"]},

{"name": "INVISSDAT", "type": ["null", "string"]},

{"name": "INVCLICOD", "type": ["null", "string"]},

{"name": "INVCLINAM", "type": ["null", "string"]},

{"name": "INVCLI_REGCOUNTRY", "type": ["null", "string"]},

{"name": "INVOICECLASS", "type": ["null", "string"]},

{"name": "CORCLICOD", "type": ["null", "string"]},

{"name": "OFOCHINMB", "type": ["null", "string"]},

{"name": "BSNCTGDSC", "type": ["null", "string"]},

{"name": "BSNCTGCOD", "type": ["null", "string"]},

{"name": "BSNCTGDSPCOD", "type": ["null", "string"]},

{"name": "INVTYP", "type": ["null", "string"]},

{"name": "INVSTU", "type": ["null", "string"]},

{"name": "INVORGCOD", "type": ["null", "string"]},

{"name": "CRYCOD", "type": ["null", "string"]},

{"name": "AMNWTHVAT", "type": ["null", "string"]},

{"name": "AMNWTHVATINEUR", "type": ["null", "string"]},

{"name": "MEDIAFEESINEUR", "type": ["null", "string"]},

{"name": "KCFEESINEUR", "type": ["null", "string"]}

]

}Output:-

INV_IDN,INV_NUMBER,USR_MDF,INVISSDAT,INVCLICOD,INVCLINAM,INVCLI_REGCOUNTRY,INVOICECLASS,CORCLICOD,OFOCHINMB,BSNCTGDSC,BSNCTGCOD,BSNCTGDSPCOD,INVTYP,INVSTU,INVORGCOD,CRYCOD,AMNWTHVAT,AMNWTHVATINEUR,MEDIAFEESINEUR,KCFEESINEUR 247048764,181120060,15/03/2018 08:34:00 by LDL,15/03/2018,FUNDQ,FUNDQUEST,FR,CUS - assujetti in EU outside Lx,BNPAMFR,20173748,Fund Data Management,LIS,FDM,Credit Note,Validated,LU,EUR,"-7,543.23","-7,543.23",0,-7543.23

Reference template:-

Created on 03-30-2018 02:02 PM - edited 08-17-2019 10:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you want header with lower and camel case names then in csvsetwriter controller change the below property to false.

Include Header Line

false

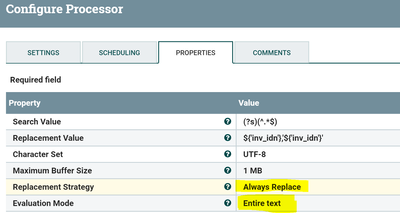

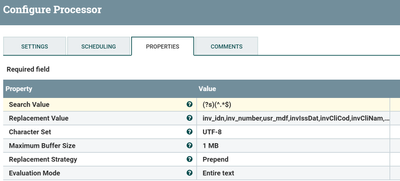

now we are not going to get the header line included in the output flowfile, then use Replace text processor to add our own header to the file

Replace text configs:-

Search Value

(?s)(^.*$)

Replacement Value

inv_idn,inv_number,usr_mdf,invIssDat,invCliCod,invCliNam,invCli_RegCountry,invoiceClass,corCliCod,ofoChiNmb,bsnCtgDsc,bsnCtgCod,bsnCtgDspCod,invTyp,invStu,invOrgCod,cryCod,amnWthVat,amnWthVatInEur,mediaFeesInEur,kcFeesInEur

Character Set

UTF-8

Maximum Buffer Size

1 MB //needs to change the value according to the file size that we are getting after convertrecord processor

Replacement Strategy

Prepend //we are prepend the the content with the above header line

Evaluation Mode

Entire text

Input content:-

247048764,181120060,15/03/2018 08:34:00 by LDL,15/03/2018,FUNDQ,FUNDQUEST,FR,CUS - assujetti in EU outside Lx,BNPAMFR,20173748,Fund Data Management,LIS,FDM,Credit Note,Validated,LU,EUR,"-7,543.23","-7,543.23",0,-7543.23

Output Content:-

inv_idn,inv_number,usr_mdf,invIssDat,invCliCod,invCliNam,invCli_RegCountry,invoiceClass,corCliCod,ofoChiNmb,bsnCtgDsc,bsnCtgCod,bsnCtgDspCod,invTyp,invStu,invOrgCod,cryCod,amnWthVat,amnWthVatInEur,mediaFeesInEur,kcFeesInEur 247048764,181120060,15/03/2018 08:34:00 by LDL,15/03/2018,FUNDQ,FUNDQUEST,FR,CUS - assujetti in EU outside Lx,BNPAMFR,20173748,Fund Data Management,LIS,FDM,Credit Note,Validated,LU,EUR,"-7,543.23","-7,543.23",0,-7543.23