Run Oozie Shell Action (spark2-submit) cannot access hive warehouse

Labels: Apache Oozie, Kerberos

Created 03-08-2022 10:59 PM

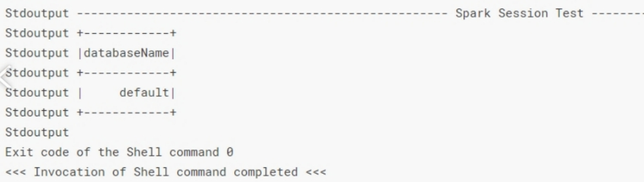

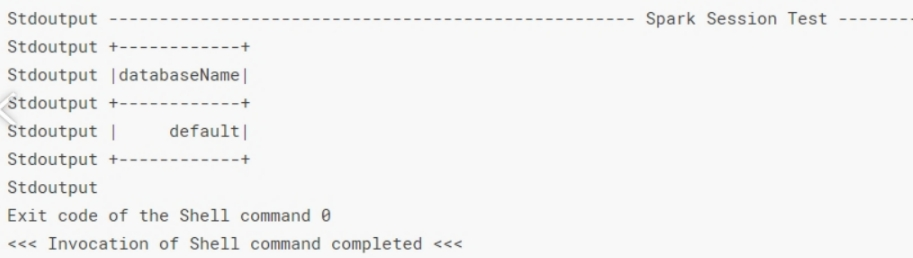

We are trying to run an Oozie shell action that calls spark2-submit in a Kerberos environment (Cloudera Enterprise 5.16.2). In the job we use HiveContext(sc) to show databases, but the result lists only the default database.

Result

Here is my script:

job.properties

WF_ID="$1"

NOMINAL_TIME="$2"

PRINCIPAL="${3}"

KEYTAB="${4}"

VALIDATE_JAR="`ls -1 SparkSQLTest.jar`"

val sc = new SparkContext(new SparkConf().setAppName("spark-test"))

var conf: SparkConf = new SparkConf()

val spark = SparkSession.builder.config(conf).enableHiveSupport().getOrCreate()

spark.sql("show databases").show()

val sqlContext = new HiveContext(sc)

sqlContext.sql("show databases").show()

Created 03-10-2022 08:59 AM

Hello @moth

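Can you please verify that a Hive gateway role (CM > Hive Service > Instances > Add role instance > Gateway) and a Spark2 gateway role (CM > Spark2 Service > Instances > Add role instance > Gateway) are installed on every NodeManager host? Oozie shell actions run as YARN jobs, so spark2-submit may be launched on any NodeManager host in the cluster, and each of those hosts needs the corresponding gateway roles. The gateway roles are what deploy the client configuration (for example hive-site.xml) to a host; without it, Spark cannot find the Hive metastore and typically falls back to a local one that contains only the default database.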
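One rough way to check this from inside the shell action itself is to print which host the action landed on and whether the client configuration files are readable there. The snippet below is only a sketch: the function name `check_client_conf` is made up for illustration, and the fallback directories `/etc/hive/conf` and `/etc/spark2/conf` are assumptions about where the gateway roles deploy client configuration, so adjust them to match your cluster.

```shell
#!/bin/bash
# Hypothetical diagnostic helper (not part of Oozie, Spark, or Cloudera Manager).
# Prints whether a given client config file is readable in a given directory.
check_client_conf() {
  local dir="$1" file="$2"
  if [ -r "$dir/$file" ]; then
    echo "OK: $dir/$file"
  else
    echo "MISSING: $dir/$file"
    return 1
  fi
}

status=0
# Show which NodeManager host YARN picked for this shell action.
echo "Running on host: $(uname -n)"
# The default paths below are assumptions; override via HIVE_CONF_DIR / SPARK_CONF_DIR.
check_client_conf "${HIVE_CONF_DIR:-/etc/hive/conf}" hive-site.xml || status=1
check_client_conf "${SPARK_CONF_DIR:-/etc/spark2/conf}" spark-defaults.conf || status=1
echo "Client config check finished with status $status"
```

If you put these lines at the top of the script that calls spark2-submit, the output ends up in the YARN container log of the Oozie launcher. A MISSING line on some hosts but not others would suggest the gateway roles are not deployed everywhere.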

Created 03-21-2022 07:01 AM

I have the same problem, any solution?