Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Running Sqoop via Oozie causes ERROR 42X05: Ta...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Running Sqoop via Oozie causes ERROR 42X05: Table/View 'DBS' does not exist

- Labels:

-

Apache Hive

-

Apache Oozie

-

Apache Sqoop

Created 03-17-2018 02:36 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello everyone,

I'm stuck with running sqoop job via Oozie.

I can run the same sqoop job via command line.

It fails via Oozie.

Please note that Hive metastore is on MySQL not in Derby.

HDP version: 2.6.4.0-91

Any help appreciated - thanks in advance!

Caused by: ERROR 42X05: Table/View 'DBS' does not exist. at org.apache.derby.iapi.error.StandardException.newException(Unknown Source) at org.apache.derby.impl.sql.compile.FromBaseTable.bindTableDescriptor(Unknown Source) at org.apache.derby.impl.sql.compile.FromBaseTable.bindNonVTITables(Unknown Source) at org.apache.derby.impl.sql.compile.FromList.bindTables(Unknown Source) at org.apache.derby.impl.sql.compile.SelectNode.bindNonVTITables(Unknown Source) at org.apache.derby.impl.sql.compile.DMLStatementNode.bindTables(Unknown Source) at org.apache.derby.impl.sql.compile.DMLStatementNode.bind(Unknown Source) at org.apache.derby.impl.sql.compile.CursorNode.bindStatement(Unknown Source) at org.apache.derby.impl.sql.GenericStatement.prepMinion(Unknown Source) at org.apache.derby.impl.sql.GenericStatement.prepare(Unknown Source) at org.apache.derby.impl.sql.conn.GenericLanguageConnectionContext.prepareInternalStatement(Unknown Source) ... 98 more

Oozie workflow:

<workflow-app name="smoke-test"

xmlns="uri:oozie:workflow:0.5">

<global>

<configuration>

<property>

<name>oozie.use.system.libpath</name>

<value>true</value>

</property>

<property>

<name>oozie.action.sharelib.for.sqoop</name>

<value>hive,hive2,hcatalog,sqoop</value>

</property>

<property>

<name>oozie.libpath</name>

<value>/user/oozie/share/lib</value>

</property>

</configuration>

</global>

<action name="sqoop_1">

<sqoop

xmlns="uri:oozie:sqoop-action:0.4">

<job-tracker>${resourceManager}</job-tracker>

<name-node>${nameNode}</name-node>

<arg>import</arg>

<arg>--connect</arg>

<arg>jdbc:sqlserver://dbserver:1433</arg>

<arg>--username</arg>

<arg>user</arg>

<arg>--password-file</arg>

<arg>/user/xx/xx.pwd</arg>

<arg>--hcatalog-database</arg>

<arg>smoketest</arg>

<arg>--hcatalog-table</arg>

<arg>table-name</arg>

<arg>--hcatalog-storage-stanza</arg>

<arg>stored as orcfile</arg>

<arg>--split-by</arg>

<arg>id</arg>

<arg>--query</arg>

<arg>select field from table WHERE $CONDITIONS</arg>

<arg>--m</arg>

<arg>1</arg>

<arg>--verbose</arg>

</sqoop>

<ok to="end"/>

<error to="kill"/>

</action>

<kill name="kill">

<message>${wf:errorMessage(wf:lastErrorNode())}</message>

</kill>

<end name="end"/>

</workflow-app>

Created 03-17-2018 06:18 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am not an oozie expert but the error "Caused by: ERROR 42X05: Table/View 'DBS' does not exist." where does it pick the DBS table name from?

But in the oozie workflow I don't see the name of the table shouldn't it be an argument before the --hcatalog-storage-stanza

<arg>table-name</arg> <arg>--hcatalog-storage-stanza</arg>

Ain't you missing the location ie --hcatalog-storage-stanza "stored as orcfile LOCATION /bla/bla"

Please revert

Created 03-18-2018 01:16 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Geoffrey,

Thanks a lot for your answer.

I've checked your suggestion by using LOCATION part but I still have same error.

Please note that the same job runs successfully from command line.

It seems to me, that for some reason metastore is tried to be accessed from Derby instead of from MySQL.

Regarding your question, about ERROR 42X05, here is bottom part of stack trace:

Caused by: MetaException(message:Version information not found in metastore. ) at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:6933) at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:6911) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:103) at com.sun.proxy.$Proxy20.verifySchema(Unknown Source) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:600) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:593) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:647) at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:433) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:105) at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78) ... 60 more

Created 03-18-2018 02:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just to validate can you logon to metastore database in MySQL, in my example the metastore DB is called hive

$ mysql -u hive -p{hive_password}

mysql> use hive; Database changed

mysql> show tables;

You should see a table TBLS.... Run a select

mysql> select * from TBLS;

Do you get any output? If Mysql is being used as Metastore DB you should have some output.

If not try this to validate on the Metastore server.

# su - hive $ hive hive> create table artur(id int, name string); hive> describe artur; hive> insert into artur values (6,"Errors");

Now go back to the previous step in Mysql and run a select * against the TBLS table there should be some output if not then your hive is using the derby database.

Created 03-18-2018 03:23 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Geoffrey,

Thanks for checking!

I think, all is fine with metastore - please see output for your statements:

mysql> use hive; Database changed mysql> show tables; +---------------------------+ | Tables_in_hive | +---------------------------+ | AUX_TABLE | | BUCKETING_COLS | | CDS | | COLUMNS_V2 | | COMPACTION_QUEUE | | COMPLETED_COMPACTIONS | | COMPLETED_TXN_COMPONENTS | | DATABASE_PARAMS | | DBS | | DB_PRIVS | | DELEGATION_TOKENS | | FUNCS | | FUNC_RU | | GLOBAL_PRIVS | | HIVE_LOCKS | | IDXS | | INDEX_PARAMS | | KEY_CONSTRAINTS | | MASTER_KEYS | | NEXT_COMPACTION_QUEUE_ID | | NEXT_LOCK_ID | | NEXT_TXN_ID | | NOTIFICATION_LOG | | NOTIFICATION_SEQUENCE | | NUCLEUS_TABLES | | PARTITIONS | | PARTITION_EVENTS | | PARTITION_KEYS | | PARTITION_KEY_VALS | | PARTITION_PARAMS | | PART_COL_PRIVS | | PART_COL_STATS | | PART_PRIVS | | ROLES | | ROLE_MAP | | SDS | | SD_PARAMS | | SEQUENCE_TABLE | | SERDES | | SERDE_PARAMS | | SKEWED_COL_NAMES | | SKEWED_COL_VALUE_LOC_MAP | | SKEWED_STRING_LIST | | SKEWED_STRING_LIST_VALUES | | SKEWED_VALUES | | SORT_COLS | | TABLE_PARAMS | | TAB_COL_STATS | | TBLS | | TBL_COL_PRIVS | | TBL_PRIVS | | TXNS | | TXN_COMPONENTS | | TYPES | | TYPE_FIELDS | | VERSION | | WRITE_SET | +---------------------------+ 57 rows in set (0.00 sec) mysql> select * from TBLS; +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ | TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ | 6 | 1519669737 | 6 | 0 | dm | 0 | 6 | addresses | MANAGED_TABLE | NULL | NULL | +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ 1 row in set (0.00 sec)

Now second part:

hive> create table artur(id int, name string);

OK

Time taken: 3.358 seconds

hive> describe artur;

OK

id int

name string

Time taken: 0.531 seconds, Fetched: 2 row(s)

hive> insert into artur values (6,"Errors");

Query ID = hive_20180318162126_ce71dcd8-26ec-4ea5-9d3d-426975dc81d5

Total jobs = 1

Launching Job 1 out of 1

Status: Running (Executing on YARN cluster with App id application_1521294403728_0006)

--------------------------------------------------------------------------------

VERTICES STATUS TOTAL COMPLETED RUNNING PENDING FAILED KILLED

--------------------------------------------------------------------------------

Map 1 .......... SUCCEEDED 1 1 0 0 0 0

--------------------------------------------------------------------------------

VERTICES: 01/01 [==========================>>] 100% ELAPSED TIME: 4.19 s

--------------------------------------------------------------------------------

Loading data to table default.artur

Table default.artur stats: [numFiles=1, numRows=1, totalSize=9, rawDataSize=8]

OK

Time taken: 6.754 seconds

and output from MySQL:

mysql> use hive; Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Database changed mysql> select * from TBLS; +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ | TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ | 6 | 1519669737 | 6 | 0 | dm | 0 | 6 | addresses | MANAGED_TABLE | NULL | NULL | | 11 | 1521386475 | 1 | 0 | hive | 0 | 11 | artur | MANAGED_TABLE | NULL | NULL | +--------+-------------+-------+------------------+-------+-----------+-------+-----------+---------------+--------------------+--------------------+ 2 rows in set (0.00 sec)

Created 03-18-2018 04:17 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Causedby: ERROR 42X05:Table/View'DBS' does not exist. The issue should be with your Hcat Classpath is missing from the Hadoop Classpath.

Solution

To resolve this issue, run the job with the following:

HADOOP_CLASSPATH=$(hcat -classpath) export HADOOP_CLASSPATH

And let me know

Created 03-18-2018 04:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I've seen this solution - but how/where to actually set it so that when executed from Oozie it is set so?

I did it on command line level it works fine - but not from Oozie.

Created 03-18-2018 05:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great we are almost there. 🙂

You can set the HADOOP_CLASSPATH in the system that runs oozie server. So, sending it every time in request is not required. Otherwise, we can set it in the xml. In file oozie-site.xml set:

<property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=/home/user/oozie/etc/hadoop</value>

</property> Where /home/user/oozie/etc/hadoop is the absolute path where hadoop configuration files are located.

Please let me know whether it worked.

Created on 03-18-2018 07:23 PM - edited 08-18-2019 12:51 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So far, no success.

Here is this property in my /etc/oozie/conf/oozie-site.xml

<property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=/etc/hadoop/conf</value>

</property>

Oozie was restarted.

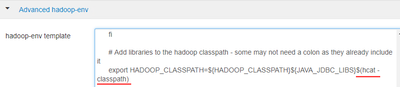

Additionally (just in case), I modified hadoop-env-template via Ambari (HDFS -> Configs -> Advanced -> Advanced hadoop-env)

Then restarted all required services.

I've checked that now in /etc/hadoop/conf/hadoop-env.sh there is line:

export HADOOP_CLASSPATH=${HADOOP_CLASSPATH}${JAVA_JDBC_LIBS}$(hcat -classpath)

But error while running from Oozie is still the same.

Created 03-20-2018 07:22 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Do you have any other idea how to make it work?