Cloudera Community: Support Questions

SQL loader using PutSQL

Labels: Apache NiFi

Created on 11-20-2016 07:08 PM - edited 08-19-2019 04:12 AM

Hi all,

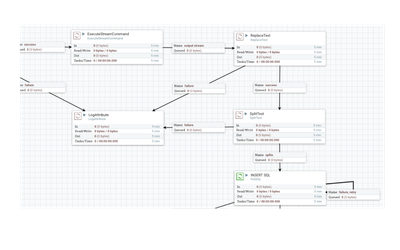

I am trying to build a SQL loader using NiFi. What seems a bit strange is that I have to split the input file (containing INSERT statements) into multiple files, each containing only one SQL INSERT. Is this the best practice? Why can't I send the whole file to the PutSQL processor?

Thanks

Created 11-21-2016 01:31 PM

PutSQL has a mechanism for batching together statements that were split by processors such as SplitText. Set the "Support Fragmented Transactions" property to true, and PutSQL will wait until all flow files with the same fragment.identifier have arrived, then it will process them all as a single batch.
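
To make the mechanism concrete, here is a minimal Python simulation, not NiFi code. Flow files are modeled as plain dicts, and `split_text`, `FragmentBuffer`, and `run_batch` are hypothetical names. It assumes each split carries `fragment.identifier`, `fragment.index`, and `fragment.count` attributes, holds splits until `fragment.count` of them have arrived, orders them by `fragment.index`, and runs the group in one transaction against an in-memory SQLite database standing in for the JDBC connection.

```python
import sqlite3
import uuid
from collections import defaultdict


def split_text(content):
    """Roughly mimic SplitText with one line per split (hypothetical helper).

    Each split is a dict carrying the fragment.* attributes used for grouping.
    Blank lines are skipped for simplicity.
    """
    lines = [line for line in content.splitlines() if line.strip()]
    frag_id = str(uuid.uuid4())
    return [
        {
            "attributes": {
                "fragment.identifier": frag_id,
                "fragment.index": str(i),
                "fragment.count": str(len(lines)),
            },
            "content": line,
        }
        for i, line in enumerate(lines, start=1)
    ]


class FragmentBuffer:
    """Hypothetical stand-in for 'Support Fragmented Transactions' = true:
    hold splits until every fragment with the same identifier has arrived."""

    def __init__(self):
        self.pending = defaultdict(list)

    def offer(self, flow_file):
        attrs = flow_file["attributes"]
        frag_id = attrs["fragment.identifier"]
        self.pending[frag_id].append(flow_file)
        if len(self.pending[frag_id]) < int(attrs["fragment.count"]):
            return None  # still waiting for the rest of this group
        group = self.pending.pop(frag_id)
        group.sort(key=lambda ff: int(ff["attributes"]["fragment.index"]))
        return [ff["content"] for ff in group]


def run_batch(conn, statements):
    """Run all statements in one transaction: commit if all succeed,
    roll back every statement in the group if any one fails."""
    with conn:
        for stmt in statements:
            conn.execute(stmt)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, name TEXT)")
    script = (
        "INSERT INTO t VALUES (1, 'a');\n"
        "INSERT INTO t VALUES (2, 'b');\n"
        "INSERT INTO t VALUES (3, 'c');\n"
    )
    buffer = FragmentBuffer()
    for ff in reversed(split_text(script)):  # simulate out-of-order arrival
        batch = buffer.offer(ff)
        if batch is not None:
            run_batch(conn, batch)
    print(conn.execute("SELECT COUNT(*) FROM t").fetchone()[0])  # prints 3
```

In a real flow the waiting and grouping happen inside PutSQL; the sketch only shows why one-statement-per-flow-file splitting need not mean losing the grouping, assuming the group is executed together as described above.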

There has also been talk of implementing the same improvement for PutSQL as is being done for PutHiveQL (NIFI-3031), to support multiple statements from a single flow file. Please feel free to file a Jira for this if you like.

Created 11-22-2016 12:19 PM

Thanks Matt, very helpful.

Is there any way to use "LOAD INTO" instead of SQL INSERT statements?

Created 11-22-2016 06:18 PM

Yes, PutSQL accepts any SQL statement (except Callable statements like stored procedures) that does not return a result set, so DDL/DML commands such as LOAD INTO or CREATE TABLE, etc. are supported.
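
PutSQL itself runs statements over JDBC, but the "no result set" rule can be illustrated with Python's DB-API, where `cursor.description` stays `None` for statements that return no rows. This sketch uses an in-memory SQLite database as a stand-in and a hypothetical `run_non_query` helper. SQLite has no bulk-load command, so it only shows CREATE TABLE and INSERT; bulk-load syntax (for example MySQL's `LOAD DATA INFILE ... INTO TABLE`) is database-specific, so check your own database's documentation.

```python
import sqlite3


def run_non_query(conn, sql, params=()):
    """Execute one statement and reject it if it produced a result set.

    Hypothetical helper: per the Python DB-API, cursor.description is None
    for operations that return no rows (DDL, INSERT, UPDATE, DELETE).
    """
    cur = conn.execute(sql, params)
    if cur.description is not None:
        raise ValueError("statement returns a result set: " + sql)
    return cur.rowcount  # rows affected by DML; -1 for other statements


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_non_query(conn, "CREATE TABLE t (id INTEGER, name TEXT)")
    print(run_non_query(conn, "INSERT INTO t VALUES (?, ?)", (1, "a")))  # prints 1
    try:
        run_non_query(conn, "SELECT * FROM t")
    except ValueError as err:
        print(err)
```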

Created 11-22-2016 07:49 PM

Big thanks, really.