SSH Fence not working?

Created on 07-11-2016 12:59 PM - edited 08-19-2019 03:20 AM


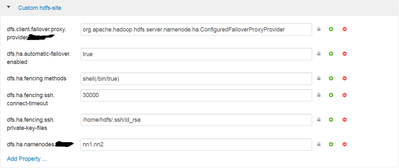

SSHFence never seems to have worked for me with failover activated.

I enabled sshfence, created an hdfs user through Ambari, generated a key with ssh-keygen for passwordless login, and manually tested the passwordless SSH connection. Everything appears to be set up, yet it still does not work: whenever one of my NameNodes failed over in the backend, Ambari falsely reported both as active.

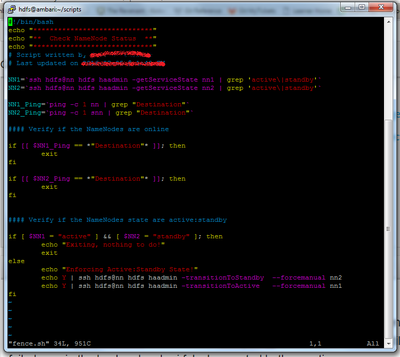

So I went to the default and used the following script, as it's the only way I can get my primary NN to stay active and the secondary as standby.

Essentially, the script pings the NNs every minute; if they respond, it checks their current status and forces them into an active:standby state.
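For readers who cannot see the script, here is a minimal Python sketch of the check-and-force logic described above. It is not the original script. The service IDs `nn1` and `nn2` are hypothetical placeholders (the real ones come from `dfs.ha.namenodes.<nameservice>`), and the sketch assumes that `hdfs haadmin` accepts `--forcemanual` for manual transitions while automatic failover is enabled, which as far as I know also asks for interactive confirmation.

```python
import subprocess

# Hypothetical NameNode service IDs; the real ones are listed in
# dfs.ha.namenodes.<nameservice> in hdfs-site.xml.
PRIMARY = "nn1"
SECONDARY = "nn2"


def get_state(service_id):
    """Return 'active', 'standby', or None if the NameNode did not answer."""
    try:
        result = subprocess.run(
            ["hdfs", "haadmin", "-getServiceState", service_id],
            capture_output=True, text=True, timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    state = result.stdout.strip()
    if result.returncode == 0 and state in ("active", "standby"):
        return state
    return None


def plan_transitions(primary_state, secondary_state):
    """List the haadmin calls that would restore primary=active, secondary=standby."""
    if primary_state is None or secondary_state is None:
        return []  # a node is unreachable: do nothing and leave failover to ZKFC
    plan = []
    if secondary_state == "active":
        # Demote the secondary first so two actives never overlap longer than needed.
        plan.append(["hdfs", "haadmin", "-transitionToStandby", "--forcemanual", SECONDARY])
    if primary_state != "active":
        plan.append(["hdfs", "haadmin", "-transitionToActive", "--forcemanual", PRIMARY])
    return plan
```

A cron job running every minute could call `plan_transitions(get_state(PRIMARY), get_state(SECONDARY))` and execute each returned command. Keeping the decision in a pure function makes it possible to check the both-active and both-standby cases without touching a cluster.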

Theoretically, having the SNN as active and the NN as standby should be fine. However, Ambari never reports the status correctly, and unless I force-transition the nodes it does not report them as active:standby and hdfs://ClusterName fails to work.

If someone has a better solution, I'd love to hear it.

For those wondering, I'm running Ambari 2.2.2.0 and HDP 2.4.2.0 on a CentOS 6 x64 environment.

Additionally, the documentation implies creating a user and writing an SSH script for the fencing approach. What I don't get is the point of running such a script if the NN complains that "failover is activated... you cannot manually failover the nodes", or something along those lines.

There's something I'm definitely missing.

Anyhow, the solution above has been working for me, but it doesn't feel clean, and I'd like to know how the community handles HA and what scripting approach you use.

Created 07-11-2016 04:28 PM


I think the majority of people do not use SSH fencing at all, because NameNode HA works fine without it. The only issue is that during a network partition, old connections to the old standby might still exist and return stale data for read-only operations.

- They cannot perform any write transactions, since the JournalNode majority prohibits that.

- Normally, if ZKFC works correctly, an active NameNode will not go into zombie mode; it is either dead or not.

So the chances of a split brain are low and the impact is pretty limited.

If you use SSH fencing, the important part is that your script must not block; otherwise the failover will be stalled. All scripts need to return in a sensible amount of time even if access is not possible. Fencing is by definition always an attempt, since most of the time the node is simply down, and the scripts need to return success in the end. So you need a fork with a timeout, and then return true.
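To illustrate the "fork with a timeout, then return true" idea, here is a minimal Python sketch. It is one possible shape under stated assumptions, not a tested fencing script: the function name `attempt_fence` and the 10-second default are made up for the example, and the actual fencing command is left to the caller.

```python
import subprocess
import sys


def attempt_fence(command, timeout_s=10.0):
    """Run one fencing attempt, never wait longer than timeout_s, always return 0."""
    try:
        # subprocess.run kills the child process if the timeout expires.
        result = subprocess.run(
            command,
            timeout=timeout_s,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        outcome = "exit code %d" % result.returncode
    except subprocess.TimeoutExpired:
        outcome = "timed out"
    except OSError as exc:
        outcome = "could not start: %s" % exc
    # Log what happened, but report success so the failover is not held up.
    print("fence attempt: %s" % outcome, file=sys.stderr)
    return 0
```

A wrapper script would end with `sys.exit(attempt_fence([...]))` and could be referenced from `dfs.ha.fencing.methods` through a `shell(...)` entry. The trade-off is deliberate: because the script always reports success, Hadoop proceeds with the failover even when the old node could not be reached, which matches the reasoning above that fencing is only ever an attempt.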

Created 07-11-2016 01:03 PM


Additionally, I configured HA by following https://docs.hortonworks.com/HDPDocuments/Ambari-2.2.1.1/bk_Ambari_Users_Guide/content/_how_to_confi...

and

https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.4.2/bk_hadoop-ha/content/ha-nn-config-cluster.h...

Still no cigar.
