Support Questions

- Cloudera Community

- Support

- Support Questions

- [Solved] : sqoop-import MsSQL table into HDFS

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

[Solved] : sqoop-import MsSQL table into HDFS

- Labels:

-

Apache Hadoop

-

Apache Hive

-

Apache Sqoop

Created on 05-28-2018 01:52 PM - edited 08-17-2019 09:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi All,

I have one table in MsSQL Database.

I want to import one table into Hive by using --target-dir parameter.

I have selected default Database in MsSQL for Hive.

My Observation:

sqoop import --connect jdbc:sqlserver://<HOST>:<PORT> --username XXXX --password XXXX --table <mssql_table> --hive-import --hive-table <hivedatabase.hivetable> --create-hive-table --target-dir '<PATH_WHERE_TO_STORE_IMPORTED_DATA>' --fields-terminated-by',' -m 1

P.S.: I have tried with --warehouse-dir also.

Regards,

Jay.

Created 05-28-2018 02:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

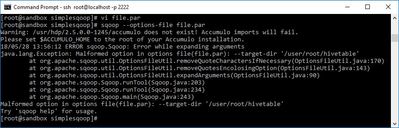

in your file.par file keep --target-dir argument value in new line not in the same line that --target-dir

I think now you are having in options file --target-dir as below

--target-dir '/user/root/hivetable'

Change the --target-dir argument value to newline i.e

--target-dir

'/user/root/hivetable'

Example:

Sample file.par i have tried

bash$ cat file.par

import --connect 'jdbc:sqlserver:/'<HOST>:<PORT>' --username XXXX --password XXXX --table <tab_name> --hive-import --hive-table default.<tab_name> --create-hive-table --target-dir '/user/root/hivetable' --fields-terminated-by

',' -m 1

Created 05-28-2018 02:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

in your file.par file keep --target-dir argument value in new line not in the same line that --target-dir

I think now you are having in options file --target-dir as below

--target-dir '/user/root/hivetable'

Change the --target-dir argument value to newline i.e

--target-dir

'/user/root/hivetable'

Example:

Sample file.par i have tried

bash$ cat file.par

import --connect 'jdbc:sqlserver:/'<HOST>:<PORT>' --username XXXX --password XXXX --table <tab_name> --hive-import --hive-table default.<tab_name> --create-hive-table --target-dir '/user/root/hivetable' --fields-terminated-by

',' -m 1

Created 05-29-2018 05:37 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 05-29-2018 10:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shu,

Thank you for the positive reply. But my observation did not import MsSQL table into selective target directory in HDFS means not importing into '/user/root/hivetable'. It is storing a table into '/apps/hive/warehouse/' directory.

Jay.

Created 05-29-2018 10:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

While running hive import target-dir argument value controls where the data needs to store temporarily before loading into Hive table, but target-dir doesn't create hive table in that location.

If you want to import to specific directory then use target-dir without hive-import argument and create hive table on top of HDFS directory.

(or)

Create Hive external table pointing to your target-dir then in sqoop import remove --create-hive-table argument and --target-dir.

-

your issue in comments is because of --target-dir already exists, so comment it out (or) remove the --target-dir arguments in your options-file then run sqoop import again.

Created on 05-29-2018 02:12 PM - edited 08-17-2019 09:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for respond @Shu.

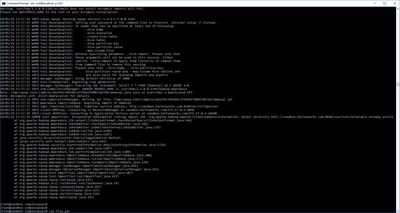

Yes, I deleted --create-hive and --hive-import argument and created external hive table into '/user/root/hivetable/' directory and execute the following command;

sqoop import --connect jdbc:sqlserver://<HOST>:<PORT> --username XXXX --password XXXX --table <mssql_table> --hive-table <hivedatabase.hivetable> --target-dir '/user/root/hivetable/' --fields-terminated-by ',' -m 1

But it says "File already exists".

Regards,

Jay.

Created 05-29-2018 03:20 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The error that you are facing is because of missing --hive-import argument.

Sqoop job storing the data to /user/root/hivetable/(because you are running sqoop import as root user and the table name is hive table).

if you have already created hive external table then your sqoop options file needs to be like below.

import --connect jdbc:sqlserver://<HOST>:<PORT> --username XXXX --password XXXX --table <mssql_table> --hive-import --hive-table <hivedatabase.hivetable> --fields-terminated-by "," -m 1

by using this way we are going to append the data into hive table every time.

Created 05-30-2018 06:11 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you so much. Your command works for me.

So as per my observation for `sqoop-import` command;

We can not use --hive-import and --target-dir/--warehouse-dir both arguments at once. If we have already created external hive table at target directory.

Note:

- If we want to import data of RDBMS table into Hadoop and into the specific directory in HDFS, then the user only --target-dir argument.

- If we want to import RDBMS table into Hive table into the specific HDFS directory; then, first of all, create external hive table and use only --hive-import argument.

- if when we want to use --query argument. we can use both arguments at once.i.e., --hive-import and --target-dir/warehouse-dir

Regards,

Jay.