Spark : How to speedup foreachRDD?

Labels: Apache Spark

Created on 03-03-2017 07:22 AM - edited 09-16-2022 04:11 AM

We have a Spark Streaming application that ingests data at about 10,000 records/sec. We use the foreachRDD operation on our DStream, since Spark doesn't execute anything unless it finds an output operation on the DStream.

With the foreachRDD output operation written as below, it takes up to 3 hours to write a single batch of data (10,000 records), which is far too slow.

requestsWithState is a DStream

CodeSnippet 1:

    requestsWithState.foreachRDD { rdd =>
      rdd.foreach {
        case (topicsTableName, hashKeyTemp, attributeValueUpdate) => {
          val client = new AmazonDynamoDBClient()
          val request = new UpdateItemRequest(topicsTableName, hashKeyTemp, attributeValueUpdate)
          try client.updateItem(request)
          catch {
            case se: Exception => println("Error executing updateItem!\nTable ", se)
          }
        }
        case null =>
      }
    }

I thought the code inside foreachRDD might be the problem, so I commented it out to see how long it takes. To my surprise, even with no code inside foreachRDD it still runs for 3 hours.

CodeSnippet 2:

    requestsWithState.foreachRDD { rdd =>
      rdd.foreach { _ =>
        // No code here, and it still takes a lot of time
        // (there used to be code, but I removed it to see if it's any faster without it)
      }
    }

Please let us know if we are missing anything, or if there is an alternative way to do this. As I understand it, a Spark Streaming application will not run without an output operation on the DStream, and at this time I can't use the other output operations.

Note: To isolate the problem and make sure the DynamoDB code is not the cause, I ran with an empty loop. It looks like foreachRDD is slow on its own when iterating over a huge record set coming in at 10,000/sec, rather than the DynamoDB code, because the empty foreachRDD and the one with the DynamoDB code took the same time.

Created 03-03-2017 07:44 AM

The major problem here is that you are making a client for every single data element. That's incredibly slow. Make one client per RDD partition. You need rdd.foreachPartition instead.
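As a minimal sketch of the one-client-per-partition pattern suggested above: the `Sink` trait, `PartitionWriter`, `writePartition`, and `DynamoSink` are hypothetical names introduced only for illustration, with `Sink` standing in for an expensive client such as `AmazonDynamoDBClient`. The helper is plain Scala so it can be run without a cluster; the Spark wiring is shown as a comment and assumes `requestsWithState` is the DStream from the question.

```scala
// Hypothetical stand-in for an expensive client (e.g. a DynamoDB client).
trait Sink[A] {
  def write(record: A): Unit
  def close(): Unit
}

object PartitionWriter {
  // Opens ONE sink for the whole partition, writes every record, then closes it.
  // Returns the number of records written.
  def writePartition[A](records: Iterator[A], openSink: () => Sink[A]): Int =
    if (!records.hasNext) 0 // empty partition: do not create a client at all
    else {
      val sink = openSink()
      try {
        var written = 0
        records.foreach { record =>
          sink.write(record)
          written += 1
        }
        written
      } finally sink.close() // release the client even if a write throws
    }
}

// Wiring into the stream (needs Spark on the classpath, so shown as a comment).
// DynamoSink is a hypothetical Sink implementation wrapping the real client:
//
//   requestsWithState.foreachRDD { rdd =>
//     rdd.foreachPartition { records =>
//       PartitionWriter.writePartition(records, () => new DynamoSink)
//     }
//   }
```

The sink is created inside the `foreachPartition` closure, so it is constructed on the executor and never has to be serialized from the driver. This reduces client creation from once per record to once per partition, but it does not by itself explain a slow batch when the loop body is empty.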

Created on 03-03-2017 07:59 AM - edited 03-03-2017 08:04 AM

@srowen I tried with foreachPartition too, and it didn't improve the time at all. Also, if creating the connection is the problem, as you said, how come the empty loop took the same 3 hours, as I explained in the question? Could you please explain that? I ran the test with an empty loop just to check whether the connection code is the cause of the slowness, and it looks like it's not.

Created 03-03-2017 08:40 AM

Then it's likely a problem in how you are loading the streaming data, for example a bottleneck on reading it. There are a lot of unknowns here. You can look at the streaming UI stats to get more information on what is taking so long.
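One possible reason an empty loop can take just as long: Spark transformations are lazy, so the upstream reading and transforming only runs when an output operation such as foreachRDD triggers it, and that time may then show up under the output operation. The sketch below is only an analogy in plain Scala, using a lazy `Iterator` in place of an RDD; `LazyDemo`, `expensive`, and `run` are hypothetical names, not part of the application in the question.

```scala
object LazyDemo {
  // Counts how many times the "upstream" step actually executes.
  var upstreamCalls = 0

  // Stand-in for an expensive upstream transformation.
  def expensive(x: Int): Int = {
    upstreamCalls += 1
    x * 2
  }

  // Returns (calls before the loop, calls after the loop).
  def run(): (Int, Int) = {
    upstreamCalls = 0
    // Building the pipeline does no work yet: Iterator.map is lazy.
    val pipeline = (1 to 5).iterator.map(expensive)
    val before = upstreamCalls
    // Empty loop body, like the empty rdd.foreach in the question.
    pipeline.foreach(_ => ())
    (before, upstreamCalls)
  }
}
```

Calling `LazyDemo.run()` returns `(0, 5)`: nothing runs while the pipeline is being defined, and all of the upstream work happens inside the "empty" loop. If the streaming job behaves the same way, the per-stage breakdown in the UI is a better guide than the total attributed to foreachRDD.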

Created on 03-03-2017 11:27 AM - edited 03-03-2017 11:27 AM

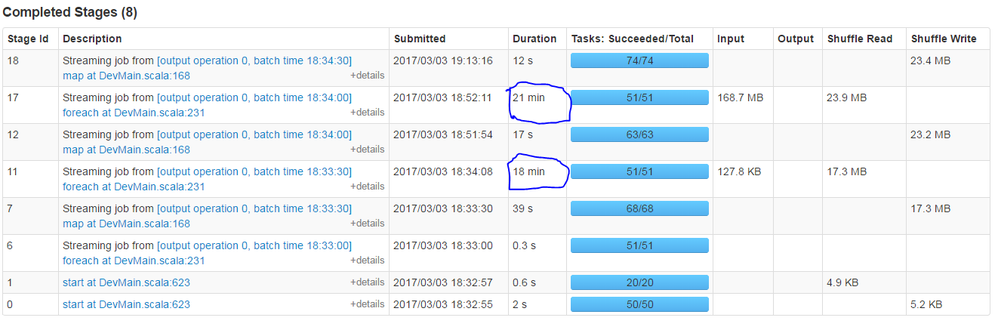

@srowen I don't think the upstream transformations or reading the streams are causing any delays. As highlighted in the screenshot, the only thing that takes minutes is foreachRDD (even though there is no code in it).