Spark SQL Internally

Labels: Apache Spark

Created 05-04-2016 05:04 PM

Hi,

I'm executing TPC queries over Hive tables using Spark SQL as below:

var hiveContext = new org.apache.spark.sql.hive.HiveContext(sc)

var query = hiveContext.sql(" SELECT ...");

query.show

I have learned how to configure and use Spark SQL up to this point. Now I would like to learn how Spark SQL works internally when it executes these queries over Hive tables: things like execution plans, logical and physical plans, and optimization. I want to better understand how Spark SQL decides which execution plan is best, and what it uses to decide.

I have been looking for information about this but have found nothing concrete. Can someone give an overview so I can understand the basics and then look for more detailed information, or do you know of any articles that explain this? Also, what is the command to see the logical and physical plans that Spark SQL uses when it executes the queries?

Created 05-04-2016 08:19 PM

First of all, you can show the EXPLAIN PLAN with this syntax:

spark-sql> EXPLAIN SELECT * FROM mytable WHERE key = 1;

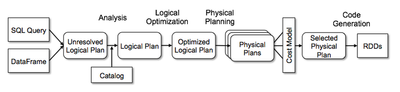

Yes, Spark SQL will always use the Catalyst optimizer. DataFrame operations now use it as well. This is shown in the diagram, where the SQL query (AST parser output) and DataFrames both feed into the Analysis phase of the optimizer.

Also, be aware that there are two types of contexts: SQLContext and HiveContext. HiveContext provides a superset of the functionality of the basic SQLContext. Its additional features include the ability to write queries using the more complete HiveQL parser, access to Hive UDFs, and the ability to read data from Hive tables.
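As a rough sketch of how the logical and physical plans could be inspected from the Scala shell, the snippet below uses `explain(true)` and `queryExecution` on the DataFrame returned by `hiveContext.sql`. It assumes a Spark 1.x `spark-shell` session (where `sc` already exists) and that a Hive table matching the placeholder names `mytable` and `key` from the EXPLAIN example is available; adjust both to your environment.

```scala
// Assumption: run inside spark-shell (Spark 1.x), where `sc` is provided by the shell.
import org.apache.spark.sql.hive.HiveContext

val hiveContext = new HiveContext(sc)

// `mytable` and `key` are placeholder names; the table is assumed to exist in Hive.
val query = hiveContext.sql("SELECT * FROM mytable WHERE key = 1")

// Prints the parsed, analyzed, and optimized logical plans, followed by the physical plan.
query.explain(true)

// The same plans are also reachable as objects through queryExecution.
val qe = query.queryExecution
println(qe.logical)        // parsed (unresolved) logical plan
println(qe.analyzed)       // logical plan after analysis
println(qe.optimizedPlan)  // logical plan after Catalyst's optimization rules
println(qe.executedPlan)   // physical plan selected for execution

// SQL equivalent: EXPLAIN EXTENDED returns the logical plans as well as the physical plan.
hiveContext.sql("EXPLAIN EXTENDED SELECT * FROM mytable WHERE key = 1")
  .collect()
  .foreach(println)
```

Calling `explain()` without an argument prints only the physical plan, which corresponds to what plain `EXPLAIN` shows in the `spark-sql` shell.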

Created 05-04-2016 05:10 PM

I found a nice article by Databricks that explains query execution, optimization, and the logical and physical plans. I hope it helps you.

https://databricks.com/blog/2015/04/13/deep-dive-into-spark-sqls-catalyst-optimizer.html

Created 05-04-2016 07:47 PM

Thanks for your answer. But does Spark SQL always use the Catalyst component? Is it part of Spark SQL? Is it used every time we execute a query? And do you know how to show the logical and physical plans of the queries?

Created 05-04-2016 10:28 PM

Thanks for your answer, now I can see the plans. What about the diagram that appears in the Spark user interface for each job, the DAG Visualization: what is it? Is it the logical plan or the physical plan, or something else? And which diagram were you referring to in your first answer?

Created on 05-05-2016 03:54 PM - edited 08-19-2019 02:25 AM

This is the image showing the phases of the optimizer (from @Rajkumar Singh's link above).

Created 05-06-2016 07:06 PM

Thanks for your help. Do you know what the DAG visualization of the jobs, shown after we execute a query, represents? Does that visualization show the physical plan or the logical plan?