Cloudera Community: Support Questions

Spark gateway not starting

Labels: Apache HBase, Apache Spark

Created on 01-02-2017 01:11 PM - edited 09-16-2022 03:52 AM

Hi All,

I have a 3-node cluster running Cloudera 5.9.

I am new to Spark. I installed it using the Cloudera Manager "Add Service" feature. However, I notice the Spark Gateway is not running on any of the nodes. When I try to start it, I get the error "Command Start is not currently available for execution".

I am not sure why this error occurs. Do I have to choose the "Install Spark JARs" option from the CM UI? I am running Spark on the YARN ResourceManager, i.e. it is not a standalone Spark cluster.

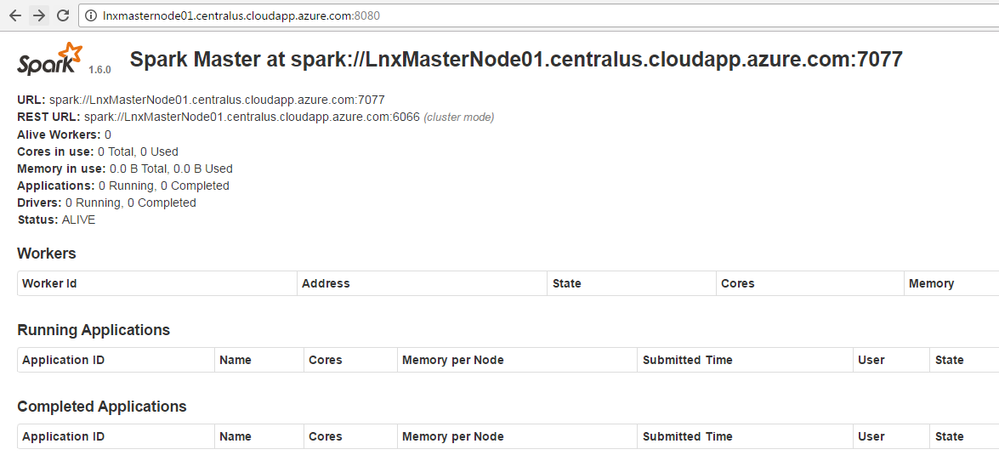

I have ports 7077, 7078, 18080, 18081, 18088 and 4040 open for them. The History Server UI opens, and I am able to launch and use spark-shell.

However, I cannot open the Spark UI at "http://lnxmasternode01.centralus.cloudapp.azure.com:4040/". It gives the error "This site can't be reached".

Please advise what I should do.

    -bash-4.1$ spark-shell
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel).
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 1.6.0
          /_/

    Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_67)
    Type in expressions to have them evaluated.
    Type :help for more information.
    Spark context available as sc (master = yarn-client, app id = application_1483386427562_0003).
    SQL context available as sqlContext.

    scala>

Thanks,

Shilpa

Created 01-02-2017 04:48 PM

Hi Shilpa,

I am assuming that you selected the Spark service from the base CDH 5.9 parcels/packages, rather than fetching it separately and running a standalone Spark cluster alongside the Hadoop cluster.

In any case, the Spark Gateway, like the other gateways (HDFS, YARN, HBase, etc.), only installs the libraries, binaries, and configuration files required to use the command-line tools. In this case those are spark-submit and spark-shell (and possibly the pyspark files as well; I'm not 100% sure). There is no service to start, stop, or monitor. That is why you can't start it and why it is shown gray instead of green.
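Because a gateway only deploys client configuration, one way to check it is to read that configuration on a gateway host. The sketch below assumes Cloudera Manager deploys the Spark client config to `/etc/spark/conf/spark-defaults.conf` (the usual CDH location, but verify it on your hosts); `read_spark_master` is a hypothetical helper, not part of Spark or CM. On a Spark-on-YARN gateway you would expect the printed value to be a YARN master such as `yarn-client`, matching the `master = yarn-client` line in the spark-shell output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the spark.master value from a spark-defaults.conf file.
# Handles "key value" and "key=value" lines (assumption: no line continuations).
read_spark_master() {
  local conf="${1:-/etc/spark/conf/spark-defaults.conf}"  # assumed CDH client-config path
  if [ ! -r "$conf" ]; then
    echo "no readable client config at $conf" >&2
    return 1
  fi
  awk '
    /^[[:space:]]*#/ { next }                            # skip comment lines
    /^[[:space:]]*spark\.master[[:space:]=]/ {
      sub(/^[[:space:]]*spark\.master[[:space:]]*=?[[:space:]]*/, "")
      print
      exit                                               # first match wins
    }
  ' "$conf"
}

# Only runs on a host that actually has the deployed client config.
if [ -r /etc/spark/conf/spark-defaults.conf ]; then
  echo "spark.master = $(read_spark_master)"
fi
```

If the file is missing entirely, the gateway role's client configuration has probably not been deployed to that host yet.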

Under that assumption, the gateway automatically sets up the tools to run in YARN mode. This means that the Spark application runs in YARN instead of in a standalone Spark cluster. Open the ResourceManager (RM) UI: you will find the application listed there and can access the ApplicationMaster (AM) and worker logs. From there you will be directed to the Spark History Server UI (you could go there directly if you wanted, but it is good to get used to the RM interface).
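The same route can be followed from the command line. `yarn application -list -appStates RUNNING` is a standard YARN CLI command and should show the spark-shell session while it is open. The helper `rm_proxy_url` is hypothetical and assumes the RM web UI is on its default port 8088 with the web proxy running inside the RM; in that setup a running application's UI is served under `/proxy/<application id>/` on the RM host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the RM web-proxy URL for a running YARN application.
# Assumes the default RM web port (8088) and that the web proxy runs inside the RM.
rm_proxy_url() {
  local rm_host="$1" app_id="$2" port="${3:-8088}"
  case "$app_id" in
    application_*_*) ;;                  # e.g. application_1483386427562_0003
    *) echo "not a YARN application id: $app_id" >&2; return 1 ;;
  esac
  printf 'http://%s:%s/proxy/%s/\n' "$rm_host" "$port" "$app_id"
}

# List running applications; skipped on hosts without the YARN client.
if command -v yarn >/dev/null 2>&1; then
  yarn application -list -appStates RUNNING
fi
```

For the session in the question, `rm_proxy_url <rm-host> application_1483386427562_0003` would print the URL to try, with `<rm-host>` replaced by your ResourceManager's hostname.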

Created 01-03-2017 08:56 AM

Thanks a lot @mbigelow. I thought earlier that if it is in YARN mode then those gateways should be running, hence I was trying to start them. I can see the Spark entries in the RM UI because I opened spark-shell.

However, just one question: in my Spark directory (basically spark/sbin) I can see various start and stop scripts for the Spark master, slave, history server, etc. I started the Spark master from the command line and now the UI opens. Do you think I did the right thing, or is it not required? Also, if I run the start-slave script, the worker UI does not open.

Can you help me again, please?

Thanks,

Shilpa

Created 01-03-2017 09:30 AM

Those scripts, master and slave, are for running a standalone Spark cluster. They are not needed if you are running Spark on YARN. The preference is to run Spark on YARN so you don't have to divvy up the cluster resources.
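If the standalone master and worker were started by hand and you want to return to a YARN-only setup, Spark 1.x ships matching stop scripts in `sbin` (`stop-slave.sh`, `stop-master.sh`). The sketch assumes `SPARK_HOME` points at the Spark install; on a CDH parcel install that is commonly `/opt/cloudera/parcels/CDH/lib/spark`, but check your own layout. `stop_standalone_daemons` is a hypothetical wrapper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop a hand-started Spark standalone worker, then the master.
# Not needed when Spark runs on YARN; only undoes start-slave.sh / start-master.sh.
stop_standalone_daemons() {
  # Assumed default location; pass the Spark home explicitly if yours differs.
  local spark_home="${1:-${SPARK_HOME:-/opt/cloudera/parcels/CDH/lib/spark}}"
  local script
  for script in stop-slave.sh stop-master.sh; do
    if [ ! -x "$spark_home/sbin/$script" ]; then
      echo "missing $spark_home/sbin/$script" >&2
      return 1
    fi
  done
  # Stop the worker first so it does not keep trying to reconnect to the master.
  "$spark_home/sbin/stop-slave.sh" && "$spark_home/sbin/stop-master.sh"
}
```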

The history script is for the Spark History Server. You can start and stop that through CM.

My best guess regarding the UI not showing up when you start the slave is that the master was no longer running. Were you able to launch spark-shell afterwards but not access the UI?

Created on 01-03-2017 10:20 AM - edited 01-03-2017 10:22 AM

Yes, previously (without starting the master from the script) I was able to work in spark-shell but could not open the master UI. After I started the master through the script, I was able to open it.

But as you said, we don't need to do this, since it is only needed in standalone Spark mode. I will shut it down. What do you say?

Thanks,

Shilpa

Created 01-03-2017 10:49 AM

Good luck

Created 01-03-2017 10:59 AM

I have another question, if you could help. This is why I need Spark: https://community.cloudera.com/t5/Advanced-Analytics-Apache-Spark/Run-SparkR-or-R-package-on-my-Clou...