Spark job fails in cluster mode.

- Labels: Apache Spark

Created on 08-11-2017 10:15 AM - edited 09-16-2022 05:05 AM


Hi All,

I have been trying to submit the Spark job below in cluster mode through a bash shell.

Submitting in client mode works perfectly fine. But when I switch to cluster mode, the job fails with an error saying no app file is present.

The app file it refers to is the missing application.conf.

spark-submit \
--master yarn \
--deploy-mode cluster \
--class myCLASS \
--properties-file /home/abhig/spark.conf \
--files /home/abhig/application.conf \
--conf "spark.executor.extraJavaOptions=-Dconfig.resource=application.conf -Dlog4j.configuration=/home/abhig/log4.properties" \
--driver-java-options "-Dconfig.file=/home/abhig/application.conf -Dlog4j.configuration=/home/abhig/log4.properties" \
/local/project/gateway/mypgm.jar

I followed a similar post, but the solution mentioned there is still not clear to me.

I even tried

--files $CONFIG_FILE#application.conf

but it still doesn't work.

Any help will be appreciated.

Thanks

AB

Created 08-14-2017 08:00 AM


Hi All,

Thanks @Yuexin Zhang for the response.

I figured out the solution for this. Below is the actual submit command that worked for me.

The catch here is that when we submit in cluster mode, Spark uploads the file to a staging directory on HDFS.

The path and name of the file on HDFS are then different from what the program expects.

To make the file available to the program, you have to give it an alias with '#', as in the command below (that's the only trick).

Then, everywhere you need to refer to that file on the spark-submit command, just use the alias.

The links below, which I referred to, cover the complete walkthrough and how to reach the solution.

Issue also discussed here - https://community.cloudera.com/t5/Advanced-Analytics-Apache-Spark/Spark-File-not-found-error-works-f... - (it didn't actually help me resolve this, so I posted separately)

Section "Important notes" in http://spark.apache.org/docs/latest/running-on-yarn.html (you kind of have to read between the lines)

Blog explaining the reason - http://progexc.blogspot.com/2014/12/spark-configuration-mess-solved.html (Nice blog 🙂)

spark-submit \
--master yarn \
--deploy-mode cluster \
--class myCLASS \
--properties-file /home/abhig/spark.conf \
--files /home/abhig/application.conf#application.conf,/home/abhig/log4.properties#log4j \
--conf "spark.executor.extraJavaOptions=-Dconfig.resource=application.conf -Dlog4j.configuration=log4j" \
--conf spark.driver.extraJavaOptions="-Dconfig.file=application.conf -Dlog4j.configuration=log4j" \
/local/project/gateway/mypgm.jar
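If you have several files to ship, the '#' alias trick described above can be scripted. Here is a rough bash sketch; `files_arg` is a hypothetical helper name (not part of Spark), and it simply assumes you want each file aliased to its own base name. Adjust it if you prefer a different alias, such as `log4j` in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Spark feature): build a comma-separated value
# for --files where every local path gets a "#alias" suffix. Here the alias
# is assumed to be the file's base name.
files_arg() {
  local out="" path
  for path in "$@"; do
    # Append "path#basename", separated by commas after the first entry.
    out="${out:+$out,}${path}#$(basename "$path")"
  done
  printf '%s\n' "$out"
}

# Example with the paths from this thread.
FILES=$(files_arg /home/abhig/application.conf /home/abhig/log4.properties)
echo "--files $FILES"
```

The resulting value can then be passed as `--files "$FILES"`, and the Java options only need to mention the alias names.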

Hope this helps the next person facing a similar issue!

Created on 08-13-2017 03:54 AM - edited 08-13-2017 04:04 AM


Two points:

1) In cluster mode, you should use "--conf spark.driver.extraJavaOptions=" instead of "--driver-java-options".

2) You only provide application.conf in the --files list; log4.properties is not there. So either this log4.properties must already be distributed on each YARN node, or you should add it to the --files list and reference it with "-Dlog4j.configuration=./log4.properties".

For cluster mode, the full command should look like the following:

spark-submit \
--master yarn \
--deploy-mode cluster \
--class myCLASS \
--properties-file /home/abhig/spark.conf \
--files /home/abhig/application.conf,/home/abhig/log4.properties \
--conf "spark.executor.extraJavaOptions=-Dconfig.resource=application.conf -Dlog4j.configuration=./log4.properties" \
--conf spark.driver.extraJavaOptions="-Dconfig.file=./application.conf -Dlog4j.configuration=./log4.properties" \
/local/project/gateway/mypgm.jar
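Point 2 is easy to get wrong by hand, so a small pre-submit check may help. The bash sketch below is only an illustration: `is_shipped` is a hypothetical function name, and it assumes a shipped file shows up in the container's working directory under its alias (the part after '#') if one is given, otherwise under its base name.

```shell
#!/usr/bin/env bash
# Hypothetical check (not part of Spark): succeed if the relative name used
# in a -D option matches one of the entries in the --files list.
is_shipped() {
  local name="${1#./}" files="$2" entry shipped
  local IFS=','            # split the --files value on commas
  for entry in $files; do
    case "$entry" in
      *'#'*) shipped="${entry##*#}" ;;  # alias after '#'
      *)     shipped="${entry##*/}" ;;  # otherwise the base name
    esac
    [ "$shipped" = "$name" ] && return 0
  done
  return 1
}

# Example with the values from the command above.
FILES="/home/abhig/application.conf,/home/abhig/log4.properties"
for ref in ./application.conf ./log4.properties; do
  if is_shipped "$ref" "$FILES"; then
    echo "ok: $ref"
  else
    echo "missing from --files: $ref"
  fi
done
```

Running this before spark-submit would flag a log4.properties that is referenced in the Java options but never listed in --files.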

Created 03-27-2021 05:34 AM


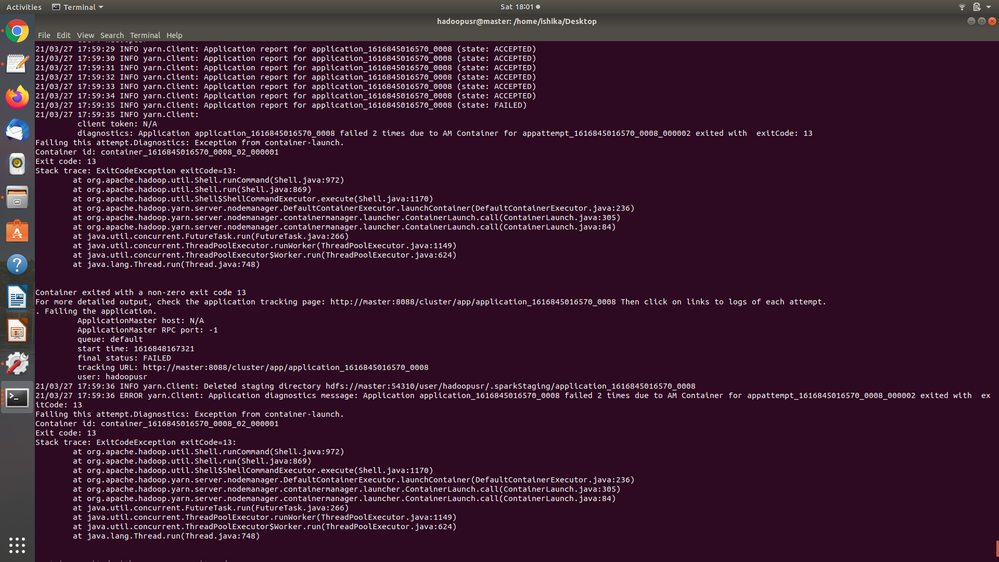

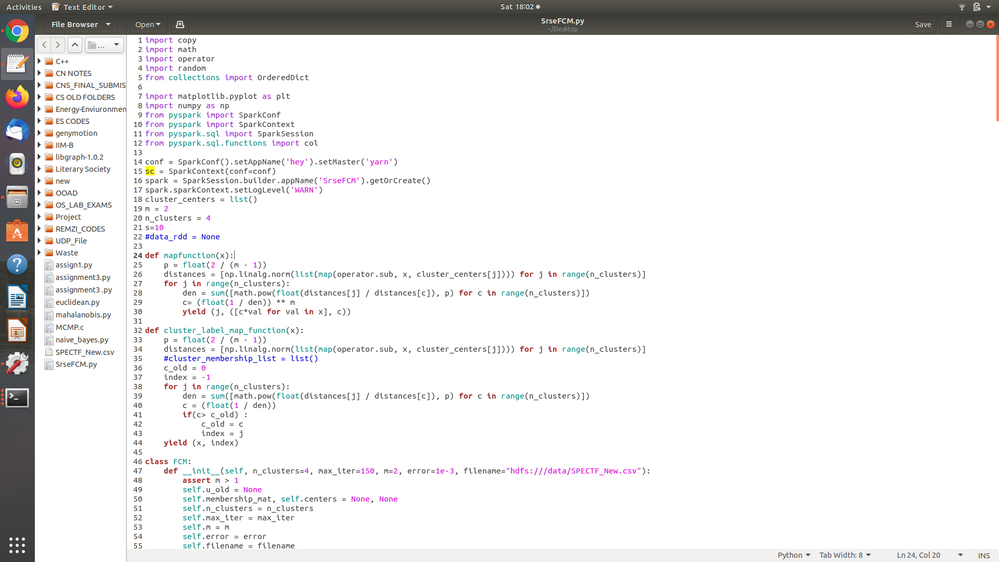

Hey @ABaaya,

Could you help me with a similar issue, in which the code accesses a file that we have already uploaded to HDFS? What should the spark-submit command look like to run such a case in cluster mode?

I have attached the error snippet and the code snippet where we are trying to access the file.

Kindly suggest.

Thank you.

Created 03-29-2021 12:36 AM


@ishika as this is an older post, you would have a better chance of receiving a resolution by starting a new thread. This will also be an opportunity to provide details specific to your environment that could aid others in assisting you with a more accurate answer to your question. You can link this thread as a reference in your new post.

Regards,

Vidya Sargur, Community Manager
