Spark shuffle spill (Memory)

Labels: Apache Spark

Created on 07-04-2018 11:58 AM - edited 08-18-2019 01:08 AM

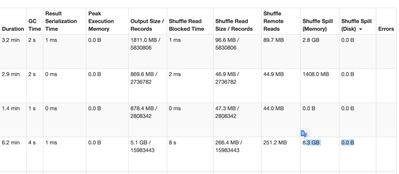

Running jobs with Spark 2.2, I noticed in the Spark web UI that spill occurs for some tasks:

I understand that on the reduce side, the reducer fetched the needed partitions (shuffle read), then performed the reduce computation using the execution memory of the executor. As there was not enough execution memory, some data was spilled.

My questions:

- Am I correct?

- Where is the data spilled? The Spark web UI reports that some data was spilled to memory (Shuffle spill (memory)), but nothing was spilled to disk (Shuffle spill (disk)).

Thanks in advance for your help

Created 07-04-2018 01:54 PM

Shuffle data is serialized over the network, so when it is deserialized it is spilled to memory, and this metric is aggregated into the Shuffle spill (memory) value that you see in the UI.

Please review this:

http://apache-spark-user-list.1001560.n3.nabble.com/What-is-shuffle-spill-to-memory-td10158.html

"Shuffle spill (memory) is the size of the deserialized form of the data in memory at the time when we spill it, whereas shuffle spill (disk) is the size of the serialized form of the data on disk after we spill it. This is why the latter tends to be much smaller than the former. Note that both metrics are aggregated over the entire duration of the task (i.e. within each task you can spill multiple times)."
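To make the quoted definition concrete, here is a minimal sketch in plain Python (not Spark code) of how two such counters could be accumulated. Everything in it is an assumption made for illustration: the `SpillMetrics` class, the `spill` and `run_task` helpers, the record-count threshold, and the use of `pickle` plus `zlib` as the serialized form. It only shows that each spill adds the in-memory (deserialized) size to one counter and the serialized size to the other, and that both accumulate across several spills within a task.

```python
import pickle
import sys
import zlib


class SpillMetrics:
    """Toy per-task counters (hypothetical names, not Spark's classes)."""

    def __init__(self):
        self.memory_bytes_spilled = 0  # deserialized size in memory at spill time
        self.disk_bytes_spilled = 0    # serialized size after the spill
        self.spill_count = 0


def estimate_in_memory_size(records):
    # Rough estimate of the deserialized footprint: the list plus each object.
    return sys.getsizeof(records) + sum(sys.getsizeof(r) for r in records)


def spill(records, metrics):
    """Serialize and compress a buffer, then update both counters."""
    payload = zlib.compress(pickle.dumps(records))
    metrics.memory_bytes_spilled += estimate_in_memory_size(records)
    metrics.disk_bytes_spilled += len(payload)
    metrics.spill_count += 1
    return payload


def run_task(records, buffer_limit):
    """Buffer records and spill each time the buffer reaches buffer_limit."""
    metrics = SpillMetrics()
    buffer = []
    for record in records:
        buffer.append(record)
        if len(buffer) >= buffer_limit:
            spill(buffer, metrics)
            buffer = []
    return metrics


records = ["key-%05d" % (i % 100) for i in range(10_000)]
metrics = run_task(records, buffer_limit=2_500)
print(metrics.spill_count, metrics.memory_bytes_spilled, metrics.disk_bytes_spilled)
```

In this toy run the task spills four times, and the accumulated in-memory figure is larger than the serialized one, which matches the "latter tends to be much smaller than the former" remark in the quote.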

HTH

*** If you found this answer addressed your question, please take a moment to login and click the "accept" link on the answer.

Created 07-04-2018 03:24 PM

@Felix thanks for your input.

"Shuffle data is serialized over the network so when deserialized it's spilled to memory"

==> From my understanding, operators spill data to disk if it does not fit in memory. So it's not directly related to the shuffle process. Furthermore, I have plenty of jobs with shuffles where no data spills.

"Shuffle spill (memory) is the size of the deserialized form of the data in memory at the time when we spill it, whereas shuffle spill (disk) is the size of the serialized form of the data on disk after we spill it. This is why the latter tends to be much smaller than the former"

==> In the present case the size of the shuffle spill (disk) is null. So I am still unsure of what happened to the "shuffle spilled (memory) data"

Created 02-23-2019 05:57 PM

I agree with 1. It shouldn't be called just Shuffle Spill; the title should be more generic than that. For 2, I think it's the task's maximum deserialized data in memory that it had used up to some point, or over its whole run if the task has finished. It could since have been GC'd from that executor.

Created 02-23-2019 06:00 PM

For example, in one of my DAGs, all those tasks do is sortWithinPartitions (so no shuffle), yet they still spill data to disk because the partition size is huge and Spark resorts to an external merge sort. As a result, I have a high Shuffle Spill (Memory) and also some Shuffle Spill (Disk). There is no shuffle here, but on the other hand you can argue that the sorting process moves data around in order to sort, so it's a kind of internal shuffle 🙂
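As an illustration of the general external merge sort idea mentioned here, the following is a small plain-Python sketch. It is not Spark's sorter: the function names, the `max_in_memory` record-count threshold, and the `pickle`-based run files are all assumptions chosen for the example. It shows how a sort with no shuffle involved can still write sorted runs to disk when the input does not fit in the in-memory buffer, and writes nothing when it does fit.

```python
import heapq
import os
import pickle
import tempfile


def write_run(sorted_chunk, directory):
    """Write one sorted run to a temp file; return (path, bytes on disk)."""
    fd, path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as handle:
        for item in sorted_chunk:
            pickle.dump(item, handle)
    return path, os.path.getsize(path)


def read_run(path):
    """Stream items back from a run file one at a time."""
    with open(path, "rb") as handle:
        while True:
            try:
                yield pickle.load(handle)
            except EOFError:
                return


def external_sort(items, max_in_memory):
    """Sort items, spilling a sorted run each time the buffer fills up."""
    disk_bytes = 0
    with tempfile.TemporaryDirectory() as directory:
        runs, chunk = [], []
        for item in items:
            chunk.append(item)
            if len(chunk) >= max_in_memory:
                path, size = write_run(sorted(chunk), directory)
                runs.append(path)
                disk_bytes += size
                chunk = []
        # Merge the on-disk runs with whatever is still buffered in memory.
        streams = [read_run(path) for path in runs] + [sorted(chunk)]
        # Materialized as a list only to keep the demo simple.
        result = list(heapq.merge(*streams))
    return result, disk_bytes


data = [(i * 7919) % 1000 for i in range(1000)]
spilled_result, spilled_bytes = external_sort(data, max_in_memory=100)
fits_result, fits_bytes = external_sort(data, max_in_memory=5000)
print(spilled_bytes > 0, fits_bytes)
```

With the small buffer the sort writes runs to disk; with the large buffer the same input is sorted entirely in memory and the disk counter stays at zero.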