Support Questions

- Cloudera Community

- Support

- Support Questions

- Specifying delimiters for Hive table with nested c...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Specifying delimiters for Hive table with nested complex type columns

- Labels:

-

Apache Hive

Created 06-09-2017 08:29 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a Hive table whose columns are nested complex types – columns are ‘array of arrays of structs’ and ‘struct of arrays and structs’. I am writing the data with the delimiters to the underlying Hadoop file directly. Now the delimiters can occur in my data and I need to know how to escape it.

1) In the table creation statement, only one delimiter can be specified for collections along with the escape character. It does not allow me to specify multiple delimiters (I get syntax error, if I tried to). For e.g

hive>create table delimiter_test( c1 struct< l1a:struct<l2a:string, l2b:string>, l1b:struct<l2c:array<string>, l2d:string> >, c2 array<struct<l1a:struct<l2a:string, l2b:string>, l1b:string>> ) row format delimited fields terminated by 'a' collection items terminated by 'b', 'c', 'd', 'e' ESCAPED BY '\\' STORED AS TEXTFILE;

NoViableAltException(10@[1757:103: ( tableRowFormatMapKeysIdentifier )?]) at org.antlr.runtime.DFA.noViableAlt(DFA.java:158) at org.antlr.runtime.DFA.predict(DFA.java:144) at org.apache.hadoop.hive.ql.parse.HiveParser.rowFormatDelimited(HiveParser.java:34142) at org.apache.hadoop.hive.ql.parse.HiveParser.tableRowFormat(HiveParser.java:34377) at org.apache.hadoop.hive.ql.parse.HiveParser.createTableStatement(HiveParser.java:5003) at org.apache.hadoop.hive.ql.parse.HiveParser.ddlStatement(HiveParser.java:2364) at org.apache.hadoop.hive.ql.parse.HiveParser.execStatement(HiveParser.java:1586) at org.apache.hadoop.hive.ql.parse.HiveParser.statement(HiveParser.java:1062) at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:201) at org.apache.hadoop.hive.ql.parse.ParseDriver.parse(ParseDriver.java:166) at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:404) at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:305) at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1119) at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1167) at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1055) at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1045) at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:207) at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:159) at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:370) at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:757) at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:675) at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:615) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:498) at org.apache.hadoop.util.RunJar.run(RunJar.java:221) at org.apache.hadoop.util.RunJar.main(RunJar.java:136) FAILED: ParseException line 1:287 cannot recognize input near ',' ''c'' ',' in serde properties specification

2) In the table creation statement, if I don’t specify the delimiters and go with the default ^A, ^B, ^C etc. characters then the default escape character ‘\’ does not have any effect. If my data has any of the default delimiters, on retrieval using Hive CLI, it becomes garbage.

Any suggestions on how I can handle this issue?

Created 06-11-2017 08:48 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you provide your sample input entry.?

Created on 06-12-2017 03:56 PM - edited 08-17-2019 09:58 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I created the table using the statement (this statement uses the default delimiters, ^A, ^B etc.):

create table delimiter_test( c1 struct< l1a:struct<l2a:string, l2b:string>, l1b:struct<l2c:array<string>, l2d:string> >, c2 array<struct<l1a:struct<l2a:string, l2b:string>, l1b:string>> ) row format delimited STORED AS TEXTFILE;

I inserted one row using the statement:

insert into table delimiter_test select named_struct("l1a",named_struct("l2a","111", "l2b","222"), "l1b",named_struct("l2c",array("333", "333"), "l2d","444")), array(named_struct("l1a",named_struct("l2a","555", "l2b","666"), "l1b","777"), named_struct("l1a",named_struct("l2a","888", "l2b","999"), "l1b","000")) from z_dummy;

Note the above sample data does not have any default delimiters in it.

Next, I go to the underlying HDFS file and edit it, so that the data has default delimiters. When I put a delimiter in the data, I also put an escape char \ in front of it. I have attached the screenshots of the data in hex, before and after editing it.

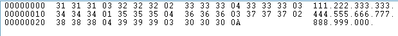

Contents of file before editing to have a delimiter in the data.

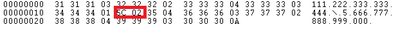

Contents of file after editing to have a delimiter in the data.

Once I have default delimiters in the data, it becomes unreadable (escaping didnt help)

Created 06-12-2017 01:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If your sample data is like this: 1,abc,40000,maths$physics$chemistry,99@90@87,pf#500$epf#200,hyd$ap$500001 then you can use the below query.

create table struct_tables(id int,name string,sal int,sub array<string>, pfstatus map<string,int>,address struct<city:string,state:string,pincode:bigint>) row format delimited fields terminated by ',' collection items terminated by '$' map keys terminated by '#';

In general FILELDS TERMINATED BY does not support multi-character delimiters for collections in Hive. Hence If your data is in this format 1,abc,40000,maths$physics$chemistry,99@90@87,pf#500$epf#200,hyd$ap$500001 then you need to replace either one of the delimiters with one (i.e either # with @ or @ with #) and then you can use the create statement.

Also refer the below link for using multiple delimiters :

https://cwiki.apache.org/confluence/display/Hive/MultiDelimitSerDe

http://hadoopinrealworld.com/how-to-use-multi-character-delimiter-in-a-hive-table/

Hope this info helps you..