Split JSON after Convert Record (CSVtoJSON) creating 10,000 duplicate split records

Created on 12-09-2017 03:16 PM - edited 08-17-2019 07:20 PM


Sample data:

1,Michael,Jackson

2,Jim,Morrisson

3,John,Lennon

4,Freddie,Mercury

5,Elton,John

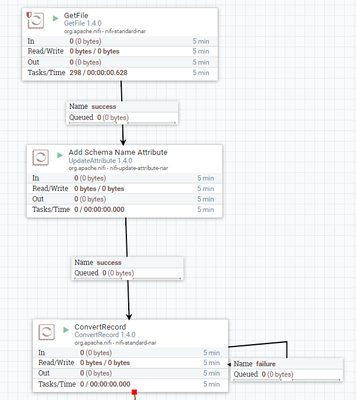

Refer to image CSVtoJSON: the JSON is created successfully.

Result after ConvertRecord (CSVtoJSON):

[ { "id" : 1, "firstName" : "Michael", "lastName" : "Jackson" },

{ "id" : 2, "firstName" : "Jim", "lastName" : "Morrisson" },

{ "id" : 3, "firstName" : "John", "lastName" : "Lennon" },

{ "id" : 4, "firstName" : "Freddie", "lastName" : "Mercury" },

{ "id" : 5, "firstName" : "Elton", "lastName" : "John" } ]
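To make the shape of the data concrete, here is a minimal Python sketch of what ConvertRecord does in this flow: each headerless CSV row becomes one record, and the whole FlowFile becomes a single JSON array. The schema (`id` as an integer, `firstName`, `lastName` as strings) is an assumption read off the output above, and `csv_to_json_array` is a hypothetical helper, not a NiFi API.

```python
import csv
import io
import json

# Sample data from the question (no header row).
SAMPLE_CSV = """1,Michael,Jackson
2,Jim,Morrisson
3,John,Lennon
4,Freddie,Mercury
5,Elton,John
"""

# Assumed record schema: names and types are inferred from the ConvertRecord
# output above, not taken from the actual CSVReader configuration.
SCHEMA = [("id", int), ("firstName", str), ("lastName", str)]


def csv_to_json_array(text):
    """Turn headerless CSV rows into one JSON array of records."""
    records = []
    for row in csv.reader(io.StringIO(text)):
        if not row:  # skip blank lines
            continue
        # Pair each column with its (name, type) from the assumed schema.
        records.append({name: cast(value) for (name, cast), value in zip(SCHEMA, row)})
    return json.dumps(records)


if __name__ == "__main__":
    print(csv_to_json_array(SAMPLE_CSV))
```

The point that matters for the rest of the thread is that the result is one FlowFile holding one array of five records, so SplitJson with `$.*` should produce five splits, not thousands.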

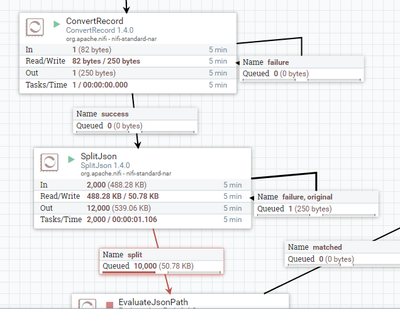

Connecting SplitJson to the ConvertRecord processor with JsonPath Expression = $.* creates 10,000 splits in the queue.

Refer to image SplitJSON10000splits.

I need to split the JSON array into individual JSON records and apply some transformations to those records.

Created on 12-09-2017 04:39 PM - edited 08-17-2019 07:20 PM


You have connected both the failure and original relationships of SplitJson back to the same processor.

The original relationship is documented as: "The original FlowFile that was split into segments. If the FlowFile fails processing, nothing will be sent to this relationship." In other words, when the split succeeds, your original, unsplit FlowFile is transferred to this relationship.

Example of the original FlowFile:

This message will be the original FlowFile:

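[{"id":1,"fname":"Michael","lname":"Jackson"},{"id":2,"fname":"Jim","lname":"Morrisson"},{"id":3,"fname":"John","lname":"Lennon"},{"id":4,"fname":"Freddie","lname":"Mercury"},{"id":5,"fname":"Elton","lname":"John"}]

In your case, the original relationship loops back to SplitJson, so the same array is split again on every pass and generates duplicates. Each pass adds another five splits, and the queue keeps growing until back pressure stops SplitJson; 10,000 FlowFiles is NiFi's default back pressure object threshold for a connection, which is likely why the count stops there.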

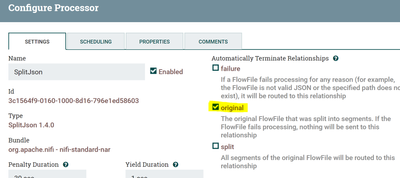
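The feedback loop can be modelled with a small Python simulation. This is a sketch, not NiFi code: `split_json` is a hypothetical stand-in for SplitJson with `$.*` on a top-level array, the 10,000 threshold is assumed to be the default back pressure object threshold on the split queue, and downstream processors are assumed not to drain that queue.

```python
import json

# Content of the original FlowFile (the ConvertRecord output).
ORIGINAL = json.dumps([
    {"id": 1, "firstName": "Michael", "lastName": "Jackson"},
    {"id": 2, "firstName": "Jim", "lastName": "Morrisson"},
    {"id": 3, "firstName": "John", "lastName": "Lennon"},
    {"id": 4, "firstName": "Freddie", "lastName": "Mercury"},
    {"id": 5, "firstName": "Elton", "lastName": "John"},
])

# Assumption: back pressure stops SplitJson once the "split" queue holds
# 10,000 FlowFiles (NiFi's default object threshold for a connection).
BACK_PRESSURE_THRESHOLD = 10_000


def split_json(content):
    """Hypothetical stand-in for SplitJson with JsonPath $.* on a top-level
    array: one output FlowFile per array element."""
    return [json.dumps(element) for element in json.loads(content)]


def run_flow(loop_original, threshold=BACK_PRESSURE_THRESHOLD):
    """Return the contents of the "split" queue once SplitJson stops running."""
    incoming = [ORIGINAL]  # queue feeding SplitJson
    split_queue = []       # queue on the "split" relationship
    while incoming and len(split_queue) < threshold:
        flowfile = incoming.pop(0)
        split_queue.extend(split_json(flowfile))
        if loop_original:
            # "original" routed back into SplitJson: the same array is split again.
            incoming.append(flowfile)
        # Otherwise "original" is auto-terminated and the FlowFile is dropped.
    return split_queue


if __name__ == "__main__":
    looped = run_flow(loop_original=True)
    print(len(looped), "splits,", len(set(looped)), "distinct")
    print(len(run_flow(loop_original=False)), "splits")
```

With the loop in place, the queue fills to the threshold with 2,000 copies of the same five records; with original auto-terminated, exactly five splits are produced.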

To resolve this issue, auto-terminate the original relationship:

- Right-click the SplitJson processor and choose Configure.

- Go to the Settings tab.

- Select the checkbox next to the original relationship.

- Click the Apply button at the bottom right of the dialog.

Auto terminate original Relationship:-

Splitjson Configs:-

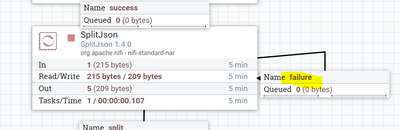

As you can see above screenshot only failure relationship is loop back to the processor and we have auto terminated original relationship.
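If you manage the flow through the NiFi REST API instead of the UI, the same setting can be applied with a `PUT` to the processor endpoint. The Python sketch below only builds the request; it assumes the `ProcessorEntity` shape (`revision` plus `component.config.autoTerminatedRelationships`). The URL, processor id and revision number are hypothetical placeholders. It also assumes the processor is stopped and that the original relationship has already been removed from the looped connection. The list you send is assumed to replace the current set, so include every relationship you want auto-terminated.

```python
import json
import urllib.request


def build_auto_terminate_request(base_url, processor_id, revision_version,
                                 relationships=("original",)):
    """Build (but do not send) a PUT request that sets the processor's
    auto-terminated relationships."""
    payload = {
        # NiFi uses optimistic locking: send the processor's current revision.
        "revision": {"version": revision_version},
        "component": {
            "id": processor_id,
            "config": {"autoTerminatedRelationships": list(relationships)},
        },
    }
    return urllib.request.Request(
        url=f"{base_url}/processors/{processor_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    # Hypothetical values: substitute your own NiFi URL, processor id and
    # current revision version (returned by GET .../processors/<id>).
    req = build_auto_terminate_request(
        "http://localhost:8080/nifi-api", "split-json-processor-id", 0)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req)
```

Building the request separately from sending it keeps the sketch runnable without a NiFi instance and lets you inspect the JSON body before applying it.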

Created 12-09-2017 05:13 PM


@shu Thanks. It worked.