SplitJson for nested json content

Labels: Apache NiFi

Created 11-25-2022 02:41 AM

Hello, unfortunately I couldn't find an answer to this question, although it seems simple enough. I have a JSON file in the format below, and I want to generate a separate flowfile for each value in NestedKey2, so that each flowfile contains a single value:

My input:

{
  "Key1" : [ {
    "NestedKey1" : "Value1",
    "NestedKey2" : [ "Value2", "Value3", "Value4", "Value5" ],
    "NestedKey3" : "Value6"
  } ]
}

Desired output:

Flowfile 1:

{
  "Key1" : [ {
    "NestedKey1" : "Value1",
    "NestedKey2" : "Value2",
    "NestedKey3" : "Value6"
  } ]
}

Flowfile 2:

{
  "Key1" : [ {
    "NestedKey1" : "Value1",
    "NestedKey2" : "Value3",
    "NestedKey3" : "Value6"
  } ]
}

...

and so on. Thanks for any suggestions.
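To make the requested transformation concrete, here is a minimal Python sketch of the split described above. It is not a NiFi configuration; the function name `explode_nested_array` and its parameters are made up for illustration, and it assumes every entry under `Key1` holds a list in `NestedKey2`.

```python
import copy
import json


def explode_nested_array(doc, outer_key="Key1", array_key="NestedKey2"):
    """Return one copy of doc per value found in doc[outer_key][i][array_key].

    Hypothetical helper for illustration only. If outer_key holds several
    entries, the entries other than the one being split keep their arrays.
    """
    results = []
    for index, entry in enumerate(doc[outer_key]):
        for value in entry[array_key]:
            single = copy.deepcopy(doc)  # leave the input untouched
            single[outer_key][index][array_key] = value
            results.append(single)
    return results


source = {
    "Key1": [
        {
            "NestedKey1": "Value1",
            "NestedKey2": ["Value2", "Value3", "Value4", "Value5"],
            "NestedKey3": "Value6",
        }
    ]
}

flowfiles = explode_nested_array(source)
for number, content in enumerate(flowfiles, start=1):
    print(f"Flowfile {number}:")
    print(json.dumps(content, indent=2))
```

Each element of `flowfiles` corresponds to one of the desired output flowfiles, with the surrounding `Key1` structure preserved.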

Created 11-25-2022 05:11 AM

Hi @Fredi ,

The processor 'ForkRecord' is exactly what you are looking for. However, I am currently trying to configure it to split exactly as you have described and am having trouble getting it to work...

I am attempting to use the 'extract' mode, with a fork path of "/Key1[*]/NestedKey2" which, according to the documentation, is supposed to do exactly what you described (split on nested json). For some reason the output is coming out empty though. Perhaps someone more familiar with the processor could reply and explain how to use it correctly for your use-case.

Created 11-25-2022 09:07 AM

I just tried it out of the box with the example mentioned in documentation:

It seems to produce the correct result only when you provide the schema for the record writer service. Not sure if that is related, but when I tried without providing a schema in the JSON record writer, it gave me all the values as null!

Created 11-25-2022 10:25 AM

That's correct. I believe my earlier tests were failing because I used the "inherit record schema" setting, which obviously wouldn't work on the writer if the schema changes after forking.

Here is an Avro schema I generated that should describe the output exactly as @Fredi described (generated using this website). However, even when I use this schema in my record writer, the output still comes out empty.

{
  "name": "MyClass",
  "type": "record",
  "namespace": "myNamespace",
  "fields": [
    {
      "name": "Key1",
      "type": {
        "type": "array",
        "items": {
          "name": "Key1_record",
          "type": "record",
          "fields": [
            {
              "name": "NestedKey1",
              "type": "string"
            },
            {
              "name": "NestedKey2",
              "type": "string"
            },
            {
              "name": "NestedKey3",
              "type": "string"
            }
          ]
        }
      }
    }
  ]
}

I believe at this point the challenge is simply writing an accurate Avro schema for the output data.

Created 11-25-2022 11:31 AM

Hi @Green_

After further investigation, I found that the result comes out blank because we were missing a point in the processor description itself:

"..The user must specify at least one Record Path, as a dynamic property, pointing to a field of type ARRAY containing RECORD objects..."

Since the values in the array are plain values and not records, it's probably not working as expected. When I make the input look like the example below, it works with the path specified:

{
  "Key1": [
    {
      "NestedKey1": "Value1",
      "NestedKey2": [
        {
          "nestedValue": "Value2"
        },
        {
          "nestedValue": "Value3"
        },
        {
          "nestedValue": "Value4"
        },
        {
          "nestedValue": "Value5"
        }
      ],
      "NestedKey3": "Value6"
    }
  ]
}

As a suggestion, if that works for @Fredi: use a Jolt transformation to convert the array into records as seen above, and then use the ForkRecord processor to achieve the desired result. The schema for the JSON record writer can be as simple as the following:

{
  "type": "record",
  "name": "TestObject",
  "namespace": "ca.dataedu",
  "fields": [{
    "name": "NestedKey1",
    "type": ["null", "string"],
    "default": null
  }, {
    "name": "NestedKey3",
    "type": ["null", "string"],
    "default": null
  }, {
    "name": "nestedValue",
    "type": ["null", "string"],
    "default": null
  }]
}

Hope that helps.

Thanks
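The two steps suggested in this reply (wrap each plain value in a small record, then fork on the nested array) can be emulated in plain Python to see what shape of data each step is expected to produce. This is only a rough sketch: the function names are hypothetical, it is neither a Jolt spec nor NiFi's implementation, and the flattened result is an assumption based on the three fields in the writer schema above rather than verified ForkRecord output.

```python
import copy


def wrap_values_as_records(doc, outer_key="Key1", array_key="NestedKey2",
                           field="nestedValue"):
    """Turn each plain value in the nested array into a one-field record.

    Hypothetical stand-in for the restructuring the Jolt step is meant to do.
    """
    wrapped = copy.deepcopy(doc)  # leave the input untouched
    for entry in wrapped[outer_key]:
        entry[array_key] = [{field: value} for value in entry[array_key]]
    return wrapped


def fork_with_parent_fields(doc, outer_key="Key1", array_key="NestedKey2"):
    """Emit one flat record per nested record, copying the sibling fields.

    Assumed behaviour: each child record is merged with the other fields
    of the entry that contained it.
    """
    records = []
    for entry in doc[outer_key]:
        parent = {k: v for k, v in entry.items() if k != array_key}
        for child in entry[array_key]:
            records.append({**parent, **child})
    return records


source = {
    "Key1": [
        {
            "NestedKey1": "Value1",
            "NestedKey2": ["Value2", "Value3", "Value4", "Value5"],
            "NestedKey3": "Value6",
        }
    ]
}

restructured = wrap_values_as_records(source)
records = fork_with_parent_fields(restructured)
for record in records:
    print(record)
```

Under these assumptions, every record carries `NestedKey1`, `NestedKey3`, and `nestedValue`, which are the three field names in the schema above.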

Created 11-30-2022 01:36 AM

Hi, thanks for your suggestions. I don't know a lot about NiFi, so it took me a while to figure out the correct Jolt transformation to obtain the structure above. Unfortunately, I also don't know how to configure the record writer properly. I can't find a good manual or examples on the web.

Which strategy do I choose? Which field do I put my desired schema in?

Created on 11-30-2022 06:36 AM - edited 11-30-2022 06:36 AM

Hi,

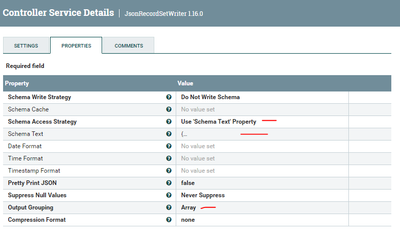

If you are able to do the Jolt transformation to produce the output specified above, then the Record Writer in ForkRecord should be set to "JsonRecordWriter", and the JsonRecordWriter can be set as follows:

The Schema Text property can be set to the following:

{
  "type": "record",
  "name": "TestObject",
  "namespace": "ca.dataedu",
  "fields": [{
    "name": "NestedKey1",
    "type": ["null", "string"],
    "default": null
  }, {
    "name": "NestedKey3",
    "type": ["null", "string"],
    "default": null
  }, {
    "name": "NestedKey2",
    "type": ["null", "string"],
    "default": null
  }]
}

This should give you the desired output you specified above.

For more information on the JsonRecordWriter, please refer to:

Hope that helps. If it does, please accept the solution.

Thanks

Created on 12-01-2022 02:33 AM - edited 12-01-2022 03:25 AM

Hi, thanks for the details. Unfortunately, it is not working. I get an empty array [] as output. I tried both extract and split mode. I set the Schema Text property as suggested, using "NestedKey" and "nestedValue" as the name. Neither gives me any output.

Meanwhile, I have achieved what I wanted using SplitContent followed by another Jolt processor. Of course, it would be more elegant if I could make it work with ForkRecord.