Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Sqoop export to create table

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Sqoop export to create table

- Labels:

-

Apache Sqoop

Created 06-28-2021 11:08 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can Sqoop export be used to create a table?

Created 06-29-2021 02:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think the answer is yes! Below I will try to demonstrate by creating a table from an existing dataset copied to HDFS

[hdfs@bern sqoop]$ hdfs dfs -ls /tmp/sqoop

Found 1 items

-rw-r--r-- 3 hdfs hdfs 400 2021-06-29 10:14 /tmp/sqoop/hr.txtContents of the file hr.txt

[hdfs@bern sqoop]$ hdfs dfs -cat /tmp/sqoop/hr.txt

100,Geoffrey,manager,50000,Admin

101,Thomas,Oracle Consultant,15000,IT

102,Biden,Project Manager,28000,PM

103,Carmicheal,Bigdata developer,30000,BDS

104,Johnson,Treasurer,21000,Accounts

105,Gerald,Director,30000,Management

106,Paul,Director,30000,Management

105,Mark,CEO,90000,Management

105,Edward,Janitor,30000,Housing

105,Richard,Farm Manager,31000,Agriculture

105,Albert,System Engineer,21000,ITYou MUST pre-create the table and database

[root@bern sqoop]# mysql -uroot -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 123

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> create database harsh8;

Query OK, 1 row affected (0.05 sec)

MariaDB [(none)]> use harsh8;

Database changedPre-create the table to receive the datasets

MariaDB [harsh8]> CREATE TABLE staff ( id INT NOT NULL PRIMARY KEY, Name VARCHAR(20), Position VARCHAR(20),Salary INT,Department VARCHAR(10));

Query OK, 0 rows affected (0.26 sec)

MariaDB [harsh8]> show tables;

+------------------+

| Tables_in_harsh8 |

+------------------+

| staff |

+------------------+

1 row in set (0.00 sec)Check the empty staff table structure

MariaDB [harsh8]> describe staff;

+------------+-------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+------------+-------------+------+-----+---------+-------+

| id | int(11) | NO | PRI | NULL | |

| Name | varchar(20) | YES | | NULL | |

| Position | varchar(20) | YES | | NULL | |

| Salary | int(11) | YES | | NULL | |

| Department | varchar(10) | YES | | NULL | |

+------------+-------------+------+-----+---------+-------+

5 rows in set (0.14 sec)The empty table before the export

MariaDB [harsh8]> select * from staff;

Empty set (0.00 sec)Run the export to import the HDFS data into the hasrh8.staff table

[hdfs@bern sqoop]$ sqoop export \

--connect jdbc:mysql://localhost/harsh8 \

--username root \

--password 'w3lc0m31' \

--table staff \

--export-dir /tmp/sqoop/hr.txtRunning sqoop job see the command snippet

21/06/29 10:23:05 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7.3.1.4.0-315

21/06/29 10:23:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

21/06/29 10:23:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

21/06/29 10:23:06 INFO tool.CodeGenTool: Beginning code generation

21/06/29 10:23:09 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `staff` AS t LIMIT 1

21/06/29 10:23:09 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `staff` AS t LIMIT 1

21/06/29 10:23:09 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/3.1.4.0-315/hadoop-mapreduce

21/06/29 10:23:22 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/5097d7a0a163272ca680207ac06da7d5/staff.jar

21/06/29 10:23:22 INFO mapreduce.ExportJobBase: Beginning export of staff

21/06/29 10:25:10 INFO client.RMProxy: Connecting to ResourceManager at bern.swiss.ch/192.168.0.139:8050

21/06/29 10:25:13 INFO client.AHSProxy: Connecting to Application History server at bern.swiss.ch/192.168.0.139:10200

21/06/29 10:25:17 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/hdfs/.staging/job_1624952474858_0001

21/06/29 10:25:50 INFO input.FileInputFormat: Total input files to process : 1

21/06/29 10:25:50 INFO input.FileInputFormat: Total input files to process : 1

21/06/29 10:25:52 INFO mapreduce.JobSubmitter: number of splits:4

21/06/29 10:25:57 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1624952474858_0001

21/06/29 10:25:57 INFO mapreduce.JobSubmitter: Executing with tokens: []

21/06/29 10:25:59 INFO conf.Configuration: found resource resource-types.xml at file:/etc/hadoop/3.1.4.0-315/0/resource-types.xml

21/06/29 10:26:01 INFO impl.YarnClientImpl: Submitted application application_1624952474858_0001

21/06/29 10:26:01 INFO mapreduce.Job: The url to track the job: http://bern.swiss.ch:8088/proxy/application_1624952474858_0001/

21/06/29 10:26:01 INFO mapreduce.Job: Running job: job_1624952474858_0001

21/06/29 10:29:36 INFO mapreduce.Job: Job job_1624952474858_0001 running in uber mode : false

21/06/29 10:29:36 INFO mapreduce.Job: map 0% reduce 0%

21/06/29 10:33:15 INFO mapreduce.Job: map 75% reduce 0%

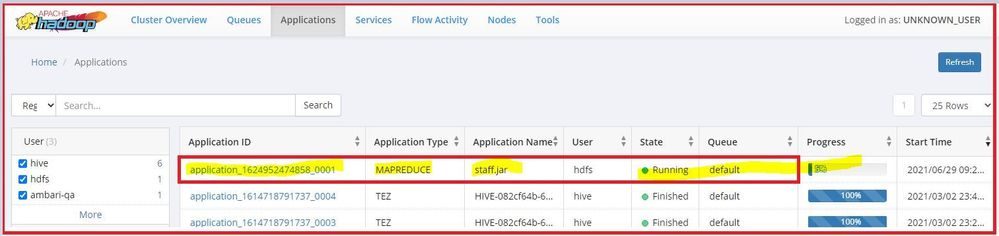

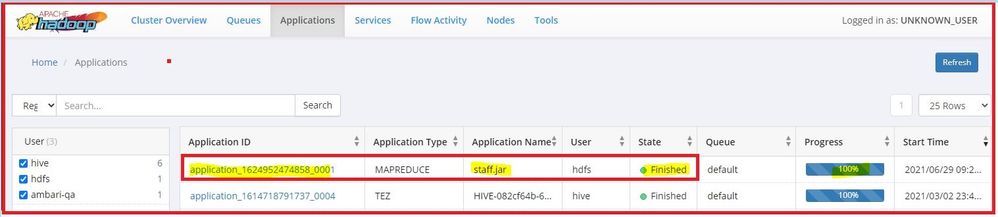

21/06/29 10:33:16 INFO mapreduce.Job: map 100% reduce 0%YARN UI showing the job running and completing

Completed successfully

Data now uploaded in the destination table

MariaDB [harsh8]> select * from staff;

+-----+------------+-------------------+--------+------------+

| id | Name | Position | Salary | Department |

+-----+------------+-------------------+--------+------------+

| 100 | Geoffrey | manager | 50000 | Admin |

| 101 | Thomas | Oracle Consultant | 15000 | IT |

| 102 | Biden | Project Manager | 28000 | PM |

| 103 | Carmicheal | Bigdata developer | 30000 | BDS |

| 104 | Johnson | Treasurer | 21000 | Accounts |

| 105 | Gerald | Director | 30000 | Management |

+-----+------------+-------------------+--------+------------+

6 rows in set (0.28 sec)I hope this answers your question

Created 07-01-2021 12:12 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 07-02-2021 04:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Shelton,

Thank you for your response, but I asked a different question that can Sqoop export command be used to create a table in RDBMS. Apologies if my question was not clear before.

Created 07-03-2021 02:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Happy to help with that question. The simple answer is YES below I am demonstrating with the table staff created in the previous post!

Before the import

[hdfs@bern ~]$ hdfs dfs -ls /tmp

Found 5 items

drwxrwxr-x - druid hadoop 0 2020-07-06 02:04 /tmp/druid-indexing

drwxr-xr-x - hdfs hdfs 0 2020-07-06 01:50 /tmp/entity-file-history

drwx-wx-wx - hive hdfs 0 2020-07-06 01:59 /tmp/hive

-rw-r--r-- 3 hdfs hdfs 1024 2020-07-06 01:57 /tmp/ida8c04300_date570620

drwxr-xr-x - hdfs hdfs 0 2021-06-29 10:14 /tmp/sqoopWhen you run the sqoop import to ensure the destination directory sqoop_harsh8 doesn't already exist in HDFS

$ sqoop import --connect jdbc:mysql://localhost/harsh8 --table staff --username root -P --target-dir /tmp/sqoop_harsh8 -m 1

Here I am importing the table harsh8.staff I created in the previous session. The sqoop export will create 2 files _SUCCESS and part-m-0000 in the HDFS directory as shown below.

After the export the directory /tmp/sqoop_harsh8 is newly created

[hdfs@bern ~]$ hdfs dfs -ls /tmp

Found 5 items

drwxrwxr-x - druid hadoop 0 2020-07-06 02:04 /tmp/druid-indexing

drwxr-xr-x - hdfs hdfs 0 2020-07-06 01:50 /tmp/entity-file-history

drwx-wx-wx - hive hdfs 0 2020-07-06 01:59 /tmp/hive

-rw-r--r-- 3 hdfs hdfs 1024 2020-07-06 01:57 /tmp/ida8c04300_date570620

drwxr-xr-x - hdfs hdfs 0 2021-06-29 10:14 /tmp/sqoop

-rw-r--r-- 3 hdfs hdfs 0 2021-07-03 22:04 /tmp/sqoop_harsh8Check the contents of /tmp/sqoop_harsh8

[hdfs@bern ~]$ hdfs dfs -ls /tmp/sqoop_harsh8

Found 2 items

-rw-r--r-- 3 hdfs hdfs 0 2021-07-03 22:04 /tmp/sqoop_harsh8/_SUCCESS

-rw-r--r-- 3 hdfs hdfs 223 2021-07-03 22:04 /tmp/sqoop_harsh8/part-m-00000The _SUCCESS is just a log file so cat the contents of part-m-00000 this is the data from our table harsh8.staff

[hdfs@bern ~]$ hdfs dfs -cat /tmp/sqoop_harsh8/part-m-00000

100,Geoffrey,manager,50000,Admin

101,Thomas,Oracle Consultant,15000,IT

102,Biden,Project Manager,28000,PM

103,Carmicheal,Bigdata developer,30000,BDS

104,Johnson,Treasurer,21000,Accounts

105,Gerald,Director,30000,ManagementI piped the contents to a text file hr2.txt in my local tmp directory to enable me run a sqoop import with an acceptable format

[hdfs@bern ~]$ hdfs dfs -cat /tmp/sqoop_harsh8/part-m-00000 > /tmp/hr2.txtValidate the hr2.txt contents

[hdfs@bern ~]$ cat /tmp/hr2.txt

100,Geoffrey,manager,50000,Admin

101,Thomas,Oracle Consultant,15000,IT

102,Biden,Project Manager,28000,PM

103,Carmicheal,Bigdata developer,30000,BDS

104,Johnson,Treasurer,21000,Accounts

105,Gerald,Director,30000,ManagementCopied the hr2.txt to HDFS and validated the file was copied

[hdfs@bern ~]$ hdfs dfs -copyFromLocal /tmp/hr2.txt /tmpValidation

[hdfs@bern ~]$ hdfs dfs -ls /tmp

Found 7 items

drwxrwxr-x - druid hadoop 0 2020-07-06 02:04 /tmp/druid-indexing

drwxr-xr-x - hdfs hdfs 0 2020-07-06 01:50 /tmp/entity-file-history

drwx-wx-wx - hive hdfs 0 2020-07-06 01:59 /tmp/hive

-rw-r--r-- 3 hdfs hdfs 223 2021-07-03 22:41 /tmp/hr2.txt

-rw-r--r-- 3 hdfs hdfs 1024 2020-07-06 01:57 /tmp/ida8c04300_date570620

drwxr-xr-x - hdfs hdfs 0 2021-06-29 10:14 /tmp/sqoop

drwxr-xr-x - hdfs hdfs 0 2021-07-03 22:04 /tmp/sqoop_harsh8Connected to MySQL and switch to harsh8 database

[root@bern ~]# mysql -uroot -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 179

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> use harsh8;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changedCheck the existing tables in the harsh8 database before the export

MariaDB [harsh8]> show tables;

+------------------+

| Tables_in_harsh8 |

+------------------+

| staff |

+------------------+

1 row in set (0.00 sec)Pre-create a table staff2 to receive the hr2.txt data

MariaDB [harsh8]> CREATE TABLE staff2 ( id INT NOT NULL PRIMARY KEY, Name VARCHAR(20), Position VARCHAR(20),Salary INT,Department VARCHAR(10));

Query OK, 0 rows affected (0.57 sec)

MariaDB [harsh8]> show tables;

+------------------+

| Tables_in_harsh8 |

+------------------+

| staff |

| staff2 |

+------------------+

2 rows in set (0.00 sec)Load data into staff2 from a sqoop export !

sqoop]$ sqoop export --connect jdbc:mysql://localhost/harsh8 --username root --password 'w3lc0m31' --table staff2 --export-dir /tmp/hr2.txt

[hdfs@bern ~]$ sqoop export --connect jdbc:mysql://localhost/harsh8 --username root --password 'w3lc0m31' --table staff2 --export-dir /tmp/hr2.txt

...

21/07/03 22:44:36 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7.3.1.4.0-315

21/07/03 22:44:37 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

21/07/03 22:44:37 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

21/07/03 22:44:37 INFO tool.CodeGenTool: Beginning code generation

21/07/03 22:44:39 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `staff2` AS t LIMIT 1

21/07/03 22:44:40 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `staff2` AS t LIMIT 1

21/07/03 22:44:40 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/hdp/3.1.4.0-315/hadoop-mapreduce

21/07/03 22:45:48 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/a53bb813b88ab155201196658f3ee001/staff2.jar

21/07/03 22:45:48 INFO mapreduce.ExportJobBase: Beginning export of staff2

21/07/03 22:47:59 INFO client.RMProxy: Connecting to ResourceManager at bern.swiss.ch/192.168.0.139:8050

21/07/03 22:48:07 INFO client.AHSProxy: Connecting to Application History server at bern.swiss.ch/192.168.0.139:10200

21/07/03 22:48:18 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/hdfs/.staging/job_1625340048377_0003

21/07/03 22:49:05 INFO input.FileInputFormat: Total input files to process : 1

21/07/03 22:49:05 INFO input.FileInputFormat: Total input files to process : 1

21/07/03 22:49:12 INFO mapreduce.JobSubmitter: number of splits:4

21/07/03 22:49:24 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1625340048377_0003

21/07/03 22:49:24 INFO mapreduce.JobSubmitter: Executing with tokens: []

21/07/03 22:49:26 INFO conf.Configuration: found resource resource-types.xml at file:/etc/hadoop/3.1.4.0-315/0/resource-types.xml

21/07/03 22:49:32 INFO impl.YarnClientImpl: Submitted application application_1625340048377_0003

21/07/03 22:49:33 INFO mapreduce.Job: The url to track the job: http://bern.swiss.ch:8088/proxy/application_1625340048377_0003/

21/07/03 22:49:33 INFO mapreduce.Job: Running job: job_1625340048377_0003

21/07/03 22:52:15 INFO mapreduce.Job: Job job_1625340048377_0003 running in uber mode : false

21/07/03 22:52:15 INFO mapreduce.Job: map 0% reduce 0%

21/07/03 22:56:45 INFO mapreduce.Job: map 75% reduce 0%

21/07/03 22:58:10 INFO mapreduce.Job: map 100% reduce 0%

21/07/03 22:58:13 INFO mapreduce.Job: Job job_1625340048377_0003 completed successfully

21/07/03 22:58:14 INFO mapreduce.Job: Counters: 32

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=971832

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1132

HDFS: Number of bytes written=0

HDFS: Number of read operations=19

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=4

Data-local map tasks=4

Total time spent by all maps in occupied slots (ms)=1733674

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=866837

Total vcore-milliseconds taken by all map tasks=866837

Total megabyte-milliseconds taken by all map tasks=1775282176

Map-Reduce Framework

Map input records=6

Map output records=6

Input split bytes=526

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=1565

CPU time spent (ms)=6710

Physical memory (bytes) snapshot=661999616

Virtual memory (bytes) snapshot=12958916608

Total committed heap usage (bytes)=462422016

Peak Map Physical memory (bytes)=202506240

Peak Map Virtual memory (bytes)=3244965888

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

21/07/03 22:58:14 INFO mapreduce.ExportJobBase: Transferred 1.1055 KB in 630.3928 seconds (1.7957 bytes/sec)

21/07/03 22:58:14 INFO mapreduce.ExportJobBase: Exported 6 records.Switch and log onto MariaDB, switch to harsh8 database, and query for the new table staff2

[root@bern ~]# mysql -uroot -pwelcome1

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 266

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> use harsh8;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [harsh8]> show tables;

+------------------+

| Tables_in_harsh8 |

+------------------+

| staff |

| staff2 |

+------------------+

2 rows in set (2.64 sec)

MariaDB [harsh8]> SELECT NOW();

+---------------------+

| NOW() |

+---------------------+

| 2021-07-03 23:02:38 |

+---------------------+

1 row in set (0.00 sec)

After the import

MariaDB [harsh8]> select * from staff2;

+-----+------------+-------------------+--------+------------+

| id | Name | Position | Salary | Department |

+-----+------------+-------------------+--------+------------+

| 100 | Geoffrey | manager | 50000 | Admin |

| 101 | Thomas | Oracle Consultant | 15000 | IT |

| 102 | Biden | Project Manager | 28000 | PM |

| 103 | Carmicheal | Bigdata developer | 30000 | BDS |

| 104 | Johnson | Treasurer | 21000 | Accounts |

| 105 | Gerald | Director | 30000 | Management |

+-----+------------+-------------------+--------+------------+

6 rows in set (0.00 sec)Check the source table used in the export see the timestamp !

MariaDB [harsh8]> SELECT NOW();

+---------------------+

| NOW() |

+---------------------+

| 2021-07-03 23:04:50 |

+---------------------+

1 row in set (0.00 sec)Comparison

MariaDB [harsh8]> select * from staff;

+-----+------------+-------------------+--------+------------+

| id | Name | Position | Salary | Department |

+-----+------------+-------------------+--------+------------+

| 100 | Geoffrey | manager | 50000 | Admin |

| 101 | Thomas | Oracle Consultant | 15000 | IT |

| 102 | Biden | Project Manager | 28000 | PM |

| 103 | Carmicheal | Bigdata developer | 30000 | BDS |

| 104 | Johnson | Treasurer | 21000 | Accounts |

| 105 | Gerald | Director | 30000 | Management |

+-----+------------+-------------------+--------+------------+You have successfully created a table from a Sqoop export!

Et Voila

The conversion from part-m-00000 to txt did the trick, so this proves it's doable so you question is answered 🙂 You can revalidate by following my steps

Happy hadooping !