Sqoop hook doesn't work for atlas?

Created 08-17-2016 04:01 AM

I installed Atlas and Sqoop separately; I am not using HDP.

After executing this command:

sqoop import -connect jdbc:mysql://master:3306/hive -username root -password admin -table TBLS -hive-import -hive-table sqoophook1

Sqoop shows that the data was imported into Hive successfully and never reports an error.

I then searched for the sqoop_process type in the Atlas UI, but no results were found. Why?

Here is my configuration process:

Step 1: Set the <sqoop-conf>/sqoop-site.xml

<property> <name>sqoop.job.data.publish.class</name> <value>org.apache.atlas.sqoop.hook.SqoopHook</value> </property>

Step 2: Copy the <atlas-conf>/atlas-application.properties to <sqoop-conf>

Step 3: Link <atlas-home>/hook/sqoop/*.jar in sqoop lib.
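
Steps 1 and 2 can be sanity-checked from the shell before running an import. The following is only a sketch: the function name `check_sqoop_hook_conf` and the `SQOOP_CONF_DIR` variable are assumptions made for illustration, and the check only confirms that the files look right, not that Sqoop actually loads the hook.

```shell
# Sketch: verify Steps 1 and 2 of the Sqoop hook setup.
# Argument: the <sqoop-conf> directory.
check_sqoop_hook_conf() {
  local conf_dir="$1"
  local rc=0

  # Step 1: the publisher class must be named in sqoop-site.xml.
  if grep -qF 'org.apache.atlas.sqoop.hook.SqoopHook' "$conf_dir/sqoop-site.xml" 2>/dev/null; then
    echo "OK: SqoopHook is referenced in sqoop-site.xml"
  else
    echo "MISSING: SqoopHook is not referenced in sqoop-site.xml"
    rc=1
  fi

  # Step 2: atlas-application.properties must be present in the same directory.
  if [ -f "$conf_dir/atlas-application.properties" ]; then
    echo "OK: atlas-application.properties found"
  else
    echo "MISSING: atlas-application.properties not found"
    rc=1
  fi

  return "$rc"
}

# Runs only when SQOOP_CONF_DIR (an assumed variable) is set.
if [ -n "${SQOOP_CONF_DIR:-}" ]; then
  check_sqoop_hook_conf "$SQOOP_CONF_DIR" || echo "Sqoop hook configuration looks incomplete"
fi
```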

Are these configuration steps wrong?

Here is the output:

sqoop import -connect jdbc:mysql://zte-1:3306/hive -username root -password admin -table TBLS -hive-import -hive-table sqoophook2
Warning: /var/local/hadoop/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
Warning: /var/local/hadoop/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.
Please set $ACCUMULO_HOME to the root of your Accumulo installation.
16/08/23 01:04:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6
16/08/23 01:04:04 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
16/08/23 01:04:04 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override
16/08/23 01:04:04 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.
16/08/23 01:04:05 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
16/08/23 01:04:05 INFO tool.CodeGenTool: Beginning code generation
16/08/23 01:04:05 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:04:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:04:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /var/local/hadoop/hadoop-2.6.0
Note: /tmp/sqoop-hdfs/compile/2606be5f25a97674311440065aac302d/TBLS.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
16/08/23 01:04:09 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/2606be5f25a97674311440065aac302d/TBLS.jar
16/08/23 01:04:09 WARN manager.MySQLManager: It looks like you are importing from mysql.
16/08/23 01:04:09 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
16/08/23 01:04:09 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
16/08/23 01:04:09 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
16/08/23 01:04:09 INFO mapreduce.ImportJobBase: Beginning import of TBLS
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/var/local/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/var/local/hadoop/hbase-1.1.5/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
16/08/23 01:04:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/08/23 01:04:10 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
16/08/23 01:04:11 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
16/08/23 01:04:11 INFO client.RMProxy: Connecting to ResourceManager at zte-1/192.168.136.128:8032
16/08/23 01:04:16 INFO db.DBInputFormat: Using read commited transaction isolation
16/08/23 01:04:16 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`TBL_ID`), MAX(`TBL_ID`) FROM `TBLS`
16/08/23 01:04:17 INFO mapreduce.JobSubmitter: number of splits:4
16/08/23 01:04:17 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1471882959657_0001
16/08/23 01:04:19 INFO impl.YarnClientImpl: Submitted application application_1471882959657_0001
16/08/23 01:04:19 INFO mapreduce.Job: The url to track the job: http://zte-1:8088/proxy/application_1471882959657_0001/
16/08/23 01:04:19 INFO mapreduce.Job: Running job: job_1471882959657_0001
16/08/23 01:04:37 INFO mapreduce.Job: Job job_1471882959657_0001 running in uber mode : false
16/08/23 01:04:37 INFO mapreduce.Job: map 0% reduce 0%
16/08/23 01:05:05 INFO mapreduce.Job: map 25% reduce 0%
16/08/23 01:05:07 INFO mapreduce.Job: map 100% reduce 0%
16/08/23 01:05:08 INFO mapreduce.Job: Job job_1471882959657_0001 completed successfully
16/08/23 01:05:08 INFO mapreduce.Job: Counters: 30
    File System Counters
        FILE: Number of bytes read=0
        FILE: Number of bytes written=529788
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=426
        HDFS: Number of bytes written=171
        HDFS: Number of read operations=16
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=8
    Job Counters
        Launched map tasks=4
        Other local map tasks=4
        Total time spent by all maps in occupied slots (ms)=102550
        Total time spent by all reduces in occupied slots (ms)=0
        Total time spent by all map tasks (ms)=102550
        Total vcore-seconds taken by all map tasks=102550
        Total megabyte-seconds taken by all map tasks=105011200
    Map-Reduce Framework
        Map input records=3
        Map output records=3
        Input split bytes=426
        Spilled Records=0
        Failed Shuffles=0
        Merged Map outputs=0
        GC time elapsed (ms)=1227
        CPU time spent (ms)=3640
        Physical memory (bytes) snapshot=390111232
        Virtual memory (bytes) snapshot=3376676864
        Total committed heap usage (bytes)=74018816
    File Input Format Counters
        Bytes Read=0
    File Output Format Counters
        Bytes Written=171
16/08/23 01:05:08 INFO mapreduce.ImportJobBase: Transferred 171 bytes in 57.2488 seconds (2.987 bytes/sec)
16/08/23 01:05:08 INFO mapreduce.ImportJobBase: Retrieved 3 records.
16/08/23 01:05:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:05:08 INFO hive.HiveImport: Loading uploaded data into Hive
16/08/23 01:05:19 INFO hive.HiveImport:
16/08/23 01:05:19 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/var/local/hadoop/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/var/local/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/var/local/hadoop/hbase-1.1.5/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
16/08/23 01:05:31 INFO hive.HiveImport: OK
16/08/23 01:05:31 INFO hive.HiveImport: Time taken: 3.481 seconds
16/08/23 01:05:31 INFO hive.HiveImport: Loading data to table default.sqoophook2
16/08/23 01:05:33 INFO hive.HiveImport: Table default.sqoophook2 stats: [numFiles=4, totalSize=171]
16/08/23 01:05:33 INFO hive.HiveImport: OK
16/08/23 01:05:33 INFO hive.HiveImport: Time taken: 1.643 seconds
16/08/23 01:05:35 INFO hive.HiveImport: Hive import complete.
16/08/23 01:05:35 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

Created 08-17-2016 10:30 AM

Could you also confirm whether sqoop-site.xml has the REST address of the Atlas server configured?

Sample configuration is available here

Created 08-22-2016 09:36 AM

After I added the atlas.rest.address property to sqoop-site.xml, the problem remained: searching for sqoop_process in the Atlas Web UI returns no results.

However, the Hive hook works; it captures the imported hive_table, which is shown in the Atlas Web UI.

I have pasted the output in the next answer. The output doesn't report any errors.

I remember that when I configured the Hive hook, I added some JAR paths to HIVE_AUX_JARS_PATH, but the Sqoop hook configuration process has no such step.

Is it necessary to add JAR paths for Sqoop as well? It seems that the SqoopHook class doesn't work.

Created 08-23-2016 03:52 AM

Step 3 ("Link <atlas-home>/hook/sqoop/*.jar in sqoop lib") should take care of adding the required JAR files to the Sqoop classpath.

Created 08-23-2016 05:49 AM

How do I link these JARs?

Should I copy them into <sqoop-home>/lib?

Or should I use the command: ln -s <atlas-home>/hook/sqoop/* <sqoop-home>/lib/ ?
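
For reference, a minimal sketch of the symlink variant. It assumes that `ATLAS_HOME` and `SQOOP_HOME` point at the two install directories; those variable names and the helper `link_hook_jars` are illustrative and not part of Sqoop or Atlas. It links only `*.jar` files, matching the wording of Step 3, and should be given absolute paths so the links resolve from inside the lib directory.

```shell
# Sketch: expose the Atlas Sqoop hook JARs to Sqoop via symlinks.
# Arguments: <hook-dir> <sqoop-lib-dir>, both as absolute paths.
link_hook_jars() {
  local hook_dir="$1"
  local lib_dir="$2"
  local jar

  for jar in "$hook_dir"/*.jar; do
    # If the glob matched nothing, "$jar" is the literal pattern; skip it.
    [ -e "$jar" ] || continue
    # -f replaces a stale link left over from an earlier attempt.
    ln -sf "$jar" "$lib_dir/"
  done
}

# Runs only when both (assumed) variables are set.
if [ -n "${ATLAS_HOME:-}" ] && [ -n "${SQOOP_HOME:-}" ]; then
  link_hook_jars "$ATLAS_HOME/hook/sqoop" "$SQOOP_HOME/lib"
fi
```

Listing `<sqoop-home>/lib` afterwards should show one link per hook JAR. Copying the files instead of linking them would presumably have the same effect on the classpath, at the cost of having to repeat the copy after an Atlas upgrade.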

Created 08-19-2016 07:56 PM

Can you paste the console output for the executed sqoop command here? Also please make sure to add the atlas.rest.address property to the sqoop-site.xml or atlas-application.properties file and run the command to see if there is any difference.
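
A quick way to see which of the two files already carries the property is sketched below. The function name `find_rest_address` and the `SQOOP_CONF_DIR` variable are made up for illustration; the check only looks for the property name and does not validate the address itself.

```shell
# Sketch: print the config files under <sqoop-conf> that mention atlas.rest.address.
# Argument: the <sqoop-conf> directory.
find_rest_address() {
  local conf_dir="$1"
  local f

  for f in "$conf_dir/sqoop-site.xml" "$conf_dir/atlas-application.properties"; do
    if [ -f "$f" ] && grep -q 'atlas\.rest\.address' "$f"; then
      echo "$f"
    fi
  done
}

# Runs only when SQOOP_CONF_DIR (an assumed variable) is set.
if [ -n "${SQOOP_CONF_DIR:-}" ]; then
  find_rest_address "$SQOOP_CONF_DIR"
fi
```

Empty output means neither file sets the property.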

Created 08-22-2016 09:35 AM

After I added the atlas.rest.address property to sqoop-site.xml, the problem remained: searching for sqoop_process in the Atlas Web UI returns no results.

However, the Hive hook works; it captures the imported hive_table, which is shown in the Atlas Web UI.

I have pasted the output in the next answer. The output doesn't report any errors.

I remember that when I configured the Hive hook, I added some JAR paths to HIVE_AUX_JARS_PATH, but the Sqoop hook configuration process has no such step.

Is it necessary to add JAR paths for Sqoop as well? It seems that the SqoopHook class doesn't work.

Created 08-22-2016 09:16 AM

The Sqoop hook still doesn't work. The console output of the executed sqoop command is identical to the output pasted in the question above.

Created 08-23-2016 03:55 AM

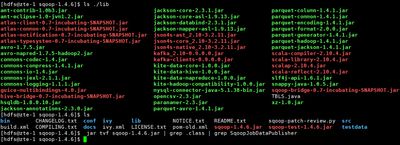

It is possible that only certain versions of Sqoop support the hook; the output of the command doesn't seem to contain any Kafka entry from the hook.

Would it be possible to provide the output of this command?

jar tvf sqoop-<version>.jar | grep .class | grep SqoopJobDataPublisher

The output should look like this:

$ jar tvf sqoop-1.4.7-SNAPSHOT.jar | grep .class | grep SqoopJobDataPublisher

3462 Fri Jan 22 12:15:04 IST 2016 org/apache/sqoop/SqoopJobDataPublisher$Data.class

644 Fri Jan 22 12:15:04 IST 2016 org/apache/sqoop/SqoopJobDataPublisher.class
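
The same check can be wrapped in a small helper that answers yes or no for each Sqoop JAR. This is only a sketch: `has_publisher_class` is a made-up name, `SQOOP_HOME` is an assumed variable, and the loop relies on the JDK `jar` tool being on the PATH. If the class is missing, the publisher configured in sqoop-site.xml presumably has nothing to plug into.

```shell
# Sketch: read a JAR listing (for example from `jar tf`) on stdin and
# succeed only if it contains the SqoopJobDataPublisher class itself.
has_publisher_class() {
  grep -q 'org/apache/sqoop/SqoopJobDataPublisher\.class'
}

# Runs only when SQOOP_HOME (an assumed variable) is set and `jar` is available.
if [ -n "${SQOOP_HOME:-}" ] && command -v jar >/dev/null 2>&1; then
  for j in "$SQOOP_HOME"/sqoop-*.jar; do
    # Skip the literal pattern when no JAR matches.
    [ -e "$j" ] || continue
    if jar tf "$j" | has_publisher_class; then
      echo "$j: SqoopJobDataPublisher present"
    else
      echo "$j: SqoopJobDataPublisher missing"
    fi
  done
fi
```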

Created on 08-23-2016 06:05 AM - edited 08-19-2019 04:48 AM

This command produces no output.

This is the result shown in the terminal. I also pasted the screenshot next to it.

[hdfs@zte-1 sqoop-1.4.6]$ ls
bin CHANGELOG.txt conf ivy lib NOTICE.txt README.txt sqoop-patch-review.py src
build.xml COMPILING.txt docs ivy.xml LICENSE.txt pom-old.xml sqoop-1.4.6.jar sqoop-test-1.4.6.jar testdata
[hdfs@zte-1 sqoop-1.4.6]$ jar tvf sqoop-1.4.6.jar | grep .class | grep SqoopJobDataPublisher
[hdfs@zte-1 sqoop-1.4.6]$